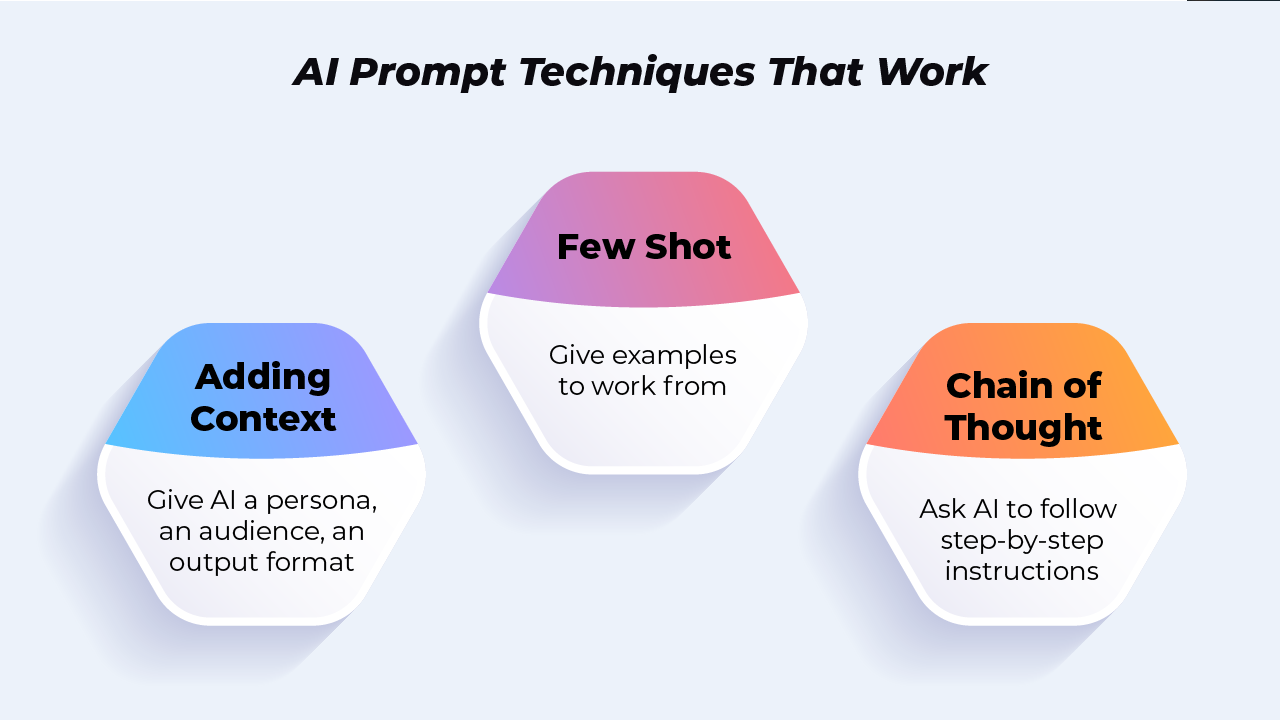

We open this edition of our bi-monthly AI roundup with a prompting question: can you name three AI prompting techniques that regularly improve the quality of AI responses?

With studies increasingly showing that prompts really matter for AI output (so much so that even AI developers themselves are unsure what their models are capable of), we’re also seeing some odd things, as covered in research by VMware authors Rick Battle and Teja Gallapudi.

Their study found that framing AI prompts as Star Trek scenarios (for sets of 50 math problems, for example: “Command, we need you to…” or asking the AI to start its response with “Captain’s Log, Stardate 2024…”) or as high-risk thrillers (for sets of 100, such as: “The life of the president’s advisor hangs in the balance…”) consistently produced better results than prompts without such framing.

And no one is really sure why.

One theory is that AI, like the people who wrote its training data, performs better when prompted with urgency or encouragement, which leads us to one of this edition’s topics: the quirky benefits (vs real risks) of anthropomorphizing AIs.
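To make the study's framing idea concrete, here is a minimal Python sketch of how you might build a plain prompt and two "eccentric" variants of the same question to compare side by side. The openers are the fragments quoted above, ellipses included. The names `FRAMINGS`, `build_prompt`, and `build_variants` are our own, and the way the opener is attached to the question is an assumption for illustration, not the authors' actual setup.

```python
from typing import Dict

# Openers quoted in the article, kept verbatim (ellipses included).
# How the study really assembled its prompts is not described here,
# so this structure is an illustrative assumption only.
FRAMINGS: Dict[str, str] = {
    "plain": "",
    "star_trek": "Command, we need you to…",
    "thriller": "The life of the president’s advisor hangs in the balance…",
}


def build_prompt(question: str, framing: str = "plain") -> str:
    """Return the question, preceded by the chosen opener if there is one."""
    opener = FRAMINGS[framing]
    if not opener:
        return question
    return f"{opener}\n\n{question}"


def build_variants(question: str) -> Dict[str, str]:
    """Build one prompt per framing so the responses can be compared."""
    return {name: build_prompt(question, name) for name in FRAMINGS}
```

Calling `build_variants("What is 17 + 25?")` returns three prompts keyed by framing name. You could send each to the model of your choice and compare the answers across a whole set of questions, not just one.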

In March and April, the first signs of an AI cooldown appeared on Wall Street. If 2024 is truly the “show me the money” year for AI, the pressure to deliver may be mounting now, though not every company is feeling it.

Our focus in this edition: AI usage trends, leadership and regulation, innovations, and a look at the obvious cons (and some pros) of anthropomorphizing our AIs.

[Check out our prior AI roundup to catch up on events from January and February!]

A(n Updated) Look at Usage

Despite the massive volume of people using AI every day around the world, updated US stats from the Pew Research Center give us some context:

- 34% of those surveyed claimed to have heard nothing at all about ChatGPT

- 48% had never used ChatGPT either for entertainment or to learn something new

- 53% had not used it for tasks at work

- (And thankfully, only 2% admit to using it to get information on the 2024 Presidential election.)

Despite this, the number of people who say they use ChatGPT for work tasks rose strikingly (by two-thirds) over the last six months, an increase that may say more about AI’s startlingly fast workplace adoption than the numbers above might lead you to believe. (This surge is greater, over a similar time span, than that of almost any tech we’ve seen.)

Speaking of numbers, with Alphabet/Google, Microsoft, and Meta reporting earnings (Nvidia reports on its own next month), the cooldown first feared in hardware was not shared across all the big providers. Alphabet (sales up 15%, plus its first-ever dividend) and Microsoft (also beating expectations, with a 17% jump in revenue and 20% in profits) continued booming. Meta, meanwhile, cooled, with a 10% drop in stock price on softer earnings and a bigger cash investment in AI.

On the hardware side, chip-related companies ASML and TSMC both lost 5% in value mid-April, with Nvidia, AMD, and Micron all seeing some signs of difficulty after spectacular growth.

And companies continue to buy into AI (e.g., Coca-Cola announced a $1.1 billion deal for Microsoft’s cloud and AI services in late April).

Leadership and Regulation

With March being Women’s History Month, we open this section with a look at the role of women in the leadership of AI companies, something often overlooked or omitted entirely, even by major publications like the New York Times.

As profiled by AI entrepreneur Dr. Ayesha Khanna, here are 12 emerging AI startups with women as founders or co-founders:

- Adept: Kelsey Szot

- Anthropic: President Daniela Amodei

- insitro: Daphne Koller (also CEO)

- Kumo.AI: Hema Raghavan

- Notion: Jessica Lam

- Pika: Demi G. (also CEO)

- Rosebud AI: founder Lisha Li (also CEO)

- Scale AI: Lucy Guo

- Synthesia: Lourdes Agapito

- Vannevar Labs: President Nini Hamrick

- Waabi: founder Raquel Urtasun (also CEO)

- Writer: May Habib (also CEO)

Note that these 12 are the only companies on the Forbes Top 50 AI Startups list for 2024 that were co-founded or are led by women. Clearly, there is still work to do for women to have an equal seat at the table in AI.

In terms of governance, March and April saw continued regulation and policy updates, such as the White House’s guidance to federal agencies, which made the following binding:

- Each government agency must appoint a senior, chief artificial intelligence officer

- The government will build an AI workforce, hiring at least 100 professionals by summer (see below)

- Any agency using AI must have safeguards in place (assessing, testing, and monitoring impact) by 12/1, or stop use

- Each agency (except intelligence and Department of Defense) must annually share an online inventory of how they’re using AI and its risks

As Shalanda Young, Director of the Office of Management and Budget (OMB), told the press: “The public deserves confidence that the federal government will use the technology responsibly.”

The New York Times in March profiled the Department of Homeland Security’s move into AI, as one of the first US agencies to embrace it across divisions, launching a partnership with Meta, Anthropic, and OpenAI.

As part of its plan, the department aims to add 50 AI experts of its own, continuing a trend that sees governments in the US, Europe, and the UK looking to hire AI experts at a time when private sector compensation packages are hard to even approximate.

As Anthropic cofounder Jack Clark said of EU offers on X/Twitter: “You don’t need to be competitive with industry, but you definitely need to be in the ballpark.”

Concerning global AI regulations, the approval of the EU AI Act made it the world’s first comprehensive AI law to be formally passed.

Also this month: debate rages on about AI and the future of work, with a number of sources questioning the initial assertion that generative AI developments will absolutely mean mass layoffs (as in, 300 million lost jobs worldwide).

Check out our PTP Report article AI: Reasons for Hope for an in-depth look at this question, as we profile MIT economist David Autor and his belief that AI may, in fact, help rebuild the American middle class.

Tech Innovations

With deep learning advancements seeing LLM effectiveness doubling every 5–14 months, AI capabilities are improving far faster than the hardware that runs them.
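To get a feel for what a 5–14 month doubling time means over a single year, here is a small back-of-the-envelope sketch. It assumes smooth exponential growth at a constant doubling time, which is our simplification rather than something the figure above guarantees, and `growth_factor` is a helper name of our own.

```python
def growth_factor(months_elapsed: float, doubling_months: float) -> float:
    """Multiplicative improvement after months_elapsed, assuming a
    constant doubling time (a simplifying assumption)."""
    return 2 ** (months_elapsed / doubling_months)


fast = growth_factor(12, 5)   # doubling every 5 months
slow = growth_factor(12, 14)  # doubling every 14 months

print(f"One year at a 5-month doubling time:  x{fast:.2f}")
print(f"One year at a 14-month doubling time: x{slow:.2f}")
```

Under that assumption, a year of progress works out to somewhere between roughly 1.8x and 5.3x. That is a wide range, and a reminder of how much depends on which end of the 5–14 month estimate turns out to be right.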

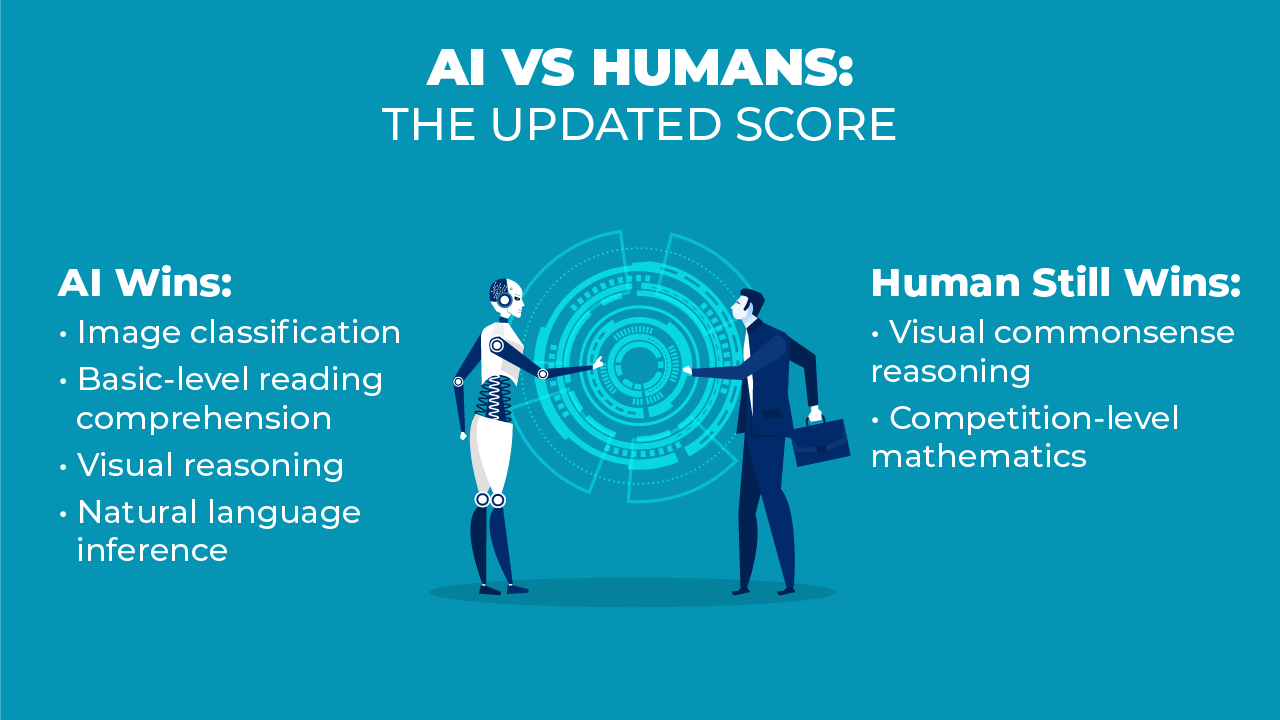

In Stanford’s epic Artificial Intelligence Index Report 2024 this month, we see just how fast AI is gaining on people, too, even in areas where it has traditionally underperformed.

Regardless of user support for generative AI tools, Meta rolled out AI across their consumer offerings in April, with their chatbot “Meta AI” appearing across Facebook, Instagram, Messenger, and WhatsApp, while OpenAI made waves when it launched Sora, a generative AI model that turns prompts into (sometimes) stunningly real video.

On the hardware front, the chip race continues to heat up, with Nvidia releasing what it calls “the world’s most powerful chip” for AI in the Blackwell B200, radically boosting capacity while also reducing energy consumption, but at a potential cost, per GPU, of $30,000–$40,000.

Intel, meanwhile, launched a challenger for Nvidia’s H100 (at lower cost), and received an enormous grant ($8.5 billion) via the US government’s CHIPS Act, making it yet another force to reckon with in the field of AI chips.

DARPA, the Defense Advanced Research Projects Agency, announced in April that an AI-piloted aircraft (with a human onboard for safety) had engaged in aerial, simulated dogfights with a human-flown F-16 aircraft, fulfilling a scenario long popularized in fiction.

Conclusion: Anthropomorphizing AI

We conclude this edition with a topic that continues to circulate in 2024: our tendency, as people, to think of AIs as living entities.

The tendency to ascribe human qualities to AI is generally perceived as dangerous, as it:

- Mystifies science (obscuring real concerns like scraped data sources not being properly attributed, and inherent bias)

- Empowers tech companies (as the makers of a new life form) vs users themselves (who keep finding ways to get the most out of these tools with human ingenuity)

- Encourages over-sharing of data (risking exposure of personal information with wrongly-placed trust)

- Increases fear of the technology (as the unknown, and what it might do to us in the future)

But now here’s a voice for the reverse. In a blog post entitled On the necessity of a sin, the Wharton School’s Ethan Mollick suggests that the best users of AI now may be managers and educators, in part because they’re skilled at getting the best from the people they work with.

Mollick proposes letting AI be “weird,” and treating prompting as a form of conversation, where the AI can be steered with feedback and corrections. In this way, we may actually get the most out of current genAI models by treating them more like people.

We hope you’ve enjoyed this edition’s coverage of some of the more noteworthy developments in AI from March and April!

Keep an eye out for the next installment of our AI roundup in June.


References

The Unreasonable Effectiveness of Eccentric Automatic Prompts, arXiv (2/20/24)

Artificial Intelligence Index Report 2024, Stanford University

2024 Pew Research Center’s American Trends Panel, Wave 142 Internet Topline, February 7-11, 2024, Pew Research

Here’s Proof the AI Boom Is Real: More People Are Tapping ChatGPT at Work, Wired

The White House issued new rules on how government can use AI. Here’s what they do, NPR

The Department of Homeland Security Is Embracing A.I., New York Times

Coca-Cola signs $1.1 billion deal to use Microsoft cloud, AI services, Reuters

Regulators Need AI Expertise. They Can’t Afford It, Wired

Chip race: Microsoft, Meta, Google, and Nvidia battle it out for AI chip supremacy, The Verge