Investment in AI agents is surging.

But that doesn’t mean adoption is going off without a hitch. With more developers leaning on AI tools to meet increasing demands, the importance of checking generated code (in other words, software testing) has never been greater.

While there’s no doubt AI-generated code is improving, even dazzling at times, it can also baffle with its periodic shortcomings. And by most accounts it fares worse with the stricter requirements of established codebases than with ad hoc weekend projects, where it shines.

When we do reach the much-teased 80/20 future, in which software engineers use AI to generate the vast majority of code, we’re also going to need effective software test automation in place.

Luckily, agentic AI in software testing is also advancing quickly, and while it’s similarly not ready to take over without human oversight, it is providing an enormous boost to QA, enabling far more coverage, more efficiently than before.

In today’s PTP Report, we return to the topic of AI agents in software testing, looking at the state of real-world implementations aiming to keep pace with increasing demands.

[Check out our first article on AI testing agents from early April, which looks at the Nvidia HEPH framework.]

AI Agents for Software Testing: One Step Past AI Automation

Manual testing done by experienced experts is still the best.

But then, it’s never been realistic to give everything that level of attention. Traditional test automation has long helped companies fill some of the gap and remains effective for repetitive, simple scenarios in well-established frameworks.

But it also demands hours of maintenance and breaks down fast as complexity grows. Teams struggle with slow test runs, thorough QA that holds up releases, too little time to create and maintain quality tests, flaky tests, and coverage that’s simply too low.

Non-agentic AI solutions are already effectively helping many companies with some of this pain by accelerating the writing and maintenance of tests. And comparing AI testing vs traditional testing isn’t the goal anyway—because as anyone who’s used AI in testing knows, it still makes mistakes.

The goal is to extend the testing expertise you do have, letting AI take on tasks human QA teams can’t get to now, and with it also extend coverage.

But even this isn’t enough to keep up with accelerating code creation, which, as Szilárd Széll, DevOps Transformation Lead at Eficode (and Tester of the Year for Finland, 2024), tells Richard Seidl, is producing more code duplication, more copy/pasted code, and more security vulnerabilities as AI coding tools advance.

Agentic AI, with systems capable of taking autonomous action, is the next step.

Three Types of Autonomous Testing Agents

For starters, the words “agent” and “agentic” are getting added to many AI testing tools right now, whether they can really act autonomously or not.

And there is a lot of gray area, such as self-healing tests, AI systems that offer suggestions on failed test runs, or systems that can apply some limited reasoning steps when they run into issues.

Actual agentic systems differ in that they don’t just execute test cases but also learn, adapt, and optimize autonomously. Agents can refine their approach, recognize context shifts, and evolve as an application changes.

This is different from, for example, searching a UI for terms (as humans do), which can give the illusion of adaptation when a UI element is moved or resized.

Agentic systems can create more of a feedback loop, rather than a series of isolated, linear executions.

Seidl breaks down the types of AI testing agents into:

- Rule-Based: These execute according to predefined rules. They are most effective when you can predetermine possible outcomes, but this also makes them less effective at handling the unexpected, requiring greater human intervention in such cases.

- Machine Learning (ML): We learn by doing, and that’s the goal of this approach, too. By taking into account prior test executions, application behavior, and user behavior, this approach refines itself through practice. It is best at generating dynamic tests and finding unexpected defects but also introduces a number of challenges due to its unpredictable nature (see below).

- Hybrid: By combining predefined logic with ML, agents keep more reliability for validation while also gaining an improved ability to optimize and adapt over time. One example is having multiple AI systems work together; you can read more about multi-agent systems in our prior PTP Report.
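To make the rule-based vs. hybrid distinction more concrete, here is a minimal Python sketch of a failure-triage agent. It is not taken from Seidl or from any tool mentioned in this article: the rule table, the `HybridTriageAgent` class, and its parameters are hypothetical, and the "learned" part is reduced to a simple running flakiness estimate rather than a real ML model.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical rule table: a substring in the failure message maps to a fixed verdict.
RULES: Dict[str, str] = {
    "AssertionError": "real_failure",
    "ConnectionRefused": "environment_issue",
}


def rule_based_verdict(message: str) -> Optional[str]:
    """Rule-based step: return a verdict only when a predefined rule matches."""
    for pattern, verdict in RULES.items():
        if pattern in message:
            return verdict
    return None  # Unexpected case; a purely rule-based agent would need a human here.


@dataclass
class HybridTriageAgent:
    """Hypothetical hybrid agent: fixed rules first, a learned estimate as fallback."""

    alpha: float = 0.3            # weight given to the newest observation
    flaky_threshold: float = 0.5  # above this, unexplained failures are retried
    flakiness: Dict[str, float] = field(default_factory=dict)

    def record(self, test: str, passed_on_retry: bool) -> None:
        """Update an exponentially weighted estimate of how often a failure
        of this test clears on retry (i.e., how flaky it has been)."""
        prev = self.flakiness.get(test, 0.0)
        self.flakiness[test] = (1 - self.alpha) * prev + self.alpha * float(passed_on_retry)

    def triage(self, test: str, message: str) -> str:
        verdict = rule_based_verdict(message)
        if verdict is not None:
            return verdict  # Predictable, auditable path.
        # No rule matched: fall back on what past runs say about this test.
        if self.flakiness.get(test, 0.0) > self.flaky_threshold:
            return "retry"
        return "escalate_to_human"
```

The rules keep known verdicts predictable, while the running estimate lets the agent treat an unmatched `TimeoutError` differently once history shows the test tends to pass on retry: after three `record("test_login", True)` calls the estimate is about 0.66, so `triage` returns `"retry"` instead of `"escalate_to_human"`.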

Agentic AI Software Testing Today: Real-World Functionalities

Many AI-powered QA automation systems today are geared to address only specific pain points, such as writing new tests faster, maintaining existing tests, generating test data, and speeding up test execution.

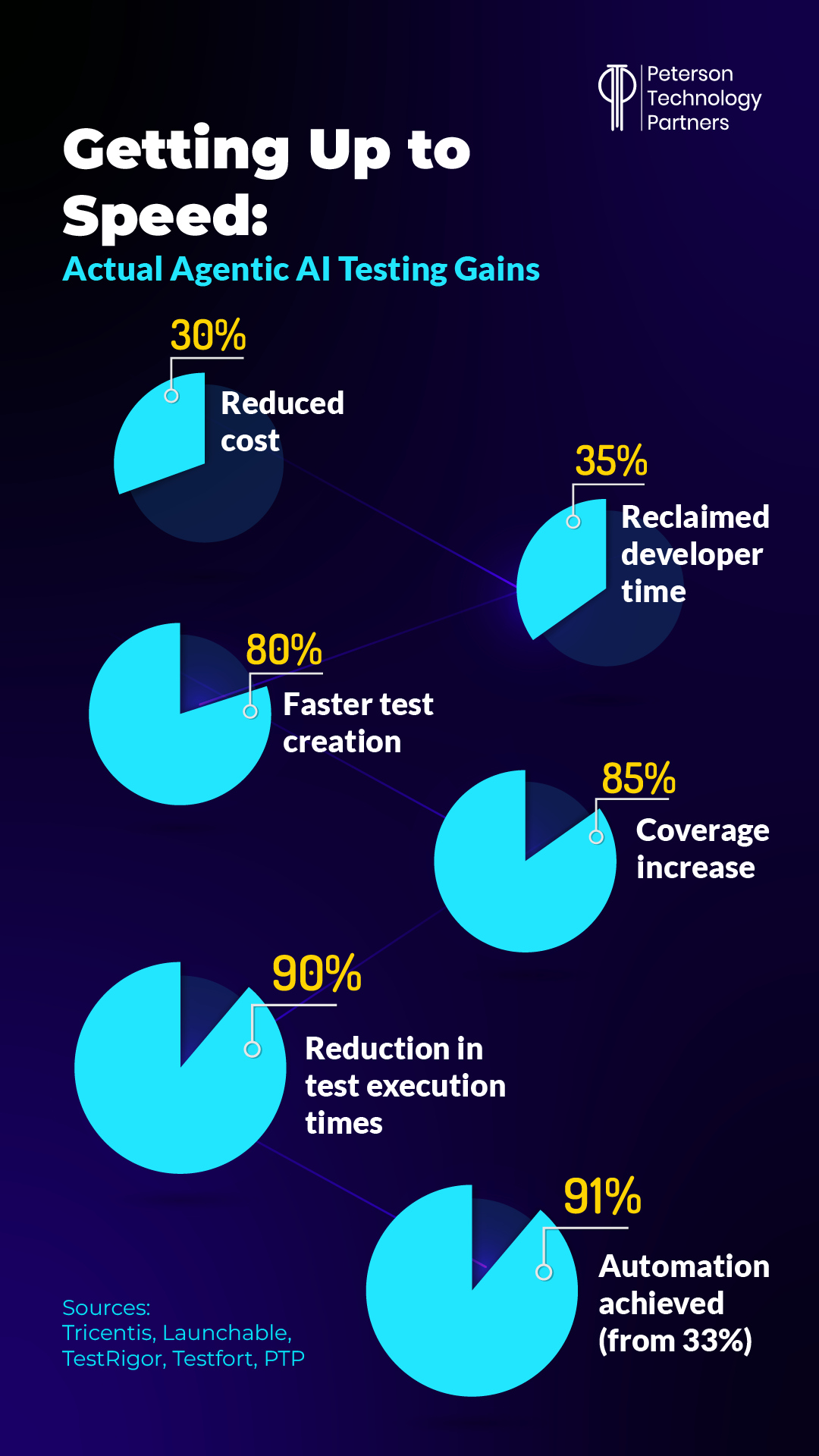

And while none are able to truly take over testing without human assistance, they are generating real results.

Some of these AI-powered QA tools also include no-code or natural-language test generation, as well as recorded steps for AI agents to work from. By simply demonstrating or describing what you need, you can have the AI generate test cases (though they still need review).

Most support cross-browser, native web and mobile, API, and accessibility testing. Many also offer reusable tests for established systems (such as Salesforce or SAP) and even pre-populated scripts for CI/CD integration.

Some work as wrappers around existing solutions like Selenium (e.g., Katalon Studio, Opkey), others run as SaaS in the cloud (e.g., Tricentis Testim, Launchable, ACCELQ Autopilot, Qodo, Mabl, testRigor), and still others as on-prem/IDE extensions (e.g., Diffblue Cover for Java).

All agentic solutions can both generate and execute tests, and most add ML optimization based on historical runs, regression testing informed by historical data, and even predictive analytics.
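As a rough illustration of optimization based on historical runs, the sketch below ranks regression tests by a recency-weighted failure rate so that likely failures run first. It is a simple heuristic standing in for the learned models commercial tools use; the run-history format, the `decay` parameter, and the `failure_scores`/`prioritize` functions are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical history format: one dict per CI run (oldest first),
# mapping test name -> whether it passed in that run.
Run = Dict[str, bool]


def failure_scores(runs: List[Run], decay: float = 0.8) -> Dict[str, float]:
    """Recency-weighted failure rate per test: recent runs count more."""
    fails: Dict[str, float] = defaultdict(float)
    totals: Dict[str, float] = defaultdict(float)
    for age, run in enumerate(reversed(runs)):  # age 0 = most recent run
        weight = decay ** age
        for test, passed in run.items():
            totals[test] += weight
            if not passed:
                fails[test] += weight
    return {test: fails[test] / totals[test] for test in totals}


def prioritize(tests: List[str], runs: List[Run]) -> List[str]:
    """Order tests so the ones most likely to fail run first."""
    scores = failure_scores(runs)
    # Tests with no history get the maximum score so new tests are never starved.
    return sorted(tests, key=lambda t: scores.get(t, 1.0), reverse=True)
```

Given three runs in which `a` failed in the oldest run, `c` in the middle run, and `b` in the most recent run, `prioritize(["a", "b", "c", "new"], runs)` returns `["new", "b", "c", "a"]`: the unseen test first, then the others ordered by how recently they failed.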

Self-healing capabilities automatically update test cases when they detect changes in the application, and some agent systems can even interact with other AIs. Anthropic’s Model Context Protocol (MCP), an open standard, is being widely used to let coding AIs and testing AIs communicate (for example, between Cursor and testers AI).
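Self-healing is easiest to picture at the locator level. The sketch below is deliberately simplified and hypothetical: the "page" is a plain list of attribute dictionaries rather than a real browser DOM, and the `SelfHealingLocator` class and its fallback order (id, then test id, then visible text) are assumptions, not how any particular tool named above works.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

Element = Dict[str, str]  # simplified stand-in for a DOM element's attributes


@dataclass
class SelfHealingLocator:
    """Hypothetical locator that remembers several ways to find one element."""

    element_id: str
    test_id: str
    text: str
    heal_log: List[str] = field(default_factory=list)  # changes for human review

    def find(self, page: List[Element]) -> Optional[Element]:
        # 1) Primary strategy: exact id match.
        for el in page:
            if el.get("id") == self.element_id:
                return el
        # 2) Fallbacks, in a fixed order: test id, then visible text.
        for attr, value in (("data-testid", self.test_id), ("text", self.text)):
            for el in page:
                if el.get(attr) == value:
                    self._heal(el, attr)
                    return el
        return None  # Nothing matched: surface a real failure rather than guess.

    def _heal(self, el: Element, matched_by: str) -> None:
        # Point the primary locator at the element's new id so the next run
        # finds it directly, and record the change so a person can review it.
        new_id = el.get("id")
        if new_id and new_id != self.element_id:
            self.heal_log.append(
                f"id {self.element_id!r} -> {new_id!r} (matched by {matched_by})"
            )
            self.element_id = new_id
```

Keeping a `heal_log` rather than silently rewriting the locator reflects the point made throughout this article: automated fixes still need a human-reviewable trail.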

The goal is to accelerate the feedback loop and shift testing left, or even, as many companies promise, to shorten (or perhaps improve) a company’s development cycle.

But it’s still early days for agent-to-agent communication: MCP was only introduced in November 2024.

Handling Agentic AI Testing Issues

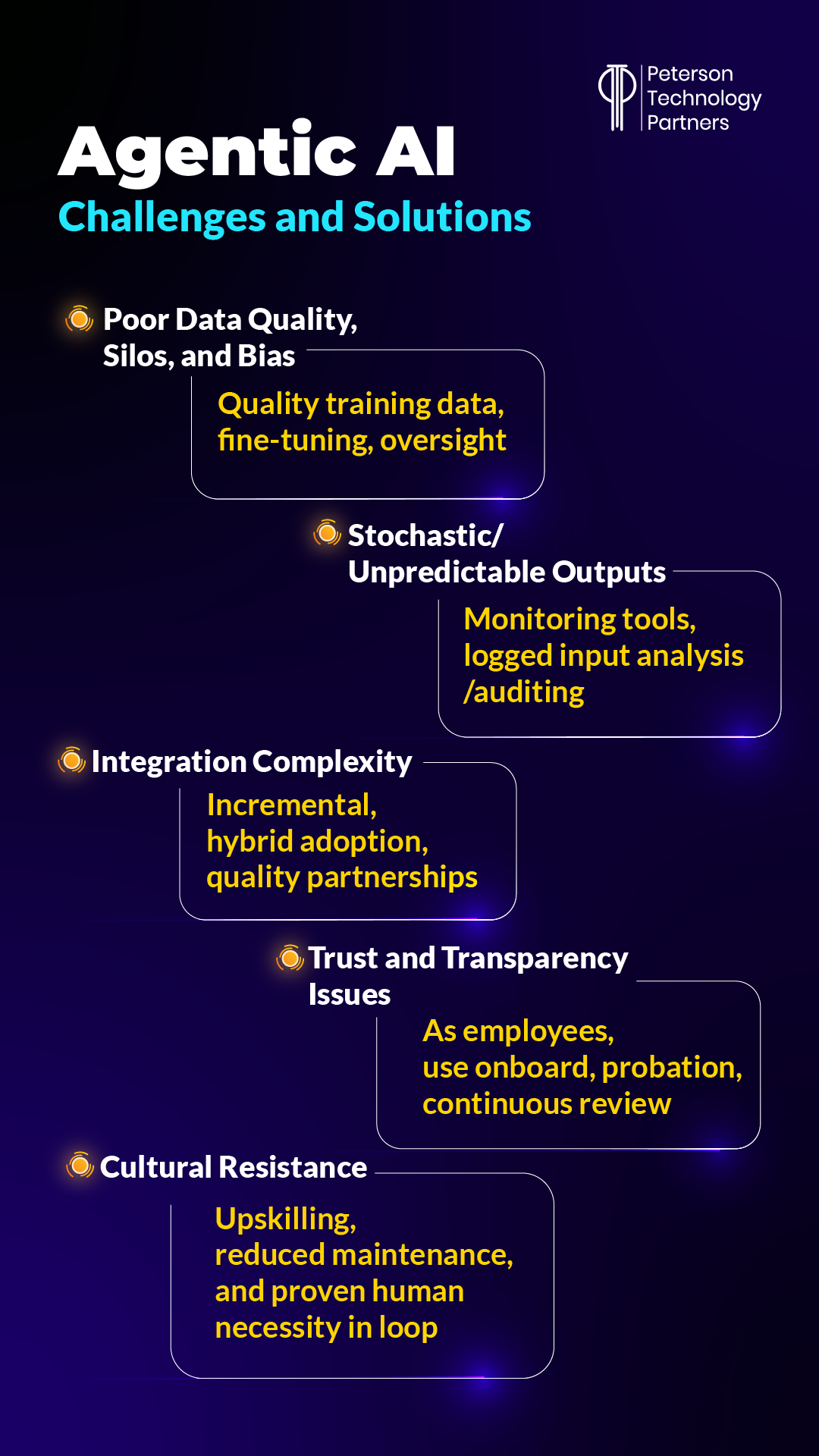

It’s early days for agentic AI software testing overall, too. As Szilárd Széll discussed with Richard Seidl on his podcast, the fact that AI doesn’t reliably repeat itself (the same input can produce different outputs) can be a significant challenge for testing, as can ensuring that a self-learning system is working from the correct context when it makes autonomous decisions.

The more documentation a company has for its AI to train on, the better. Logging all prompts, for example, lets companies not only audit what their AI systems are doing but also feed that record back into the AI for improved analysis.

But as Széll points out, demanding complete trust in AI may be an unfair standard, since we don’t expect any individual worker to be right all the time either.

Every new tester has to meet entry criteria: getting screened, onboarded, trained, closely observed, and likely put on a probationary period.

After that, performance evaluations and code reviews keep the evaluation ongoing. The same approach should apply to AI systems, especially given their stochastic nature.

AI-driven software test automation is already delivering benefits at scale, but like any AI implementation, it adds complexity and takes experience and training to capitalize on.

Practical AI-Powered QA Integration with PTP

PTP helps companies implement automated software testing, and we’ve also seen striking gains in the right situations. We’ve helped companies boost quality and cut both time and costs with agentic solutions.

Our recommended process is to:

- Begin with an Effective Readiness Assessment: Look at the state of your stack and data to find the areas where AI agents can provide acceleration or help your team meet testing demands.

- Build a Focused Agent Pilot and Iterate: Design a test agent for a specific module or product team first, then scale up, integrating with your SDLC, CI/CD pipelines, and reporting.

- Upskill Teams: Once testers have a chance to work with AI systems, they’ll quickly see where those systems flourish and where they need a closer hand. Being able to extend their reach and automate tasks they’ve struggled to get to can evolve their roles in a positive way.

- Establish Effective AI Governance and Monitoring: These are tools, and they need clear audit trails, continuous monitoring, and validation.

The future of agentic solutions is extremely exciting in QA, and there are already enormous benefits that can be gained from the right implementation.

Conclusion: AI-Enhanced Software Quality Is Possible Now

So much about what AI can accomplish is speculative, and there’s a lot of hype out there with so much on the line.

But AI agents are not ready to replace experienced QA personnel, especially given the volume of code increasingly being generated by AI copilots and by developers working on accelerated timelines.

What they can already do is extend your coverage, increase your efficiency, and help your teams accomplish more than they could without them.

These systems are more flexible than prior forms of automation and can learn from historical failures to predict future ones. And they only continue to become more reliable, cost-effective, and useful as they gain real-world experience.

The near future of QA with AI looks bright, and moving quickly may ultimately be a necessity just to keep up.

References

Microsoft Is Rolling Out AI Agents. Developers Are Finding Coding Errors, Barron’s

AI Agents & the Future of Testing (with Szilárd Széll), Richard Seidl, Testing Unleashed Podcast

AI Agents – The Future of Software Testing, Mukesh Otwani

FAQs

How is agentic AI software testing different from traditional automation?

Traditional automation executes predefined actions, typically against static inputs. It also requires extensive maintenance.

Agentic AI solutions can explore paths, update tests themselves or suggest updates, write new tests and adapt existing ones, and learn from historical data.

In other words, they don’t just run tests but plan, write, execute, maintain, and improve them, as well as provide improved reporting and analysis.

What are the main risks with adopting agentic AI testing solutions?

Unpredictability. This is both a great asset to AI and a big challenge for QA.

Poor data quality or readiness can also stifle a system’s benefits, and the implementation needs to be well planned and carefully scaled.

Humans must remain in the loop at each stage.

What should businesses do to explore agentic testing today?

You need to clearly understand the limitations in your current process to see where AI systems can immediately address pain points, while also being ready to scale into the future.

Depending on available resources, this can include piloting a SaaS tool in isolated, low-risk domains or exploring options with experienced partners. Oversight mechanisms for AI should be established from the start, since it’s less a plug-and-play tool than an evolving solution that will grow to encompass more when you are ready.