AI + QA. In theory, it’s a match made in heaven.

Especially in software, where coverage, analysis, and test maintenance are almost always constrained by intensive real-world demands. With its speed and ability to work over enormous datasets, AI in QA promises to increase the speed of testing, shift it left for earlier detection and even requirements improvement, maximize what gets tested, and break down current barriers.

Agentic AI testing, with its increasing chain of autonomy, looks to replicate user behavior at scale, improve bug detection, keep test suites updated, and build and maintain far more effective test data.

Is this possible today?

Yes and no. And there are other problems which complicate this dream, like the expanding use of AI-generated code at scale and the increasing demands on development teams to do more and much faster.

As software development capitalizes on ever more automation, the need for skilled QA professionals isn’t diminishing. To keep pace, QA needs agents, but the agents themselves need to be auditable, safe, and effectively governed.

In today’s PTP Report, we return to agentic AI software testing, looking at the risks and benefits, and considering the best ways to reap the rewards while minimizing additional exposure.

With more agents coming online and more AI-generated code every day, the time to get your hands around AI governance in QA is now.

Autonomous AI Systems: Risks and Rewards

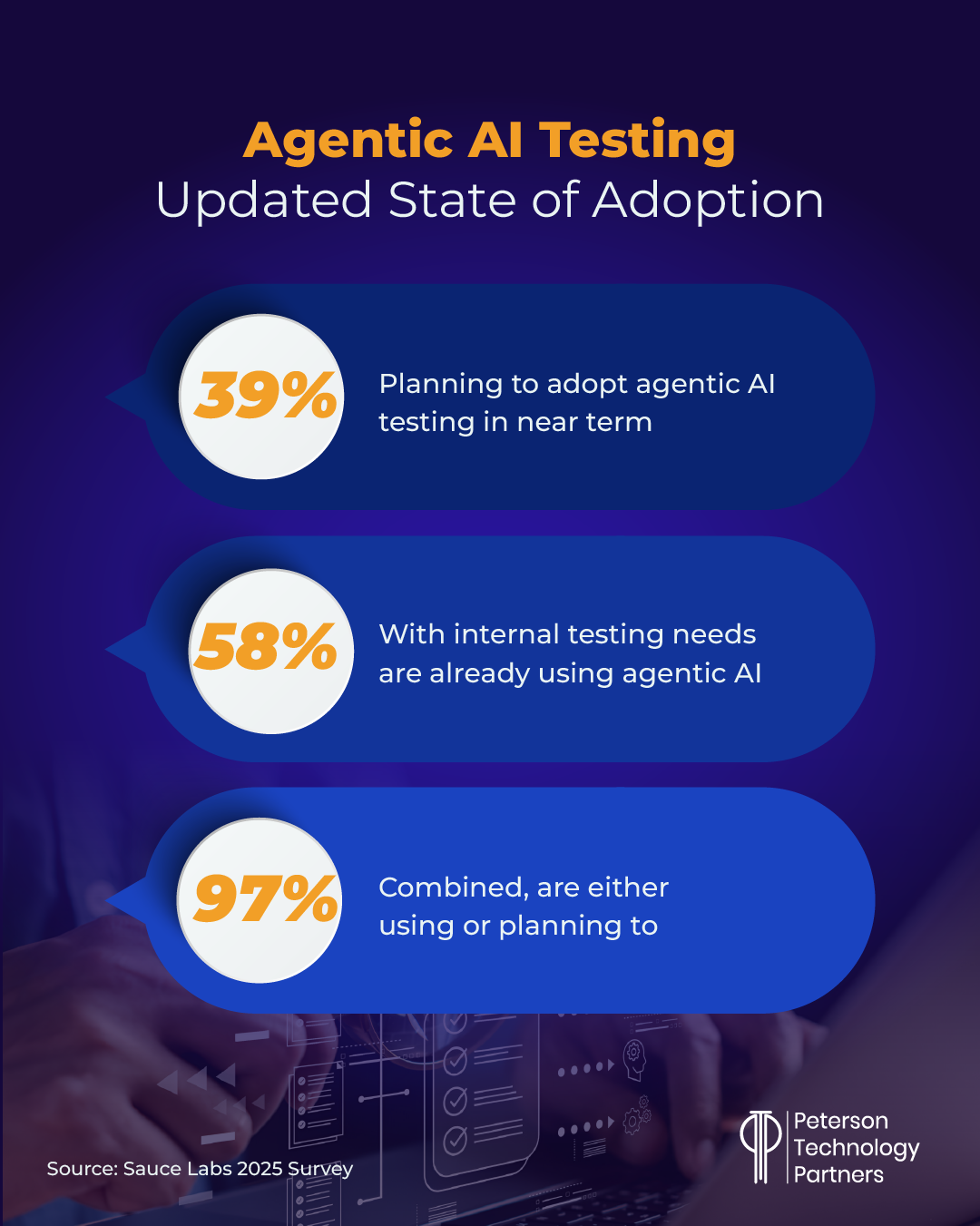

Overall, AI agents are taking off in a big way. Depending on your survey, sector, and source, you may see anything from 85% and up for the number of companies that are looking now to put them into (some kind of) action.

And with their capacity to string together tasks, one of the most obvious use cases is testing. In automating computer use, these systems can behave as customers, at scale. Testing also involves repetition, an area where agents should (theoretically) excel.

They can also run 24/7 and 365, meaning they can get to edge cases that a testing team may want to cover but just don’t have time for.

The future in this field is no doubt bright. Fusing AI and model-based testing, for example, shows promise with abstractions built in the requirements stage that get tuned to automation utilized throughout the process, from UML diagrams to end-to-end test coverage of those requirements.

But in the now, effective implementation requires strong AI policy guardrails, implementation experience, and practical oversight.

At PTP, we’ve helped Fortune 100 companies across sectors implement agentic AI software testing solutions, and we’ve seen them succeed consistently with current technologies. This means boosting code quality but also accelerating speed and root cause analysis.

But this requires sufficient readiness, effectively scaled implementation, and continuous governance that’s in control from the start.

When projects fail, they often overreach, struggle for consistency, drop the ball in key areas, or lack effective preparation, policy, containment, and auditability.

Generative AI systems, in themselves, are notoriously difficult (and expensive) to test at any depth. As former Apple and Borland testing manager and longtime consultant James Bach wrote in October for his Satisfice blog:

“Testing a GenAI product is a similar challenge to testing cybersecurity: you can’t ever know that you have tried all the things you should try, because there is no reliable map and no safe assumptions you can make about the nature of potential bugs. Testing GenAI is not like testing an app—instead it’s essentially platform testing. But unlike a conventional software platform the client app can’t easily or completely lock away irrelevant aspects of the platform on which it is built. Anything controlled by a prompt is not controlled at all, only sort of molded.”

Handling such variability requires controlling access carefully, limiting what AI can do and where successfully, and ensuring effective and functional human oversight.

Achieving Effective AI Audit Logging and Oversight

While it seems inevitable that AI agents will soon be policing other AI agents (Gartner calls these “guardian agents” and Salesforce “watchdog agents”), as of today, effective, practical agentic testing means having a good plan coupled with strong controls and pragmatic oversight and reporting capabilities.

Governed Gateways in AI Testing Implementations

MCP (Model Control Protocol) is a framework that standardizes how applications are exposed to AI agents. It can also give you a single auditable point for who can call what, how often, and where results flow.

MCP servers can be used today to stand between AI agents and testing tools, allowing companies to alter their AI models independent of current testing tools.

Tricentis is an example of a company implementing remote MCP servers at enterprise scale to standardize interface, control complications, and improve oversight.

AI Sandboxing Techniques

We covered the horror story of an AI agent wiping out a production database in our article on some of the benefits and challenges of vibe coding. And while that data was swiftly recovered and the episode resulted in numerous improvements to Replit, it also caused many hearts to skip a beat. It was an AI agent running in development, after all.

Sandboxing means isolating your environment to deny internet access by default, for example, constrain downloads, and generally fence a browser’s network. Needed tokens should be very short-lived, and all data access should reach only synthetic or masked data.

For code, Google Cloud, for example, offers AI sandboxes in the Vertex AI Agent Engine (code execution in preview). These allow AI-generated code to run safely while minimizing security risks (and overhead).

But in general, a good sandbox treats agent behavior as malicious by default and includes the capability to replay and explain every step that’s been taken. With such protections in place, an agent can safely move and access the tools it needs without the risk of overstepping its bounds.

Your Most Brilliant Intern—Managing Identities, Secrets, and Approvals

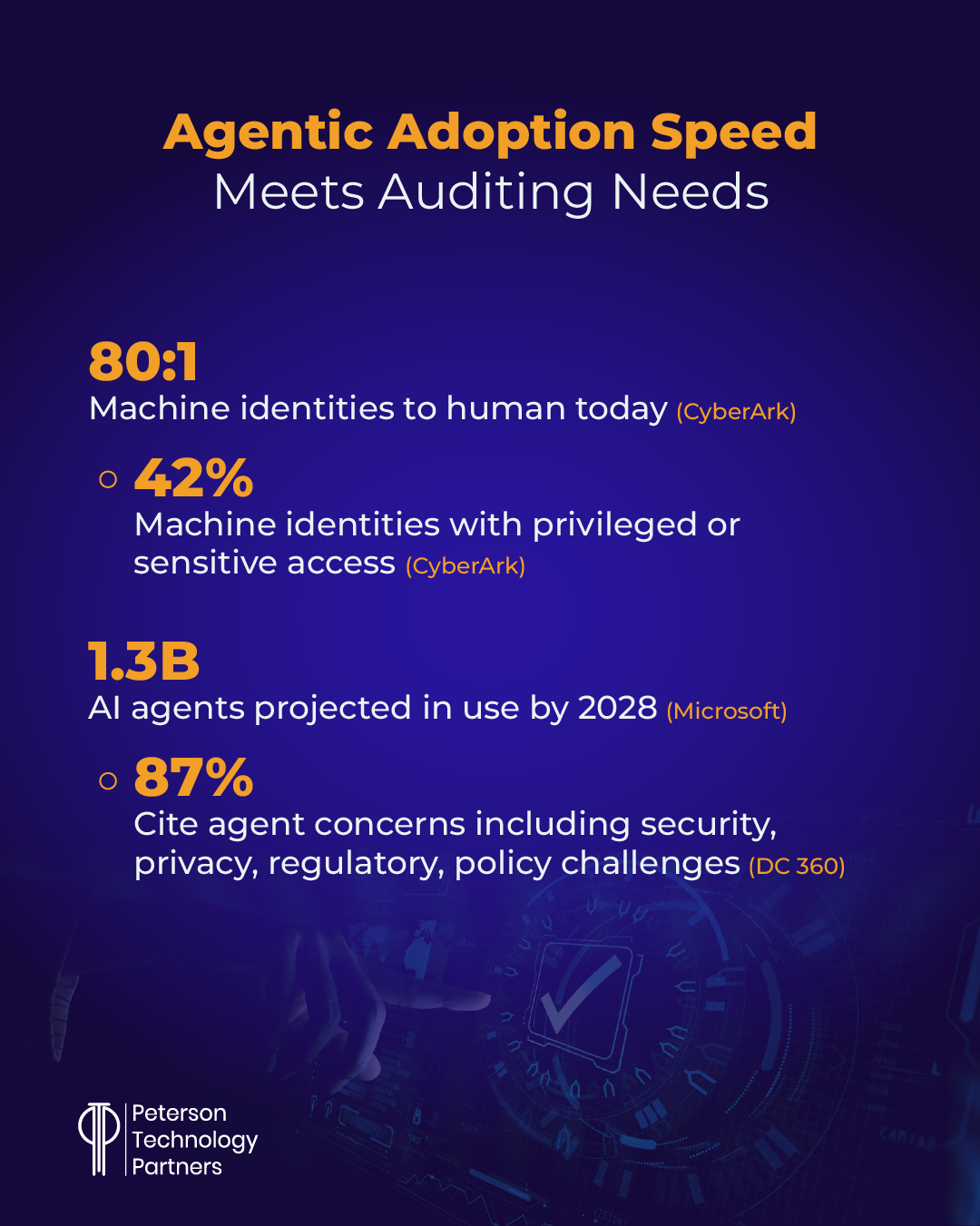

Already today machine identities far outnumber human ones. And with more agents coming online every day, this disparity, and complexity that accompanies it, is only growing.

Each of these identities needs control and monitoring, whether it’s an employee, a machine itself, or an AI agent.

As Haider Pasha, EMEA CISO at Palo Alto Networks, told IT Pro’s Rory Bathgate:

“That’s the simplest way I’m explaining it to my peers, is I’m saying ‘Look, treat it like an intern. What level of privileges would you give an intern? How do you secure the identity, the access, the device that’s being used, the workload it can access, the tools it can call?’”

In other words, machine identities should be short-lived, narrow in scope, and rotated regularly.

Maintaining different identities for exploration, execution, and reporting helps ensure least privilege and limit cases of untended agents doing things they shouldn’t be able to do.

Merging AI and Centers of Excellence (CoE)

Ultimately, much of this ties back to your humans-in-the-loop.

At PTP, our most popular AI testing governance framework is the Test Automation Center of Excellence (CoE). This cross-functional stakeholder team works to define standards, implement training, maintain repositories, and ultimately ensure quality.

It begins by sufficiently defining testing automation best practices across teams beforehand to ensure effective, ongoing governance and compliance. It also serves as a mechanism to centralize reusable assets and ensure continuous improvement.

One of the greatest areas of utility for AI agents in testing is test case generation from natural language or even requirements documentation. These systems can automate the updating of test cases and test data, and the CoE in turn optimizes this process.

It enables CI/CD pipeline integration, alignment with business requirements, and the automation of most of what is required in maintaining testing repositories.

For more on this, you can check out our prior PTP Report detailing an example AI testing automation implementation.

Conclusion: Mastering Autonomy Includes Effective Audit

Ensuring AI governance and safety in testing begins with an effective plan and continues with ensuring auditable AI systems are in place. And ultimately, it’s only of value when the appropriate checks can be reasonably maintained.

AI source models are exceedingly difficult to test, and remain vulnerable to attack, putting the impetus on implementation, your software layer, and the humans presiding over the tools to ensure you reap continued gains without sacrificing safety.

But the benefits can already be significant—expanded reach, improved visibility, time and money savings, and even higher code quality.

At a time when AI coding solutions are making development work more complicated while spinning out faster and easier, if not better, code, software testing—and QA overall—is arguably more important than ever.

References

Agentic AI poses major challenge for security professionals, says Palo Alto Networks’ EMEA CISO, IT Pro

Machine Identities Outnumber Humans by More Than 80 to 1: New Report Exposes the Exponential Threats of Fragmented Identity Security, CyberArk

Seriously Testing LLMs, Satisfice

3 Ways to Responsibly Manage Multi-Agent Systems, The 360 Blog

Model Generation with LLMs: From Requirements to UML Sequence Diagrams, arXiv:2404.06371 [cs. SE]

FAQs

How is agentic AI testing different from other forms of test automation?

In short, it acts with some autonomy and makes use of tools. This means the agent can undertake multiple steps and adjust on the fly, including searching modified UIs, for example, for elements. Agents can also generate test cases from natural language descriptions, and update tests based on past runs and shifting requirements. But this autonomy can also inject greater risk that must be accounted for.

How can companies ensure no data leakage or privacy issues occur with agents in testing?

By default, agents should not have access to data. For data that is needed, synthetic or de-identified data should be used. Luckily, creating effective test data is another great use of AI in testing.

What roles are typically needed to staff a Center of Excellence (CoE)?

In general, it should include key stakeholders needed for automation governance, execution, and optimization. This often includes:

- A CoE lead tasked with overseeing the strategy and roadmap

- Test automation architects to define the framework standards and guardrails

- Test engineers who will develop and oversee automation scripts

- DevOps engineers for CI/CD pipeline integration

- Business analysts to align with requirements and test the strategy

- Training leads to ensure skills development and conduct needed workshops