AI developer tools are big business. The market is currently worth more than $2 billion (Mordor Intelligence puts it at $7.3 billion), with several AI coding startups hitting $100 million in annual revenue in record time as all the major AI companies continue ramping up their own versions.

These tools are also already widely used by developers, no matter how you track the data.

Author and Co-Founder of Filtered Marc Zao-Sanders has been researching the most popular AI use cases over the past few years, and his 2025 results show the surge in the professional coding AI use case over the past year alone (from 47th in 2024 to fifth in 2025 among all AI use cases, personal and professional). By his measure, it’s the most popular way people are currently using AI at work.

(You can check out his study in long form on his site, as a Harvard Business Review article, or read coverage by PTP Founder and CEO Nick Shah in Substack from last month, which also profiles top AI use cases at work.)

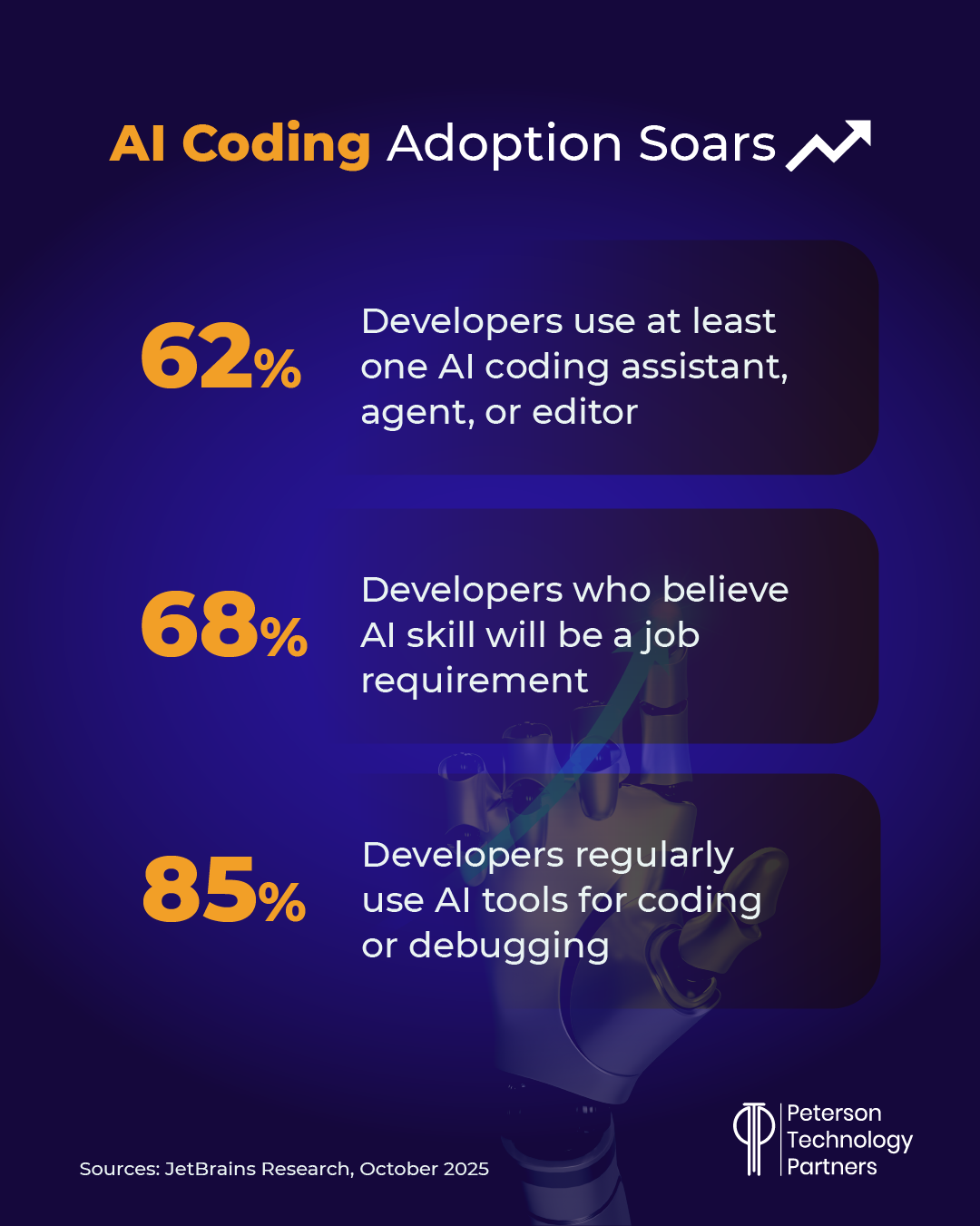

Across surveys and studies from AI hypers and AI doomers alike, 80%+ is a common number given for developers using AI-powered coding assistance of some kind. Here are numbers from a recent JetBrains survey:

All of this combines to make the focus of this article—AI resistance at work, and especially among developers—look nonexistent.

After all, it’s widely in use, use is growing, and while efficiency numbers vary wildly based on who you ask and how they’re using it, people like Perplexity’s CEO Aravind Srinivas have said (during a conversation at the AI Startup School in San Francisco) the “level of change is incredible” and the “speed at which we can fix bugs and ship to production is crazy.”

But this doesn’t tell the whole story. Because while use may be climbing, often by mandate, many measures of trust have been declining.

Top-Down: Mandating AI in Software Development

Perplexity’s Srinivas described cases where engineers have cut discovery time on new work in machine learning from three or four days to “literally one hour,” and said their biggest area of gain overall is currently on front-end work, where they take advantage of the ability to upload marked-up screencaps, for example, to change UI features.

And overall, Perplexity has made using tools like Cursor and GitHub Copilot mandatory. In this, they’re not alone.

In August, Coinbase’s CEO Brian Armstrong told John Collison on his Cheeky Pint podcast that he immediately fired developers who hadn’t at least onboarded AI coding tools (and didn’t have what he called a good excuse) a week after the company issued a similar mandate.

While he admits that it was a “heavy-handed approach” that wasn’t popular with everyone, he’s stood by the message it sent that AI use at his company isn’t optional.

“Like a lot of companies, we’re leaning as hard as we can into AI,” Armstrong said. “We made a big push to get every engineer on Cursor and Copilot.”

Now 40% of Coinbase’s new code is reportedly written by AI, with the goal of getting it to 50% this month.

In March, Anthropic CEO Dario Amodei said we were only six months away from a time when AI would be writing 90% of code, and in April, Google CEO Sundar Pichai added that well more than 30% of all new code at Google was already AI generated. Microsoft’s Satya Nadella put their own percentage around 20–30%, while Meta’s CEO Mark Zuckerberg said that on one of their projects, half the development work over the next year would be entirely done by AI.

Disconnect: A Leadership vs Developer AI Gap

In an interview this month with NPR’s Huo Jingnan, the head of Anthropic’s Claude Code unit Boris Cherny said that at Anthropic, “most code is written by Claude Code” though he admitted they need a better measure to know the exact percentage.

He also added a key point which is often at the heart of this discussion: “Every line of code should be reviewed by an engineer.”

As mentioned above, AI efficiency stats for coding are extremely varied and in many cases very positive, but the nonprofit METR (Model Evaluation & Threat Research) group found in early 2025 that developers using AI tools actually took 19% longer than those without in randomized testing, despite the developers themselves believing the tools sped them up (by a perceived 20%).
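To make the size of that perception gap concrete, here is a small worked calculation. It is only a sketch: the 60-minute baseline task is hypothetical, and it assumes the perceived 20% speedup means developers believed tasks took 20% less time, while the measured result means tasks took 19% more time.

```python
def completion_times(baseline_minutes: float,
                     measured_slowdown: float = 0.19,
                     perceived_speedup: float = 0.20):
    """Return (measured, perceived) minutes for a task of the given baseline length.

    Assumption: "19% longer" scales the baseline up by 1.19, and a
    "perceived 20%" speedup scales it down by 0.80.
    """
    measured = baseline_minutes * (1 + measured_slowdown)
    perceived = baseline_minutes * (1 - perceived_speedup)
    return measured, perceived


# Hypothetical 60-minute task, chosen only for illustration.
measured, perceived = completion_times(60)
gap = measured / perceived
print(f"measured: {measured:.1f} min, perceived: {perceived:.1f} min, ratio: {gap:.2f}")
```

Under these assumptions, a task that developers felt took about 48 minutes actually took about 71, so the measured time is roughly 1.5 times the perceived time.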

Similar stats abound, depending on the tasks, method of implementation, and mode of AI assistance. And overall, with wide adoption, varying tools and methods of working, consensus is hard to come by.

Still, among many developers there’s a growing sense that while AI is an incredible booster for many tasks (especially where accuracy, efficiency, and security are not at a premium), it is not the uniform leveler their leaders expect. Also, AI-generated code can be extremely messy, agents can get wildly off track, and for some use cases, using the tools proves more trouble than it’s worth.

But with pressure from the top to not only make use of AI tools but also provide numbers showing increased efficiency, one Amazon engineer said of AI use at the company:

“It is very much a solution in search of problems much of the time.”

And with Amazon very firmly announcing that AI will be changing the way they work earlier this year—and just today releasing news via their blog that they are eliminating some 14,000 corporate jobs while continuing to hire in “key strategic areas while also finding additional places we can remove layers, increase ownership, and realize efficiency gains”—the stakes for developers no doubt feel quite high.

AI Resistance in the Workplace

Axios in October profiled The AI resisters, showcasing that while nearly all college-aged adults have tried AI, a sizeable section of the US workforce appears to be actively dragging its feet on AI.

In addition to an unknown number of developers fired at companies like Coinbase (and Jit, see below), The Information reported in October on tensions coming to a head at companies like Mixus and Ramp, where veteran developers have pushed back against mandates on Cursor use, leading to a standoff between leadership and their workforce.

Fighting AI Coding Tools Adoption, or Just Struggling for Consistency?

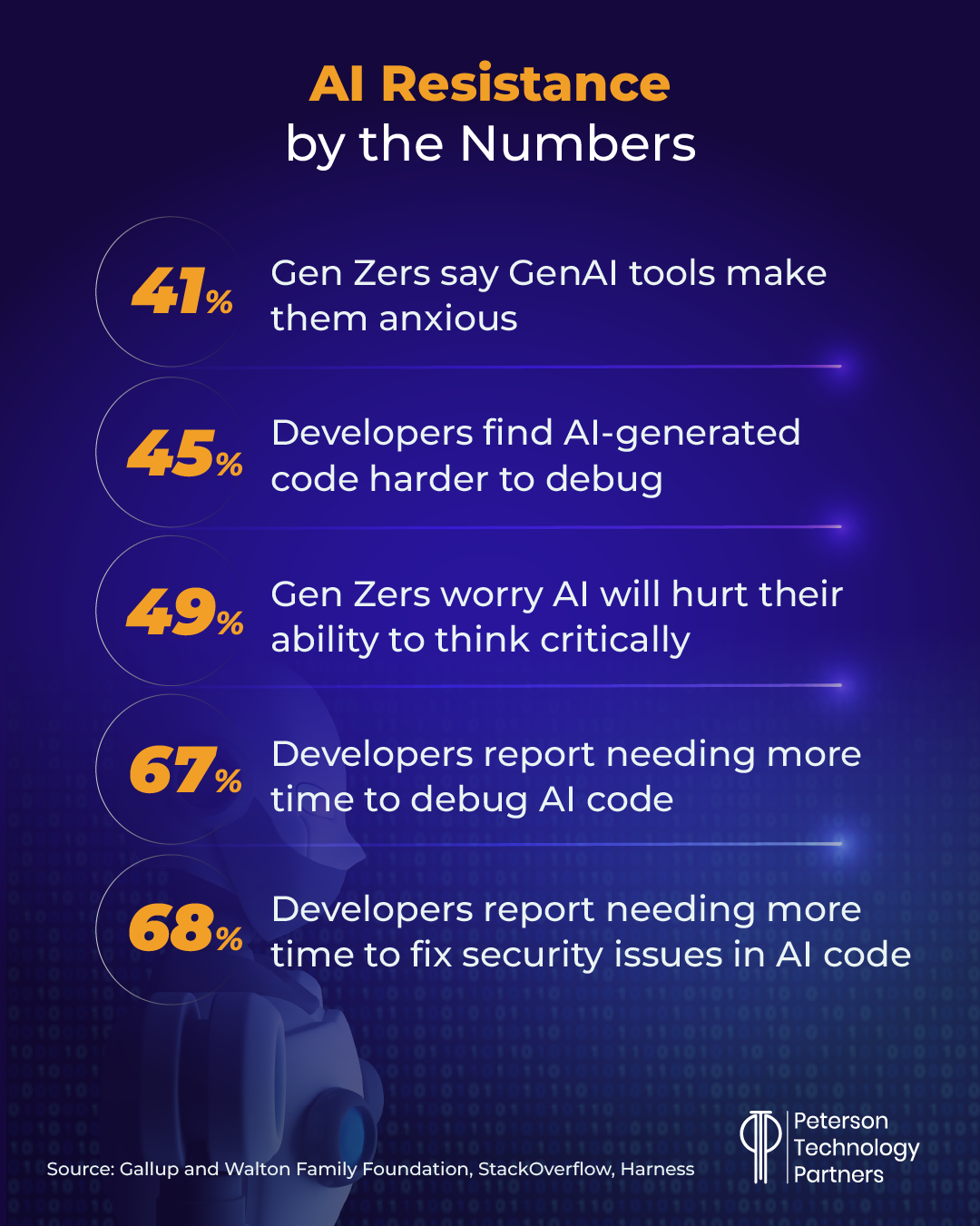

Stack Overflow’s 2025 Developer Survey supports the sense that, while use of AI for coding continues to climb, trust does not. Only 4.4% of developers found AI did very well at handling complex tasks, and only 3.1% highly trust its accuracy. (Our leading stat is also from this survey, with 45% of developers distrusting AI tools overall.)

At the same time, 22.9% called using AI tools in their workflow very favorable, with another 36.8% calling it favorable (or 59.7% combined positive about the addition, with another 17.6% indifferent).

All this is to say that while leadership mandates might be irksome or hard to act on, and AI tools may deliver less benefit than promised (and at their worst may even make things harder), they can still be a net positive, and there may not exactly be a coder revolution brewing.

Looking Honestly at the Challenges of AI Implementation

We’ve written before on some of the security issues that arise in purely AI-generated code.

Hallucinations are a common problem, as are missing access controls, unprotected or unsanitized inputs, poor overall efficiency, and hardcoded secrets, keys, and even passwords.
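As a minimal illustration of two of these issues (hardcoded secrets and unsanitized inputs), the sketch below shows the safer pattern a reviewer would want to see in place of the AI-generated shortcut. The environment variable name `EXAMPLE_API_KEY` and the `users` table are hypothetical, chosen only for this example.

```python
import os
import sqlite3


def get_api_key() -> str:
    """Read the key from the environment instead of hardcoding it in source."""
    key = os.environ.get("EXAMPLE_API_KEY")  # hypothetical variable name
    if not key:
        raise RuntimeError("EXAMPLE_API_KEY is not set")
    return key


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("alice",))
    return conn


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query.

    The ? placeholder makes sqlite3 bind the value as data, so user input
    is never spliced into the SQL text.
    """
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchone()
```

With this pattern, a classic injection string such as `alice' OR '1'='1` is treated as a literal username and simply matches no rows, rather than changing the meaning of the query.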

In our own experience, we’ve seen solutions (especially on finite, clearly defined asks) be whipped up with incredible speed and effectiveness, while other tasks spiral into repeated, failing results, which the LLMs can then struggle to pull out of.

Perplexity’s Aravind Srinivas, in the same conversation referenced above, pointed out that for front-end work, what AI can do is truly incredible. But he also pointed out that while he’s a big fan of the tools, it’s true that they are introducing many new bugs, and that many developers struggle more to find these. More code, faster, but buggier seems to be the consensus.

He said that while he’s “really positive that this is the right future,” he also stressed that “there are issues right now” still being resolved.

TechCrunch reported on OpenAI’s own code base in July, with a former employee saying in a blog post that their central repository (“the back-end monolith”) is “a bit of a dumping ground,” with things breaking regularly or taking too long to run.

But he explained this also comes from the wide variety of coding experience at play in their developers, and that top engineering managers are working on improvements currently.

Achieving Better Human-AI Collaboration in Coding

British programmer, researcher, and co-creator of the Django Web framework Simon Willison previewed OpenAI’s latest model and told Huo Jingnan that he believes AI may actually succeed at writing a high percentage of code, but that this doesn’t mean there will ultimately need to be fewer developers.

“Our job is not to type code into a computer. Our job is to deliver systems that solve problems.”

And at this stage, AI isn’t capable of solving such problems on its own. Still, Willison added that he was “comfortable saying” experienced programmers are likely capable of getting productivity boosts of two to five times on certain tasks using AI. The key is identifying the right tasks while maintaining effective development practices overall.

On his blog, Willison recommends the following for setting up a codebase to be more productive with AI:

- Use AI for good automated testing. He uses pytest and one of his projects has 1500 tests. He finds Claude Code effective at selectively running tests relevant to his changes and then running the whole test suite after.

- Let AI agents interactively test the code they are writing, too (via Playwright or curl).

- Track work in GitHub Issues and paste issue URLs right into Claude Code for resolution.

- LLMs can read code much faster than we can to figure out use, which means that, while documentation remains essential for us, it may not be that useful for coding agents. (But they’re also good at updating documentation for us.)

- Detailed error messages are very useful for LLMs in debugging, so collecting more data in error messages may be a low-cost way of accelerating the entire process.
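The testing and error-message points in the list above can be sketched together in a few lines. This is an illustrative example rather than anything from Willison's own projects: `parse_price` and its parameters are hypothetical, and the tests are plain functions with `assert` statements that pytest would collect by their `test_` prefix.

```python
from typing import Optional


def parse_price(raw: str, *, field: str = "price", row: Optional[int] = None) -> float:
    """Parse a price string, failing with a context-rich message.

    The error names the field, the row, and the offending value, which gives
    a human or a coding agent enough detail to locate the bad input quickly.
    """
    try:
        value = float(raw)
    except ValueError as exc:
        raise ValueError(
            f"could not parse {field!r} on row {row}: got {raw!r}, "
            "expected a decimal number like '19.99'"
        ) from exc
    if value < 0:
        raise ValueError(f"{field!r} on row {row} must be non-negative, got {value}")
    return value


def test_parse_price_accepts_decimal():
    assert parse_price("19.99") == 19.99


def test_parse_price_reports_context():
    try:
        parse_price("abc", row=7)
    except ValueError as err:
        # The message should carry both the row number and the raw value.
        assert "row 7" in str(err)
        assert "'abc'" in str(err)
    else:
        raise AssertionError("expected ValueError for non-numeric input")
```

Saved in a test file, these could be run selectively with pytest's `-k` option (for example, `pytest -k parse_price`) before running the full suite, which mirrors the select-then-run-everything workflow described above.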

Implementing agentic AI software testing overall is something that PTP has a lot of experience with, and we’ve also found this to be a great area for reaping many of the benefits of agentic AI while minimizing current risks.

But overall, Willison dislikes the approach of thinking of yourself as a manager or editor of AI coders. Instead, he prefers thinking of himself as a chief surgeon on a medical team.

He recommends letting the AI handle prep, secondary tasks, and administration, while the developer does the surgery itself.

There are a lot of tasks that AI agents are good enough to help with. For example:

- Before starting a big task, have the AI write a guide to relevant areas of the codebase.

- Let the AI take an attempt at a big change that you won’t end up using but can review as a sketch of where to go.

- Let it fix TypeScript errors or bugs on its own.

- Have LLMs write the documentation about what is being built.

Overcoming AI Skepticism at Jit

As another example of this struggle in action, we look at the tech company Jit. It had to overcome its own majority pushback when leadership attempted to move their development team from the JetBrains IDE to Cursor starting at the end of last year.

Their VP of Engineering Daniel Koch told LeadDev’s Chantal Kapani that the move wasn’t being made just for the hype around AI in software engineering. Instead it was focused on removing the persistent manual bottlenecks their developers were having.

They also found success with offloading context-switching tasks like committing code, creating pull requests, addressing comments, internal documentation, creating tickets, and more.

As in the Willison example above, their goal was to move their developers toward acting more like surgeons and less like do-everything generalists.

And initially, at the end of 2024, they faced extreme resistance, with only three out of 25 engineers willing to use the AI agent. The more experienced developers disliked the code created, finding it like “someone who just joined the team and didn’t understand” their standards or conventions.

By March, still struggling with adoption, they ran vibe coding workshops focused on Cursor rules—specifically structuring instructions for when and how the AI could offer suggestions or apply automation.

Koch believes this was critical, saying: “It promotes safe experimentation and progressive rollout, reducing fear of unwanted or incorrect AI-driven changes.”

These rules became part of their operating model, and they dedicated a Slack channel for ongoing learning and discussion.

With the rules in place and developers on board, they started getting much better results with the AI agents, too, as they adapted.

And while not everyone remained with the team and it took some time, Koch said that in months, the team saw 1.8–3 times productivity gains from start to finish in delivering new features. Overall, they’ve been able to shorten development time, delivering up to three times faster in some cases.

Koch stressed that leadership is critical in improving AI adoption in teams and that leaders must also use the tools themselves and can’t expect to just delegate these changes.

Conclusion: Developer Trust in AI Goes beyond Hallucinations

While the rewards can be significant, implementing AI in workflows is rarely as seamless or easy as expected. And the challenges often go beyond debugging AI-generated code or redesigning workflows.

For workers around the world and across sectors, AI and job displacement fears also go hand-in-hand. And with regular (if isolated) stories about coders getting fired for refusing to adopt AI solutions as increasingly effective coding solutions are rolled out, it’s easy to see why.

But anyone who’s used these AI tools much can see that while they undoubtedly augment the development process, they’re also nowhere near replacing experienced software developers overall.

The real trick is getting everyone on the same page as quickly and effectively as possible.

References

How People Are Really Using Gen AI in 2025, Harvard Business Review

Perplexity’s engineers use 2 AI coding tools, and they’ve cut development time from days to hours, Business Insider

Aravind Srinivas: Perplexity’s Race to Build Agentic Search, Y Combinator

Coinbase’s CEO Fired Software Engineers Who Didn’t Adopt AI Tools: ‘We’re Leaning as Hard as We Can Into AI’, Entrepreneur

The AI resisters, Axios

Tech CEOs say the era of ‘code by AI’ is here. Some software engineers are skeptical, NPR

Recent News, Simon Willison’s Weblog

A former OpenAI engineer describes what it’s really like to work there, TechCrunch

How Jit overcame developer resistance to shift to Cursor, LeadDev

FAQs

What is driving software developer resistance to AI coding tools?

Well, for starters, use of AI tools among the developer workforce globally is quite high, with the vast majority already using them. That said, many do report concerns over job security, the stability of the developer pipeline, inflated expectations by leadership on what AI can actually do, and added difficulties in debugging AI-generated code, as examples.

Do top-down mandates on AI coding actually work?

There is certainly evidence that they accelerate adoption, both in the number of developers using the tools and in the speed at which conversion happens. That said, it can come at a cost to company culture and morale, and in rare cases it has led to standoffs that resulted in terminations or departures.

What are ways to get the most from AI in coding with the least risk and the lowest workforce pushback?

We give several recommendations in the article above, but one consistently effective use of agentic AI can be found in software testing. AI systems are good at running the right tests on demand and can also execute entire suites automatically when changes are made. They’re also being used to generate test cases and synthetic test data, and they can be effective in helping maintain test suites. For more on this, you can check out one of our prior PTP Report articles on agentic AI software testing.