Top AI News Headlines

- Microsoft launches agent tracking tools under Agent 365 platform

- Anthropic’s Claude Code hits $1 billion in run-rate revenue just six months after release

- OpenAI faces seven lawsuits for harmful delusions and encouraging suicide

- Investor Michael Burry calls AI boom a “glorious folly”

- Google and the Bezos-launched Blue Origin are serious about AI data centers in space

ChatGPT turned three at the very end of November. It has more than 800 million weekly users (about 10% of all the world’s adults) and in July was processing more than 2.5 billion messages a day (29,000 queries per second).

That’s a growth of 700% since November 2023. Not bad for an app that launched as a “low-key research preview” that OpenAI staff were instructed not to even present as a product launch. And despite concerns that it was not yet ready for primetime, it had more than one million users in just five days and has since grown faster than any consumer app in history.
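The per-second figure quoted above follows directly from the daily total. As a quick sanity check (a minimal sketch, assuming traffic is spread evenly across the day, which real usage is not):

```python
# Convert the reported daily message volume into an average per-second rate.
# Assumption: messages are spread evenly over 24 hours (real traffic has peaks).
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

messages_per_day = 2_500_000_000  # "more than 2.5 billion messages a day"
messages_per_second = messages_per_day / SECONDS_PER_DAY

print(f"{messages_per_second:,.0f} messages per second")
```

This lands just under the rounded 29,000 quoted above.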

ChatGPT’s birthday kicks off our AI news roundup for November. It was a month of product releases (what else is new?), escalating bubble talk, layoffs (also familiar), increasing focus on science and research use cases, and continued progress with agents and data center tech.

But first, Google and Anthropic both demonstrated AI use in cybercrime in new and alarming ways. Google’s Threat Intelligence Group updated earlier reports to detail malware that relies on LLMs during execution to evade detection, dynamically generate malicious scripts, and create functions on demand (vs hard coding).

You can read more about the five forms they have observed in operation (including credential stealers, data miners, and ransomware) in their post.

Anthropic detailed in November how they’d detected the first large-scale, fully agentic cyberattack (likely sponsored by the Chinese state). The attackers used Claude Code (see below) to target large tech companies, financial institutions, chemical manufacturers, and government agencies, and Anthropic believes AI handled 80–90% of the work (with humans needed for just four to six critical decisions per campaign).

AI planned multi-step operations, wrote and tested code, harvested credentials, exfiltrated data, and documented it all for future use.

Innovations and Emerging AI Trends for November 2025

For more positive news, we move to AI scientific research breakthroughs.

Scientific Discovery: The US Genesis Mission to Speed AI

There’s been a lot of talk about the data center infrastructure investment exceeding all prior initiatives, like the US highway system or the Manhattan Project.

Another historical endeavor was referenced in late November by Michael Kratsios, the science advisor to the president, when he told reporters on a conference call (as reported by CBS) that their newest initiative, called the Genesis Mission, will be “the largest marshaling of federal scientific resources since the Apollo program.”

Created by an executive order signed by President Trump, Genesis directs the Department of Energy and its national laboratories, in partnerships with the private sector and universities, to build the American Science and Security Platform. This will combine the nation’s scientific datasets, AI models, and supercomputing resources for the purpose of rapidly enhancing AI capabilities for research.

Kratsios noted that the mission’s goal is to harness AI to shorten discovery timelines “from years to days or even hours.”

AlphaFold’s John Jumper Updates Us on Its Future

Back in 2017, newly minted PhD John Jumper heard Google DeepMind was moving from mastering games to predicting the structure of proteins. He applied for a job, and three years later he co-led the creation of AlphaFold 2 with CEO Demis Hassabis.

It stunned the world by predicting the structures of proteins in just hours, and to a level of detail that used to take months.

In 2024, they shared a Nobel Prize for it, and through multiple iterations the project has now predicted the structures of almost every protein known to science—some 200 million.

In an interview with the MIT Technology Review’s Will Douglas Heaven, Jumper attributed much of their success to fast feedback loops.

“We got a system that would give wrong answers at incredible speed. That made it easy to start becoming very adventurous with the ideas you try.”

AlphaFold has seen wide adoption, in ways that have surprised even him. One example is at UCSF, where researchers use multiple versions daily, finding it incredibly useful at augmenting their research.

But like chatbots, they say, “it will bullshit you with the same confidence as it would give a true answer,” making validation critical.

For Jumper, the work has moved on to fusing the deep but narrow capacities of AlphaFold with LLMs that can bring broader scientific knowledge and reasoning to bear.

Superintelligence Is Back in Style

But did it ever go out?

While Meta’s AI team has been renamed for it, and some big-name petitioners made news last month by asking to stop its development, AI superintelligence only got more popular in Silicon Valley in November.

Microsoft AI CEO Mustafa Suleyman announced the creation of their own MAI Superintelligence team, writing in a blog post that:

“This year it feels like everyone in AI is talking about the dawn of superintelligence. Such a system will have an open-ended ability of ‘learning to learn’, the ultimate meta skill.”

But how we get there remains an open question.

As reported in Nature, the vast majority of members of the Association for the Advancement of Artificial Intelligence (AAAI) agree that neural networks alone are not enough. One popular method is combining an older type (symbolic AI, pairing formal rules and the encoding of logical relationships) with neural networks, in what some are calling neurosymbolic AI. Google DeepMind’s AlphaGeometry is one such system.

We covered the surge of interest in world models in a prior roundup, and Amazon’s Jeff Bezos is the latest to join this crowd with a startup called Project Prometheus. The field is already a focus for AI pioneers like Fei-Fei Li, Yann LeCun (post-Meta), Google, and Meta.

Benjamin Riley, Founder of Cognitive Resonance, hit on the issue with pure LLMs in a November essay for The Verge. Thinking, he argues, is largely independent of language, even if we do use language in thought. He backs this up with decades of research showing that taking away language doesn’t take away thought. (This is a view also shared by LeCun.)

And some, like James Landay, Co-Founder and Co-Director of Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI), believe the superintelligence push is also driven by hype, or the need to keep selling new goals.

As Landay told Yahoo Finance’s Daniel Howley:

“I don’t think it’s actually supported at all by what AI can do right now, or what scientific research would lead you to believe AI can do in two years, five years, or 10 years.”

Concerning AI Agents

Google offered an intensive, five-day AI agent training course via Kaggle in November, dropping documents galore and covering topics like what agents really are, MCP and interoperability, context engineering and memory, ensuring quality, and real-world deployment.

They shared their view of agents not as smart chatbots but as complete software systems with reasoning, tool-use capability, and memory.

The training also covers Agent Ops, an operational discipline that combines MLOps and DevOps practices. Its purpose is to handle the challenges of building, launching, and governing AI agents effectively.

But what about when agents go wrong?

For managing your fleet, Microsoft launched agent tracking tools under the Agent 365 platform (for early access in November). This extends tools used to manage people and devices to AI agents, in anticipation of companies deploying multiple agents for every worker.

Cloud and data security firm Rubrik is working on an “undo button” for agents in a bid to shore up AI resilience and resolve trust issues as enterprises get increasingly agentic. This will enable the reversal of specific data changes made by a given agent without having to entirely revert to a prior backup.

Because what counts as agentic failure is still a grey area, the tool flags signals such as deviations from an agent’s normal behavior, with the goal of giving administrators a dashboard for single-click rollback.
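To make the idea of a per-agent undo concrete, here is a minimal sketch of a change log that records who wrote what and can reverse one agent’s writes without restoring a full backup. This is an illustration only, not Rubrik’s design: `AuditedStore`, `Change`, and the agent names are hypothetical, and the sketch assumes agents write to disjoint keys (it does not resolve cases where another agent later touched the same record).

```python
from dataclasses import dataclass, field
from typing import Any

_MISSING = object()  # sentinel: the key did not exist before the write


@dataclass
class Change:
    agent_id: str
    key: str
    before: Any  # value prior to the write, or _MISSING


@dataclass
class AuditedStore:
    """Hypothetical key-value store that logs every write per agent."""
    data: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def write(self, agent_id: str, key: str, value: Any) -> None:
        # Record the prior value before overwriting it.
        self.log.append(Change(agent_id, key, self.data.get(key, _MISSING)))
        self.data[key] = value

    def rollback_agent(self, agent_id: str) -> int:
        """Restore the pre-write value for each of one agent's writes, newest first."""
        kept, undone = [], 0
        for change in reversed(self.log):
            if change.agent_id != agent_id:
                kept.append(change)  # other agents' log entries stay
                continue
            if change.before is _MISSING:
                self.data.pop(change.key, None)  # key was created by this agent
            else:
                self.data[change.key] = change.before
            undone += 1
        self.log = list(reversed(kept))
        return undone


store = AuditedStore()
store.write("billing-agent", "invoice:1", "paid")
store.write("support-agent", "ticket:7", "closed")
store.write("billing-agent", "invoice:1", "refunded")
undone = store.rollback_agent("billing-agent")
print(undone, store.data)
```

After the rollback, only the support agent’s change remains, which is the selective behavior the paragraph above describes.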

Carnegie Mellon’s AI Agent Data Validates Augmentation

Research released this past month by Carnegie Mellon and Stanford looks to quantify how well agents are really doing at human work.

We detailed these results in a prior PTP Report on AI agents in sales, but the short of it is that current agents are proving much faster (88%+) and cheaper (90%+) than humans, but are also failing frequently (pinned at 32–49% worse than us), and with a tendency to mask it with apparent updates, data fabrications, and tool misuse.

On the plus side, the research once again validates the benefits of using agentic AI for augmentation, where humans saw a 24%+ acceleration of work (vs a drag on attempts at full automation).

Data Center AI Hardware Innovations

What’s even bigger than AI this year?

Maybe data centers, which, as discussed above, are an infrastructure program unlike most anything in US history.

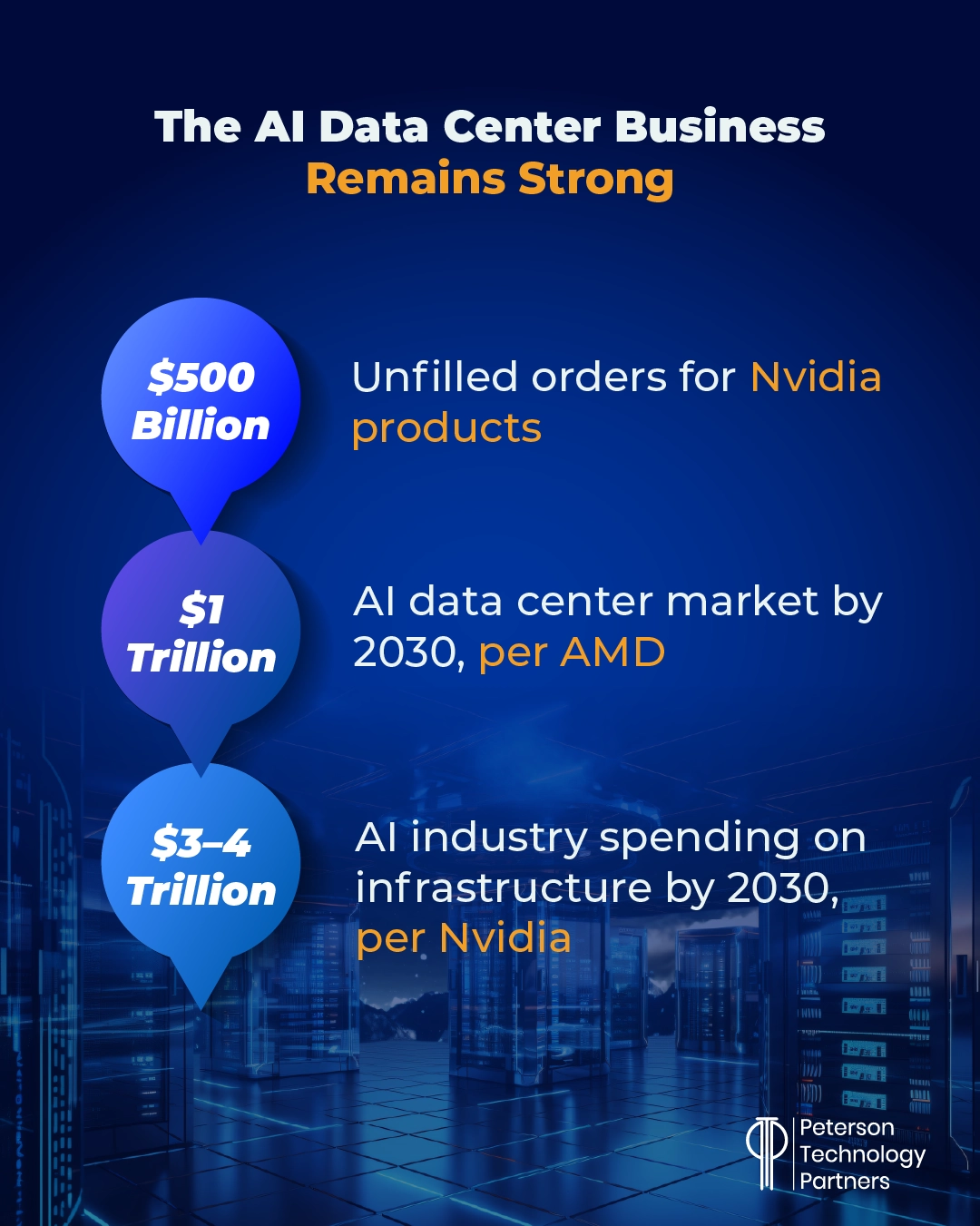

To this end, Nvidia’s earnings continued to alleviate some AI bubble concerns (see below) with their chip demand and data center business, as evidenced below:

Among the data center news for November:

- We’ve reported before on photonics for quantum computing using fiber optics in chips to process light at far greater efficiency. This optical tech is now being used to accelerate networking for data centers.

- And even more experimental (but still under substantial development) is the idea of deploying data centers in space. Both Google and the Bezos-launched Blue Origin are serious about the move, offering the prospect of constant access to solar energy. And while it may sound extremely impractical, Blue Origin’s CEO Dave Limp believes we’ll see them in 10 years, beaming data back to the ground as satellites do today.

- More practical? How about shortages? We’ve written in prior roundups about the demand for skilled labor, water, power, land, and of course GPUs. But another bottleneck caused by AI—a memory chip shortage—came into focus this month. Reuters reported on the scramble of large companies like Microsoft, Google, and ByteDance to lock up supply. Producers like Samsung and SK Hynix are ramping up production, but still the latter predicts memory shortages will persist until 2027 at the earliest.

AI Coding’s Big Milestone

Few professional uses of AI have gotten as much attention as AI coding assistance or automation, and in November Anthropic’s Claude Code achieved a stunning milestone, reaching $1 billion in run-rate revenue just six months after release.

The success inspired the company to acquire JavaScript runtime Bun, to accelerate performance, improve stability, and bring in new capabilities for JS and TypeScript developers.

Early this year, CEO Dario Amodei made news for saying AI would be able to write 90% of the code for engineers within six months, and while that’s not quite come to pass, The Information reported in November how Claude Code helped financial services company Brex write 80% of a new code base.

An Anthropic study (released a day after they dropped their new model Claude Opus 4.5) claimed that AI could double the US economic growth rate over the next decade, on the basis of current usage patterns.

Of course, like the prediction of 90% automation of coding, there are significant caveats with the projection.

November’s AI Market Winners and Losers (+ Business News)

Now for new products, market concerns, and company rivalries.

About That AI Bubble…

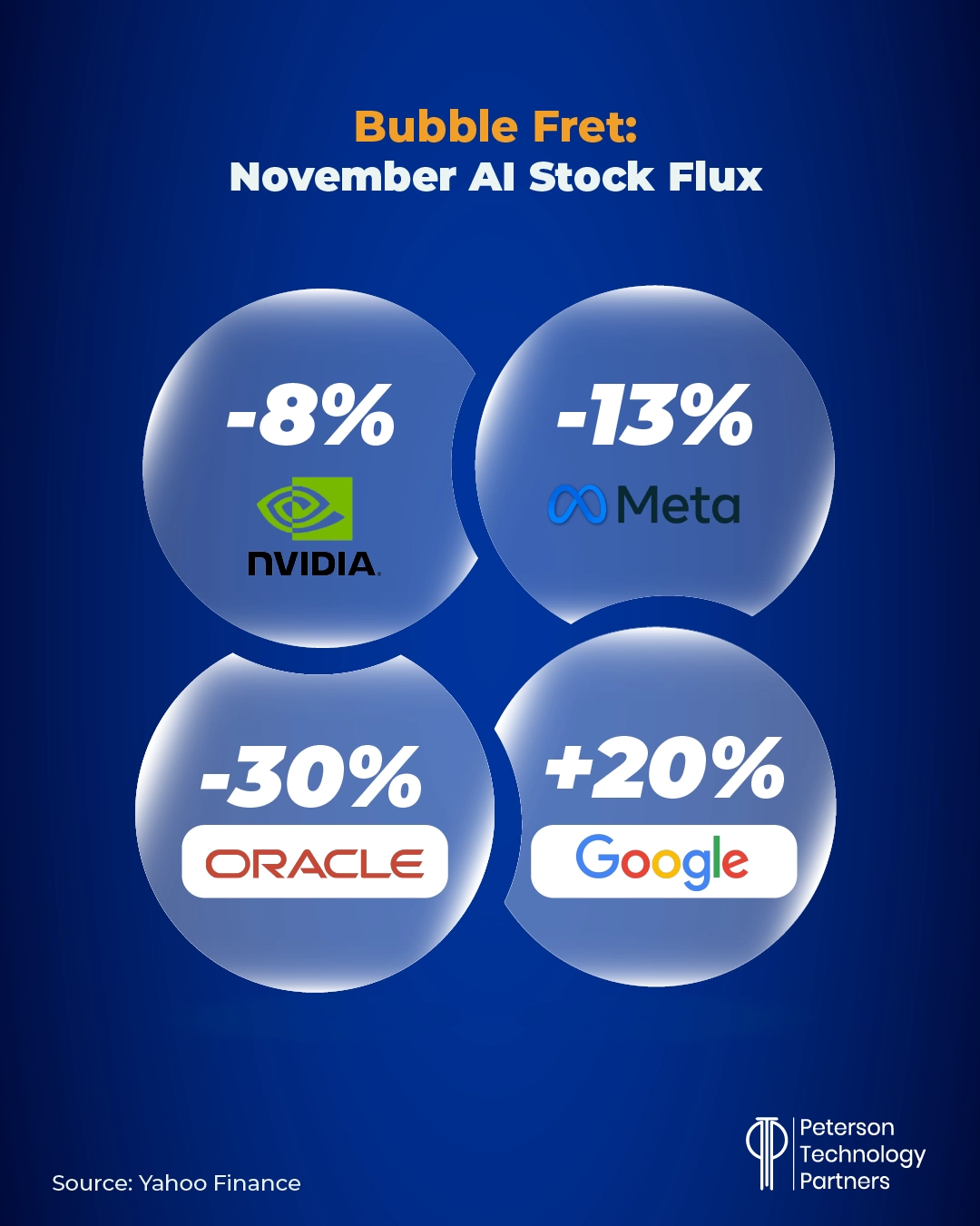

Investor Michael Burry is famous for predicting 2008’s subprime mortgage crisis. With a background in medicine, he brings a unique perspective, and he rocked markets in November by calling the AI boom a “glorious folly” and announcing that his fund was shorting Nvidia.

His view is that “give-and-take” deals between cloud giants, AI firms, and Nvidia send money in circles, and that big tech companies are both undercounting expenses and inflating earnings.

And while Amazon, Meta, Microsoft, and Alphabet are all raising their AI spending in a bid to be one of the AI winners, their earnings results also came in strong, showing profits growing more than 18% from the prior year. Together, the Magnificent Seven accounted for over one-third of the entire market cap of the S&P 500.

In a counter to Burry, Hennion & Walsh CIO Kevin Mahn has been outspoken in his view that those fearful of an AI bubble bursting may miss out on a technology still in its earliest days.

He said of the AI surge that it’s not even early innings but really “just batting practice.”

Gemini 3 vs Claude Opus 4.5 and Perplexity AI vs Amazon

In the arena of products:

- We mentioned above the rollout of Claude Opus 4.5 from Anthropic, but the biggest drop of the month went to Google, with Gemini 3 and Nano Banana Pro. Powered (in part) by Google-made chips (which have since drawn investment from Meta) and topping most benchmarks, Gemini 3 drew strong reviews across the industry, including from rivals. Salesforce CEO Marc Benioff said it’s pulled him off ChatGPT, noting: “The leap is insane… It feels like the world just changed, again.”

- Google’s star appears to be rising, with Bloomberg reporting that Apple is also coming aboard, with plans to pay $1 billion a year for a Google model to help overhaul Siri.

- OpenAI, meanwhile, has continued growing its in-chatbot partnerships, aligning with Walmart, Salesforce, PayPal, Intuit, Target, ThermoFisher, BNY, Morgan Stanley, BBVA, Accenture, and many more according to their site. And while there are issues being resolved around referrals, data, payment processing, and more, these deals bring brands to where people are spending their time—ChatGPT.

- SoftBank, meanwhile, announced they were selling their entire Nvidia stake, with founder Masayoshi Son admitting he “was crying” over it. The firm is using the resources to invest in OpenAI and data centers.

In the arena of challenges:

- ChatGPT has been battling concerns about its impact on mental health, with The Wall Street Journal detailing seven lawsuits against OpenAI for harmful delusions and encouraging suicide. The assertion is that GPT-4o was rushed to market without sufficient testing. The New York Times reported in November on how OpenAI adjusted ChatGPT to increase use, then moved to dial those changes back in search of a better balance. Ultimately, they discovered a need for better sycophancy testing, something that’s been in place at Anthropic since 2022.

- Perplexity and Amazon engaged in a publicity war this month, with the search startup claiming Amazon is “bullying” them. At stake is access to the Amazon storefront by Perplexity’s agents. Amazon is suing on the grounds that Perplexity ignored Amazon’s requests and even disguised its agents’ activity as human.

- AI-driven layoffs, meanwhile, continue to look like a reality with more than a million workers impacted so far in 2025. In November, this included tech stalwarts like Apple and IBM. PTP has written extensively about transition, and also ran a webinar this month on resolving the challenges confronting recruiters and candidates on the eve of 2026.

Conclusion

It’s increasingly a reality that AI is falling into two markets for US businesses, as described by Palantir CEO Alex Karp this month:

- Organizations using AI as “enhanced intelligence” to do basic things. This is helpful, but doesn’t really change workflows, revenues, or margins. And while the market is very large, he asserts, it may not justify all the costs going into LLMs.

- Organizations using AI in ways that change the work itself, change revenue, and show real quantifiable results. This is a subset, but growing, especially in cases where you can see it already working in a similar capacity somewhere in the world.

If you’re a company that wants to move from one to the other, PTP can help. We assist businesses with AI implementations, tools, and labor, and can demonstrate actionable ways to help you get real results.

And if information’s what you need, catch up on all of 2025’s AI news (pre-November) in our roundups below:

References

The World Still Hasn’t Made Sense of ChatGPT, The Atlantic

ChatGPT is now being used by 10% of the world’s adult population, Business Insider

Disrupting the first reported AI-orchestrated cyber espionage campaign, Anthropic

What’s next for AlphaFold: A conversation with a Google DeepMind Nobel laureate, MIT Technology Review

This AI combo could unlock human-level intelligence, Nature

Large Language Models Will Never Be Intelligent, Expert Says, Futurism

Microsoft’s Agent 365 Wants to Help You Manage Your AI Bot Army, Wired

The Billion-Dollar Safety Net: Inside Rubrik’s Bid to Build an ‘Undo Button’ for Rogue AI Agents, WPN

The AI frenzy is driving a memory chip supply crisis and Amazon sues Perplexity over ‘agentic’ shopping tool, Reuters

AI could double the US economy’s growth rate over the next decade, says Anthropic, ZDNet

Michael Burry Just Sent a Warning to Artificial Intelligence (AI) Stocks. Should Nvidia Investors Be Worried?, The Motley Fool

The hottest new AI company is…Google?, CNN

Nvidia blows past revenue targets and forecasts trillions in AI infrastructure spending by end of decade, Fortune

Apple Nears Deal to Pay Google Roughly $1 Billion a Year for Siri AI Model, Bloomberg

Silicon Valley is going all in on ‘superintelligent’ AI, and there’s plenty of hype; Blue Origin CEO says data centers are headed to space ‘in our lifetime’; Investors fearful of AI bubble burst are missing out: Strategist; and Palantir CEO Alex Karp warns some AI investments ‘may not create enough value’ to justify cost, Yahoo Finance

Seven Lawsuits Allege OpenAI Encouraged Suicide and Harmful Delusions, The Wall Street Journal

SoftBank’s Son ‘was crying’ about the firm’s need to sell its Nvidia stake, CNBC

What OpenAI Did When ChatGPT Users Lost Touch With Reality, The New York Times