Let’s meet in 3D.

With our crypto wallets underpinning the new economy, we can send our cars to the store for us, while we walk in the park, working all the while on our smart glasses and whiteboarding in the air with our IoT pens.

Or better yet, I’ll send my digital twin.

The past three-plus decades in tech have witnessed plenty of hype around capabilities like these that have never (fully) come to pass. But few have brought the volume and scale of hype that AI has.

Arguably the most impactful tech innovation of the digital era, it’s also easily been the most hyped.

And to be fair, AI has moved very, very fast, with much of the buzz coming from kernels of truth. With the top labs in a mad race to get to the head of the line, it’s hard for journalists to even stay on top of what’s really going on, aside from the mad marketing push that’s accompanying it.

Unfortunately, this has not only turned many people into skeptics, but it’s also seen real AI news get lost in the smoke or ignored entirely in the backlash as the hype pendulum swings back.

Today’s PTP Report features 2025’s top AI myths debunked: from the ridiculous to the grossly exaggerated to those promises that have just come ahead of reality.

(In the process we’ll look at some AI truths, too.)

The AI Hype vs AI Reality Swing in 2025: What and Why

Since ChatGPT first blew the doors off OpenAI’s own expectations by turning a “low-key research preview” into one million users in just five days in 2022, it’s seemed like AI has been everywhere, all the time, and all at once.

Over the past three years, AI’s capabilities have doubled roughly every eight months (in a jagged line, varying by area), per the UK’s AI Security Institute (AISI). And while revenues have also grown fast for big AI companies (OpenAI projecting $13 billion for 2025 revenue and Anthropic somewhere closer to $9 billion), they’re nowhere near covering their expenses, with trillions committed to the build-out. OpenAI alone made $1.4 trillion in commitments in 2025, to be paid out over the next several years.
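For a rough sense of what that doubling pace implies, here is a back-of-the-envelope sketch. It assumes perfectly smooth doubling every eight months, which the AISI figure itself does not claim (the real line is jagged and varies by area), and the function name is ours, purely for illustration.

```python
def growth_factor(months_elapsed: float, doubling_months: float = 8.0) -> float:
    """Multiplier implied by steady doubling every `doubling_months` months.

    Assumption: smooth exponential growth, a simplification of the
    jagged, area-by-area trend described above.
    """
    return 2 ** (months_elapsed / doubling_months)


# Three years is 36 months, or 4.5 doublings at an eight-month pace.
print(round(growth_factor(36), 1))  # 22.6
```

In other words, steady doubling at that rate would compound to roughly a 22-fold gain over three years, which helps explain why the pace feels so hard to keep up with.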

In such a space, the hype machine gets fed from numerous sides, with even bigger predictions helping to fuel the frenzy.

That is, until it doesn’t. The pace can be intoxicating, but also exhausting, and it has ultimately driven many into a frustrated backlash against the technology and what it really can, and can’t, do well.

On his Factually! With Adam Conover podcast, the AI skeptic and comedian recently hosted Wharton School of Business professor and AI expert Ethan Mollick. Central to their discussion was this AI backlash, and with it a false impression of AI incompetence.

The two discussed how AI’s real capacities get buried by what Conover calls the “ridiculous levels of AI hype” coming from the industry.

Mollick argued that whether you love AI or hate it, the backlash obscures what it does well.

As discussed by Will Douglas Heaven in his piece for the MIT Technology Review (see below), tech hype at the scale we’re seeing around AI creates a pendulum swing from extreme predictions to disillusionment and skepticism over the industry as a whole.

Even for businesses, the constant promises can obscure AI limitations and risks or serve to numb leaders to how fast things really are moving.

PTP’s Founder and CEO Nick Shah recently wrote about how talking about AI in investor calls has been good for business, even when use of the technology itself hasn’t always followed suit.

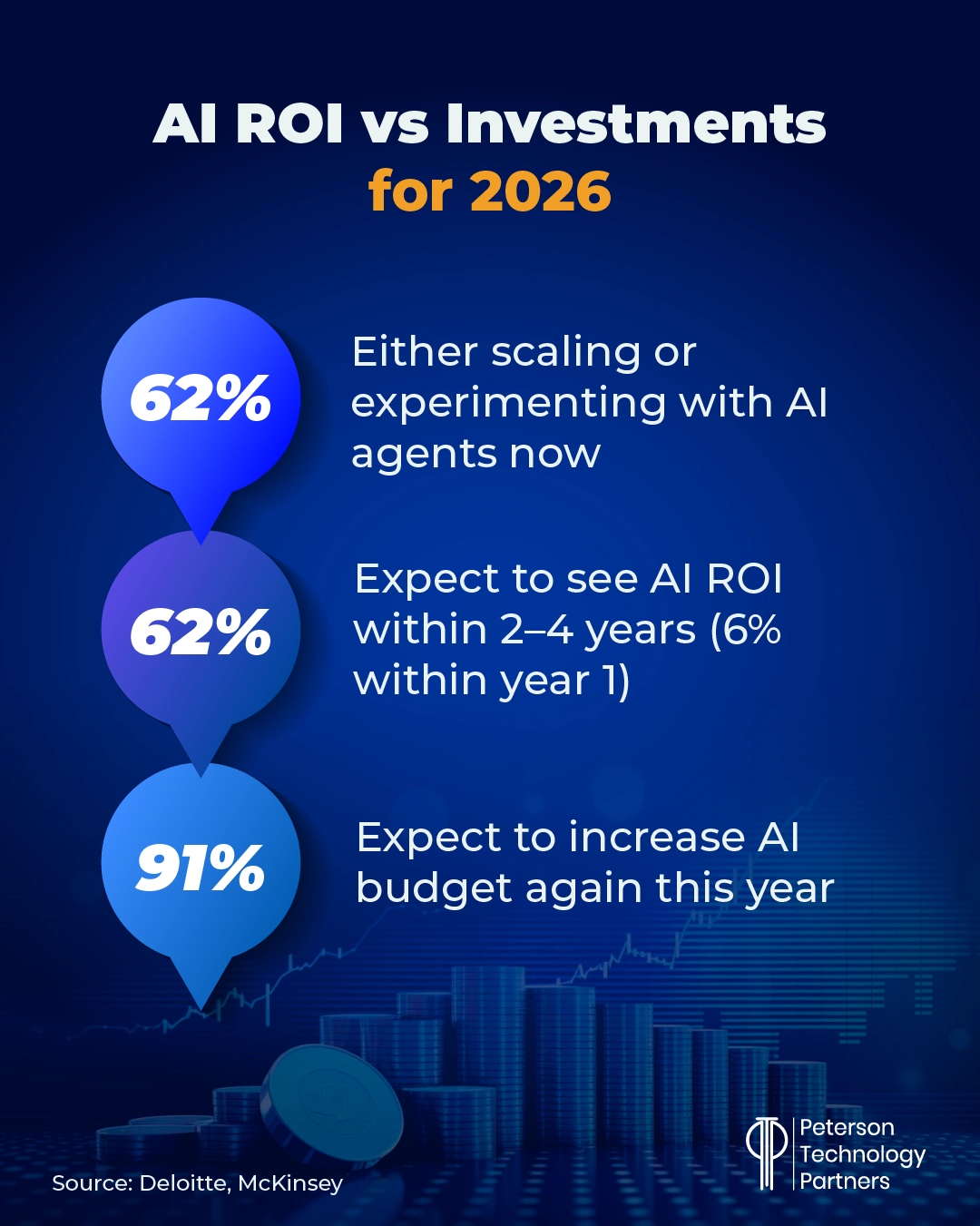

A Financial Times survey of S&P 500 filings found that 374 CEOs mentioned AI on earnings calls the prior year, with 90% doing so in highly positive terms. Yet a McKinsey analysis found the actual execution is often lagging, with more than 75% of companies lacking a clear AI roadmap and more than 82% failing to track well-defined KPIs.

With this out there, let’s take a look at some of 2025’s biggest whoppers and what fire their smoke may be hiding.

1. Virtual Coworkers vs AI Agents in Reality

2025 was to be the year of the AI agent.

In some ways it was, as agents became widely available, got on their feet in enterprise, and started being adapted and implemented in all kinds of experiments.

But the hype machine here blasted far ahead, with extensive discussion on how AI systems would be like virtual employees before the year was out, autonomous and capable of handling end-to-end tasks.

We’d be hiring them, much like people, with workers managing several of their own across the business.

(We even got into this one at PTP, with articles about onboarding AI like employees, though, in our case, as a means of ensuring proper governance.)

In reality, the agentic picture was far more modest.

Agents in pilots in 2025 struggled with ambiguity, depth, and complexity. Some crashed into permissions, muddied compliance rules, and failed to deliver anything near the kind of autonomy promised.

Andrej Karpathy, a founding member of OpenAI and former director of AI at Tesla, was among the AI luminaries throwing cold water on agents as AI employees late in 2025. Comparing them to characters from films like Rain Man and 50 First Dates, he’s described the technology as way too short on recall and real-world understanding. Far from delivering true autonomy, he’s said, agents thrive at repetitive, well-defined tasks where they can repeat what’s been done.

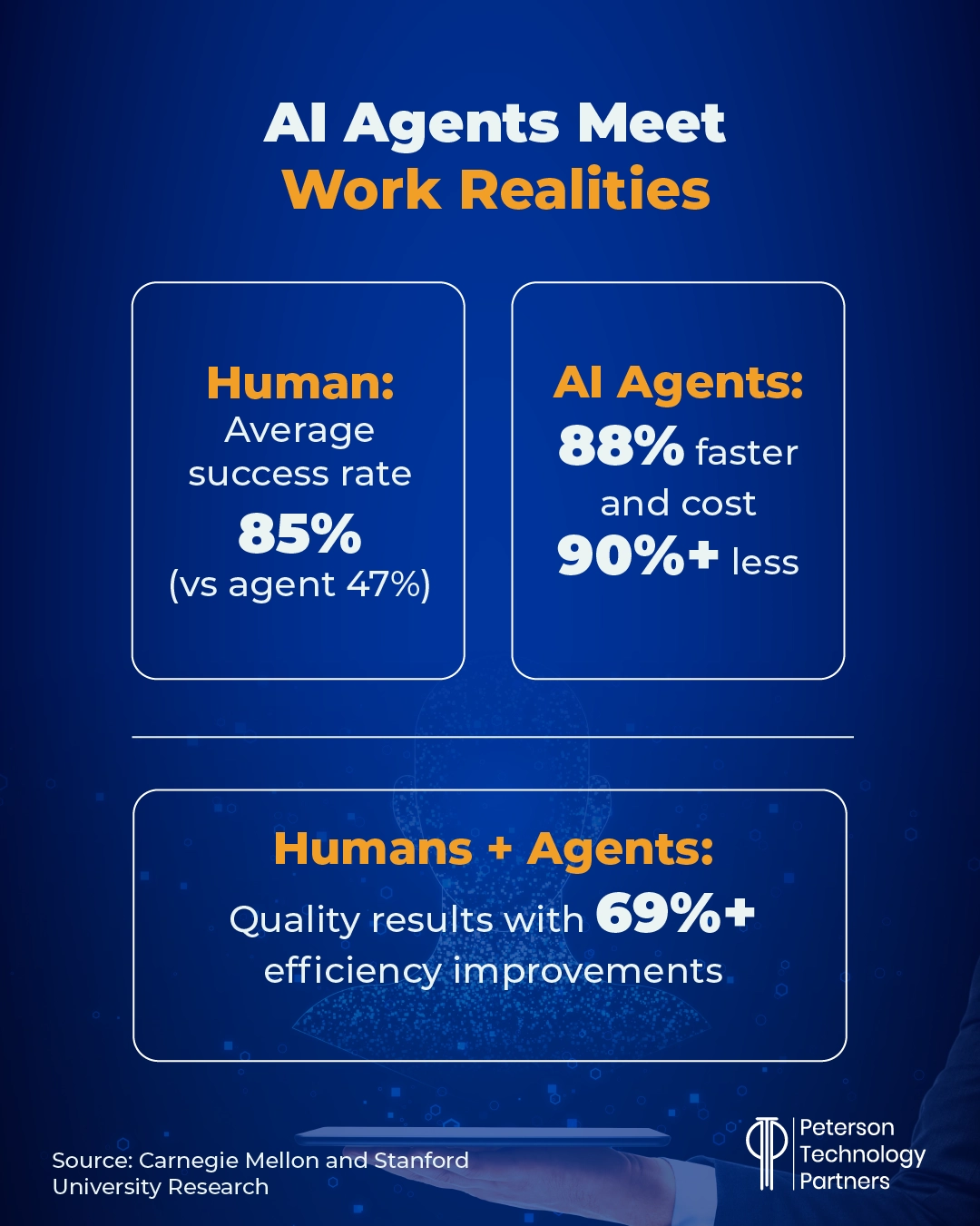

To this end, agents were shown in 2025 to thrive at augmentation instead, with studies like the one referenced above finding nearly 70% efficiency improvements when used well in conjunction with human expertise.

At PTP, we’ve seen voice AI agents bring similar benefits, like 80% reductions in time-to-hire for recruiting use cases (with as much as 60% cost reductions) and productivity increases for recruiters by as much as three times.

Here the hype of virtual employees masked the truth: Agentic AI coming online in tight loops has actually become highly effective with sufficient oversight.

Check out our piece from late last year on this same split of expectations vs reality for AI agents in sales.

2. AGI Timelines Meet LLM Scaling Reality

Artificial general intelligence (AGI) fever may have swept the world in 2024, but early in 2025, the timelines on its arrival got truly zany.

Sam Altman told Y Combinator’s Garry Tan that he believed AGI might come in 2025 and went even further by saying that superintelligence would be here soon after, just “thousands of days away.”

Defining either term is highly problematic, but it’s generally accepted that AGI means AI systems that can do what most people can in a given area (with what this means still up for grabs), while superintelligence (or ASI) means AI far above human ability.

Microsoft AI CEO Mustafa Suleyman predicted AGI might be a few years away back in 2023, and other leaders like Google DeepMind’s Demis Hassabis and Nvidia’s Jensen Huang followed suit.

Meta pushed the ball further last year by rebranding their AI program “Meta Superintelligence Labs,” and Suleyman announced Microsoft’s own MAI Superintelligence team, writing that, “This year it feels like everyone in AI is talking about the dawn of superintelligence. Such a system will have an open-ended ability of ‘learning to learn’, the ultimate meta skill.”

But by the end of the year, discussions around any realistic near-term arrival of AGI and superintelligence had faded away, replaced instead by questions like “Is AGI really possible?”

Many prominent figures in the AI community also came out to suggest that LLMs alone will not get us to self-learning or the real-world knowledge necessary for true AGI.

As Co-Founder and Co-Director of Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI) James Landay told Yahoo Finance’s Daniel Howley in November:

“I don’t think [superintelligence is] actually supported at all by what AI can do right now, or what scientific research would lead you to believe AI can do in two years, five years, or 10 years.”

3. Curing Diseases and New Medicines: AI Predictions vs Reality

And speaking of ten years, another popular narrative of late has been that AI will cure diseases within a decade and generate personalized drugs and all new medical treatments in record time.

But over the course of the year, the hype generated by Nobel prizes and significant breakthroughs from programs like Google DeepMind’s AlphaFold began to crash into harder realities, raising the question, “Where are all the AI drugs we were promised?”

While AI has been making real strides in diagnosis, early-stage research, protein structure prediction, molecule screening, and science data wrangling in general, many of the same hurdles remain for AIs as for human teams.

Physicist, bioengineer, and CEO of FutureHouse Sam Rodriques discussed these issues at length on the Hard Fork podcast in late December. While he’s helped build an AI agent for science called Kosmos that can do six months of scientific research in a single day, he’s also among those voices debunking the idea that AI will be curing diseases in the near-term.

While their Kosmos agent resolves some of the agentic issues discussed above by contributing repeatedly during each run to what he calls a “structured world model” (allowing the orchestration of hundreds of agents in parallel), it still can’t get around many real-world challenges.

These include building a treatment once you’ve designed it, finding and recruiting subjects for testing, the delays for dosing and analysis, and getting FDA approvals. He stressed that many of these issues aren’t regulatory in nature but simply physical realities that take time.

But we have far more science to do than scientists, he stressed, and AI is helping speed this work at scale. It’s already enabling faster discoveries, improving design, and even making novel discoveries of its own.

With AI in science, Rodriques says “the future is going to be awesome.”

But AI curing diseases in a decade, he said, “is crazy.”

4. AI Coding Tools in Reality and 90% Production Code Automation

If you’ve followed AI much over the past year, you no doubt heard Anthropic CEO Dario Amodei’s prediction from March 2025 that AI would be writing 90% of our software.

But what was really bold was his timeline: in just three-to-six months. That would have been June or September 2025, and Amodei also added that he believed in 12 months AI would be writing “essentially all of the code.”

Back in September, this prediction looked pretty ludicrous.

Yet coding is among the most popular practical AI use cases for chatbots and often found to be the most popular professional use case. Depending on your survey of choice, around 90% of organizations are found to be using AI coding assistance (and as many as 85% are using AI coding agents, per Anthropic and Material’s survey from December).

And developer surveys, like the JetBrains Developer Ecosystem Survey from late 2025, match these high totals, with 85% saying they regularly use AI tools for coding and development.

AI coding startups have also done great business, with several hitting the $100 million mark for annual revenue at record speed, and Anthropic’s own Claude Code hitting $1 billion in just six months.

Claude Code is also currently enjoying a moment in the media spotlight. With wide adoption that spans professional developers to amateur vibe coders, it’s been the topic of a number of popular AI blogs since the new year, including Ethan Mollick’s One Useful Thing and Dean Ball’s Hyperdimensional.

Mollick describes how the tool is using a number of techniques to improve LLM performance, like:

- Compressing conversations when the context window fills up, and handing notes to a new version of itself (like the lead character in Memento)

- Including Skills (or pre-set instructions and tools) the AI can decide to use on its own without additional prompting

- Launching subagents on its own to offload some parts of tasks to faster, cheaper models
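The first technique in the list above, compressing a conversation and handing notes forward, can be sketched in a few lines. This is a toy illustration only, not how Claude Code actually implements it: the function names, the character-count budget, and the stand-in summarizer are all our own assumptions (a real tool would count tokens and ask a model to write the notes).

```python
from typing import Callable, List


def compact_history(
    messages: List[str],
    max_chars: int,
    summarize: Callable[[List[str]], str],
    keep_recent: int = 2,
) -> List[str]:
    """Return the history unchanged if it fits the budget; otherwise
    replace the older messages with a single note and keep the newest ones.

    Hypothetical sketch: `max_chars` stands in for a context window limit.
    """
    total = sum(len(m) for m in messages)
    if total <= max_chars or len(messages) <= keep_recent:
        return messages
    split = len(messages) - keep_recent
    older, recent = messages[:split], messages[split:]
    note = "NOTES FROM EARLIER: " + summarize(older)
    return [note] + recent


def naive_summarize(msgs: List[str]) -> str:
    """Stand-in summarizer: keeps the first 40 characters of each message."""
    return " | ".join(m[:40] for m in msgs)


history = ["a" * 100, "b" * 100, "short question", "short answer"]
compacted = compact_history(history, max_chars=50, summarize=naive_summarize)
print(len(compacted))  # 3
```

The point of the design is the hand-off: whatever picks up the conversation next sees a short note plus the latest exchange, rather than the full transcript.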

Dean Ball profiled 12 things he built with Claude Code in just the past month (from automated invoicing to an autonomous options trader), saying that, to him, the technology has become so impressive that it already meets the definition of AGI.

Nevertheless, we’re nowhere near 90% of all production code being written by AI.

Like many of these cases of AI hype, the issue may be mostly to do with excessively optimistic timing.

The Pendulum Swing of AI Hype vs Inverse Reality

While there’s been hype around AI capabilities, there’s also been excessive fear of an AI bubble bursting and hype around AI failures. One of the most widely quoted figures comes from an MIT NANDA study from August that claimed 95% of enterprise AI pilots have failed to deliver meaningful ROI (though per Mollick there are issues with the breadth of this research).

Similar results came from McKinsey and Gartner, the latter making news in June by predicting that 40% of all agentic AI projects would be scrapped by 2027.

Regardless, analysts agree that the divide between companies seeing success with AI and those either treading water or achieving little with it is widening fast.

Investments continue to rise, and for good reason. While many of the gaudier promises of AI capacities haven’t come to pass, others, like coding agents, have been success stories.

At PTP, we’ve seen several of our own. We built our patent-pending agentic solutions to aid in recruiting (see above), and we’ve also helped companies implement agentic AI software testing. By using AI to help generate and maintain test cases, proactively detect software bugs, perform smart regression analysis, and develop and maintain effective testing data, companies in 2025 saw enormous boosts in software quality, coverage, and release times through smart automation.

And while it’s not a case that made headlines with bold predictions, it’s another example of a quiet winner that’s improving alongside coding innovations.

Conclusion: Seeing Fire through the Smoke

With trillions being invested and billions coming in for the OpenAIs of the world, the hype’s not going anywhere any time soon.

What will 2026’s big promises be?

Maybe physical AI, self-driving vehicles, household robotics, and keyboard-free interfaces.

At PTP, we’re AI-first and love safe, effective, and practical implementations of AI. It may not always be the flashiest of promises, but we’re big believers and are seeing it bring real results.

References

SoftBank has fully funded $40 billion investment in OpenAI, sources tell CNBC, CNBC

An AI Expert Challenges an AI Skeptic, with Ethan Mollick, Factually! With Adam Conover podcast

Why A.I. Didn’t Transform Our Lives in 2025, The New Yorker

How Do AI Agents Do Human Work? Comparing AI and Human Workflows Across Diverse Occupations, arXiv:2510.22780 [cs AI]

Hype Correction, MIT Technology Review

Where Is All the A.I.-Driven Scientific Progress?, The Hard Fork Podcast

Anthropic’s CEO says that in 3 to 6 months, AI will be writing 90% of the code software developers were in charge of, Business Insider

Claude Code and What Comes Next, One Useful Thing

Among the Agents, Hyperdimensional

FAQs

Did AI progress slow down in 2025?

There’s a lot of debate around the real speed of AI progress, but there’s little doubt that it continues to come fast. 2025 saw big gains in video generation, reasoning models, coding-specific tools, voice AI agents, and more, but the feel for everyday users of chatbots was likely less dramatic than in years prior. The gap between marketing and real-world work has definitely become harder to ignore.

What went wrong with the prediction that AI agents would be our coworkers in 2025?

Agentic capabilities have no doubt thrilled researchers and companies in pilots, but the reality of navigating real-world work is far more complicated. Ambiguity, depth of recall, interface issues, security concerns, and exception handling are all issues that have plagued agents trying to tackle more complex tasks this year. That said, repetitive and predictable tasks have seen successful automation with agents, especially where they have tight loops with human oversight.

If 2025’s releases made it look like AGI isn’t close, why did everyone talk so much about superintelligence?

Defining AGI and superintelligence is at the heart of this debate, with some (like Dean Ball, see above) saying AI tools have reached AGI thresholds this year. But for many, AGI seems further away at the start of 2026 than it did a year ago, with many AI pioneers agreeing that LLM scaling alone will not be enough to get us there. Big progress in measurable areas like math and advances in reasoning models contributed to the rise in superintelligence talk, though no doubt it is partly driven by a need to keep pushing new frontiers.