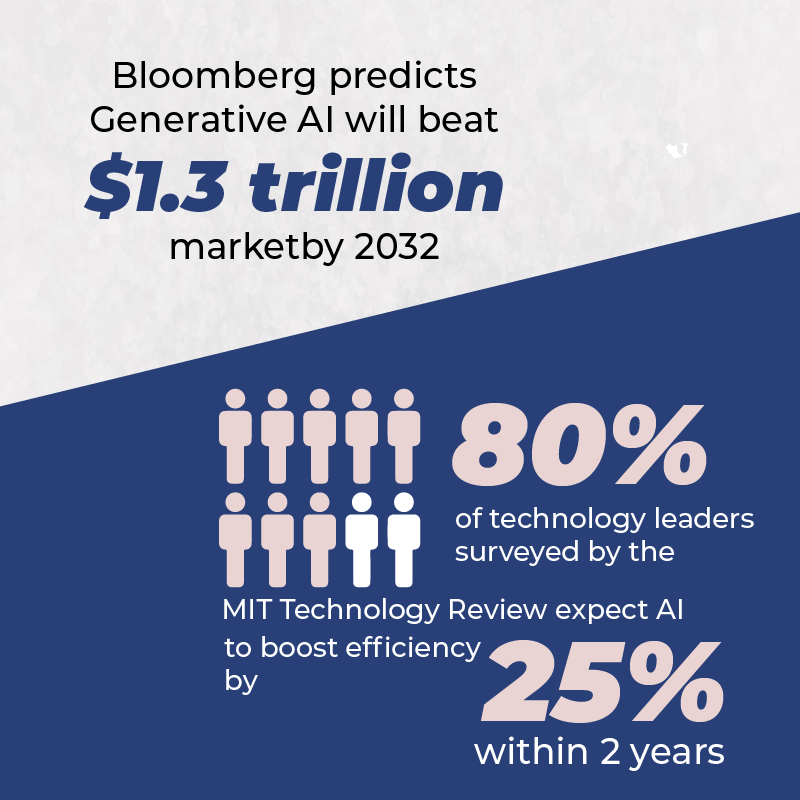

Generative AI is already in professional use, handling customer service inquiries, automating tasks, and empowering non-technical employees, as discussed in this PTP article. And its use is expected to keep growing sharply in 2024 and beyond.

But despite early successes and an almost unrivaled surge of enthusiasm, serious concerns remain. You’ve probably encountered stories of generative AIs appearing to blatantly lie, such as providing bogus legal cases and opinions (as discussed in this PTP piece), or giving ridiculous advice, like claiming a food bank is one of Ottawa’s top tourist destinations.

Generative AI has also been blamed for causing real harm, such as a tasteless poll that ran alongside an aggregated news story, or a college professor being convinced that his students had cheated when, in fact, they hadn’t.

The tendency of generative AIs to occasionally produce outputs that are erratic or factually wrong is called hallucination. We’ll look at what it is, why it happens, and what users of generative AI can do to guard against it.

Why do generative AIs hallucinate?

Because generative AI is built more around probability than precision, it’s no surprise to experts that it sometimes makes mistakes. After all, these systems learn by making guesses and being corrected when those guesses are wrong, as discussed in this PTP article.

Erroneous outputs can come from numerous sources, including incomplete or flawed prompts provided by users themselves. For example, as discussed in this Harvard Digital Data Design Institute video, suppose you ask an AI whether cats can speak English. We’d expect the answer to be an obvious no, but there are cartoon cats, like Garfield, that do, so an ambiguous question leaves room for an unexpected answer.

The data that generative AI learns from is another source of error. It contains loads of useful information, but it’s also rife with bias and noise, including personal opinions, political slant, hate speech, rants, and blatant untruths.

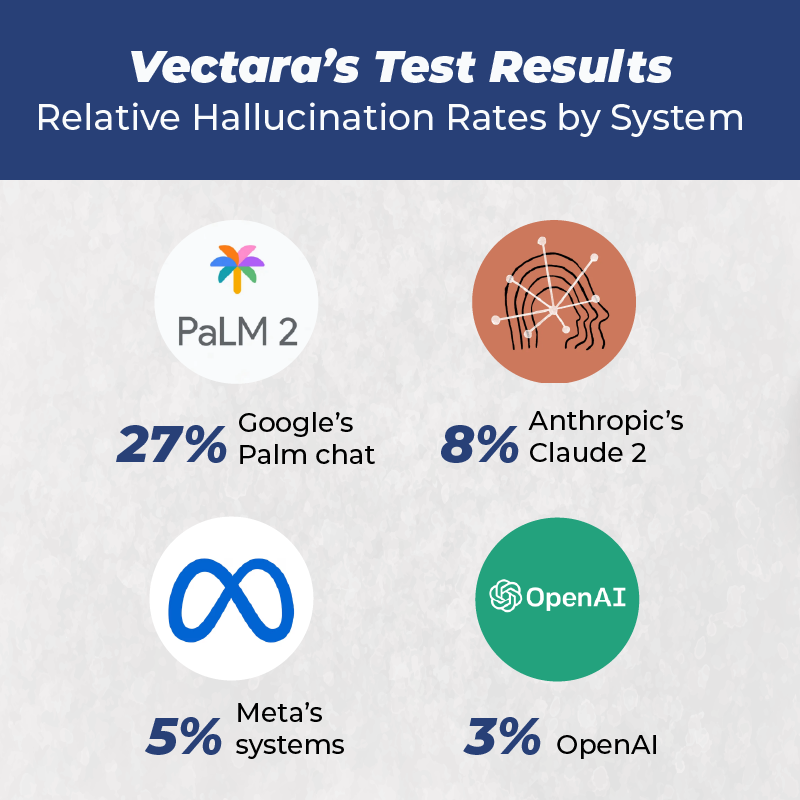

Hallucinations may also be more common than many assumed. A recent New York Times article reports that they appear to occur at a much higher rate than previously suspected. Profiling Vectara, a start-up founded by former Google employees, the article details how even a relatively simple task (summarizing news articles) caused the tested generative AIs to hallucinate regularly, at rates ranging from 3% to 27% depending on the system.

Because summarizing a provided article is a narrow, constrained task, hallucination rates for more open-ended requests are likely to be much higher.

What can we do about it?

As discussed, generative AI is a technology built by humans from human-generated data, so it shouldn’t be all that surprising that it makes mistakes. But because we’ve come to expect precision and logic in our interactions with computers, users of generative AI may need to adjust their expectations.

No one reasonably expects a quick internet search to yield 100% factual results, for example. Yet when a response comes from a generative AI, perhaps formatted to look like an official document or delivered with an air of authority, our expectations shift, and we’re startled when the results turn out to be blatantly wrong.

Rather than perfection, a more realistic goal may be for generative AI systems to simply make fewer mistakes than people do.

And since most generative AIs currently have no built-in fact-checking, the responsibility for verifying results falls entirely on users. (Using one generative AI to fact-check another isn’t a complete fix either: the checker can hallucinate too, letting false results pass as verified.)

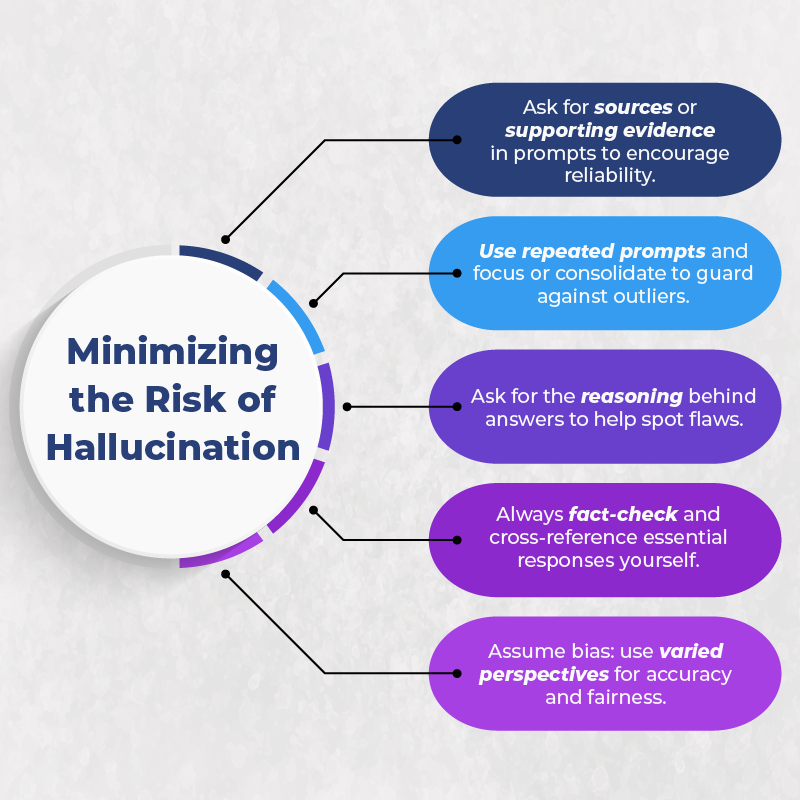

Karim Lakhani, a Harvard Business School professor and chair of the Digital Data Design Institute, offers these specific ways to minimize the risk of hallucination from generative AI:

- Ask for sources or supporting evidence in prompts to encourage reliability.

- Repeat prompts, then compare or consolidate the answers to guard against outliers.

- Ask for the reasoning behind answers to help spot flaws.

- Always fact-check and cross-reference essential responses yourself.

- Assume bias: use varied perspectives for accuracy and fairness.
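The tip about repeating prompts and consolidating the answers can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Lakhani’s own method: it assumes you have already collected several answers to the same prompt (the `consolidate` helper and the sample answers are hypothetical), normalizes trivial differences, and keeps the most common answer only if it wins a clear majority. Anything else is flagged for manual fact-checking.

```python
from collections import Counter
from typing import List, Optional


def consolidate(answers: List[str], min_share: float = 0.5) -> Optional[str]:
    """Hypothetical helper: return the majority answer from repeated runs
    of one prompt, or None when there is no clear majority."""
    if not answers:
        return None
    # Ignore trivial differences (case, surrounding whitespace) before counting.
    normalized = [a.strip().lower() for a in answers]
    top_answer, votes = Counter(normalized).most_common(1)[0]
    # Accept only if the top answer holds strictly more than min_share of the votes.
    if votes / len(normalized) > min_share:
        return top_answer
    return None  # disagreement or outliers: fact-check this one manually


# Hypothetical answers from asking "What is the capital of Canada?" five times.
runs = ["Ottawa", "Ottawa", "Toronto", "ottawa ", "Ottawa"]
print(consolidate(runs))  # prints: ottawa
```

Agreement is not proof of accuracy: a model can repeat the same hallucination every time, so even a consensus answer still needs to be checked against a reliable source.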

By verifying sources and fact-checking (as we already expect of ethical journalism and sound research), users can guard against hallucination, even if the risk can’t be removed entirely.

Conclusion

The speed and apparent creativity of generative AIs can seem miraculous, as well as terrifying. Because these systems tend to hallucinate, their outputs can pose serious risks for users who aren’t prepared, in addition to the other risks outlined in this article.

The potential power is undeniable, but with that power comes a need for users to take personal responsibility, as failing to do so can have severe consequences.

While the companies building and maintaining generative AIs must continue to improve their reliability, all of us can safeguard against hallucination by using them wisely.