Nvidia, the maker of the graphics processing units at the heart of AI development, has been in the news a lot over the last year, with a stock surge of 219% and a stunning valuation above $1.1 trillion. But the company stole headlines for a different reason at the end of November, when CEO Jensen Huang said at the New York Times DealBook Summit that he believes AI will be “fairly competitive” with humans “within the next five years.”

Chatbots and art-generation AIs have dominated the news over the past year, but anyone who’s used ChatGPT knows it has a long way to go before it is competitive with human beings overall (see below for one example).

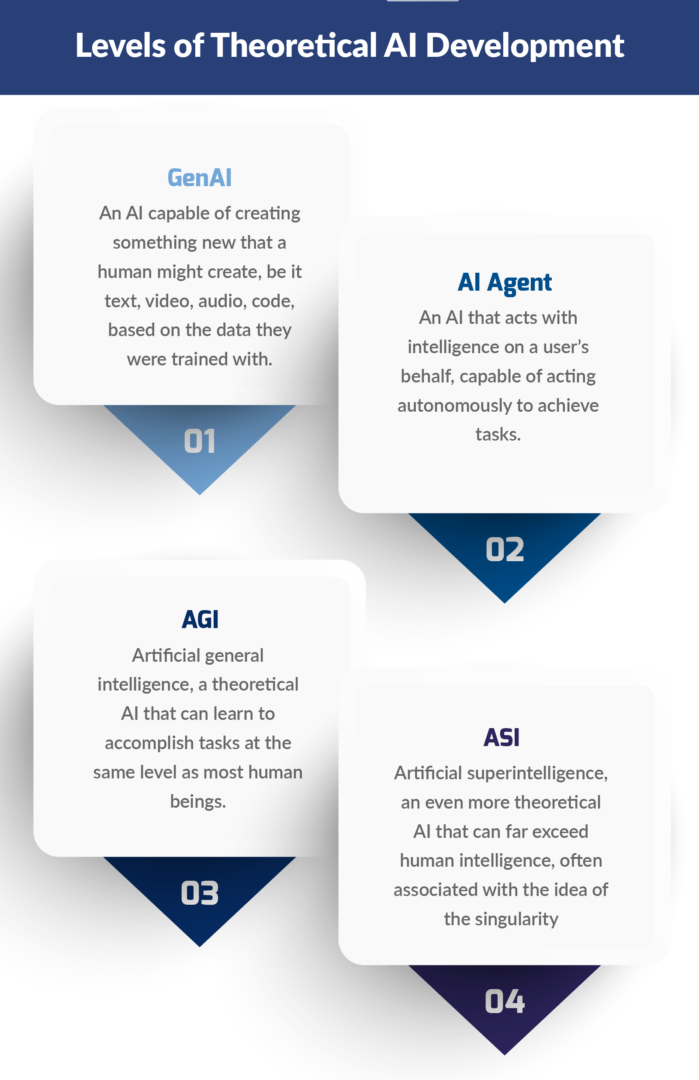

This measure (AI that can do what a person can do) is the generally accepted definition of AGI, or artificial general intelligence. As OpenAI’s once-and-again CEO Sam Altman said, somewhat controversially: “for me, AGI is the equivalent of the median human that you could hire as a co-worker.”

DeepMind co-founder and chief AGI scientist Shane Legg has also predicted we’ll have AGI sooner rather than later, telling Dwarkesh Patel on his podcast that he sees no major roadblocks to AGI and that he thinks there’s a 50/50 chance we’ll have it by 2028.

If these predictions hold, the impact will be truly stunning: AIs that can do what regular people can, across tasks, would transform the world as we know it. In this article we look at what AGI is and who its true believers and skeptics are, and consider how it has become the next target in the AI arms race.

A brief history of AGI and what we mean by it

The abbreviation AGI, used to describe human-level artificial intelligence, was popularized by AI researcher Ben Goertzel starting more than 20 years ago. However, Goertzel credits Shane Legg with suggesting the term to him, and its actual origin may be a 1997 article.

Other terms have also been used for the same concept, including Ray Kurzweil’s “strong AI.”

Many consider the term problematic, since artificial intelligences develop in their own ways, even when modeled on human intelligence. (Check out this PTP article for a discussion of the neural network as a model for genAI.)

Still, “AGI” as the term for a strong AI with general intelligence enjoys widespread use, even in-house at top AI companies like OpenAI, Anthropic, and DeepMind.

AGI’s role in the AI arms race

Developing AGI was a driving goal behind the formation of both DeepMind, which Google acquired and made the cornerstone of its AI development, and OpenAI, whose mission page leads with “our vision of the future with AGI” and the statement that they “are building safe and beneficial AGI.”

OpenAI CEO Sam Altman has cited ASI (artificial superintelligence) as a reason OpenAI will not go public soon, saying “when we develop superintelligence, we are likely to make some decisions that most investors would look at very strangely.”

All of this boiled over into public conflict in November of this year, when OpenAI’s board voted to remove Altman. Several days of chaos followed, with reports that Altman would start his own company, be hired by Microsoft, or return to OpenAI, which is what eventually happened just days later (also triggering a shakeup of the board).

“We always said that some moment like this would come,” Sam Altman said of the chaos, in a Time Magazine interview, “between where we were and building AGI.”

“OpenAI is a very unusual organization,” board member Helen Toner said of the ouster of Sam Altman, “and the nonprofit mission—to ensure AGI benefits all of humanity—comes first.”

It remains unclear exactly what triggered the firing. Some have pointed to OpenAI’s mysterious Q* project, and the comments from Altman and board members make it clear that AGI development played a role.

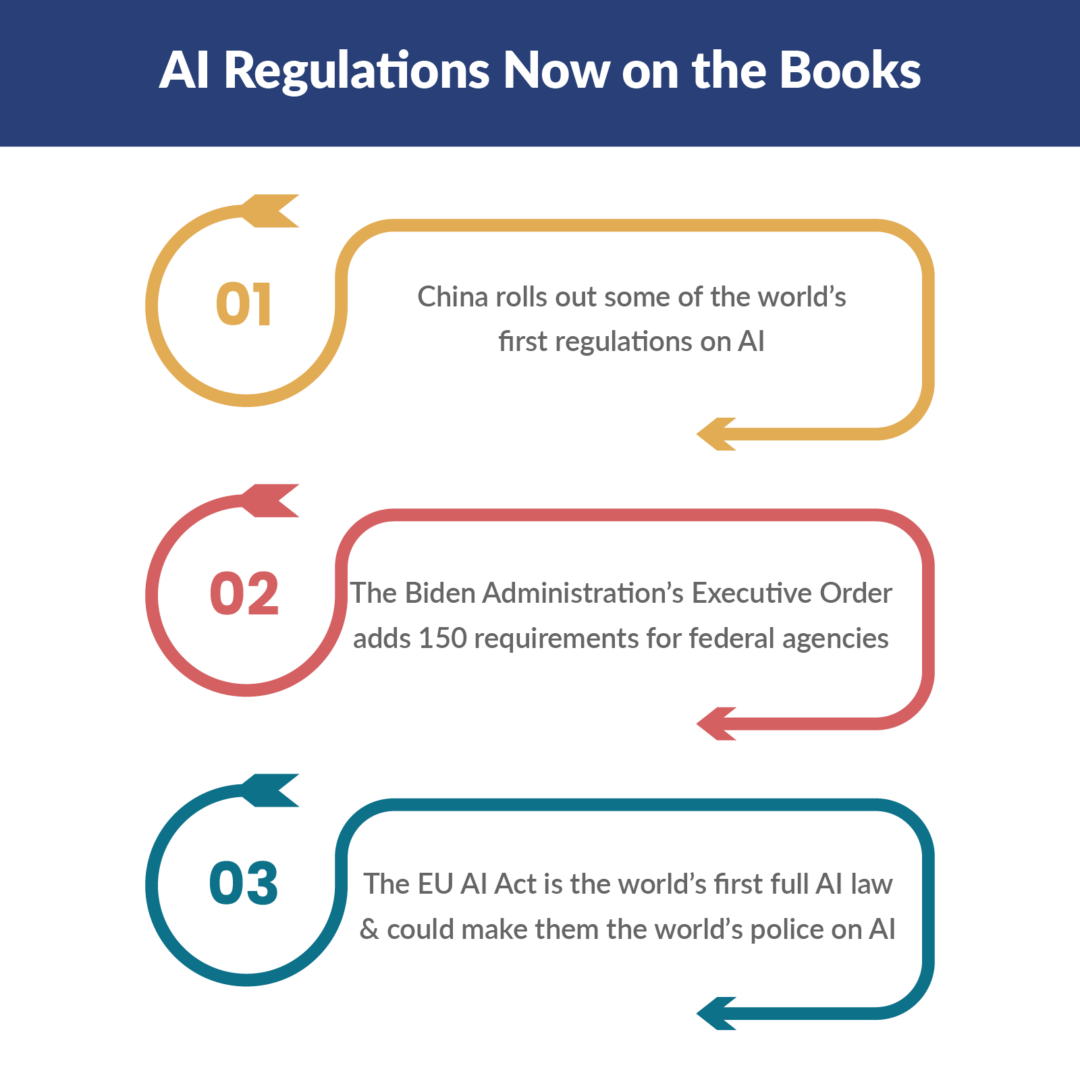

With the industry racing to develop AI with greater capabilities, governments are also facing intense pressure to act. See the graphic below for examples of current legislation, and check out this PTP article that details the EU’s landmark AI Act.

The prophets/profits of doom: AGI predictions and skeptics

Of AGI, OpenAI’s Sam Altman has said: “I think AGI will be the most powerful technology that humanity has yet invented.” He has named education and healthcare among the areas that will be transformed first by this new technology.

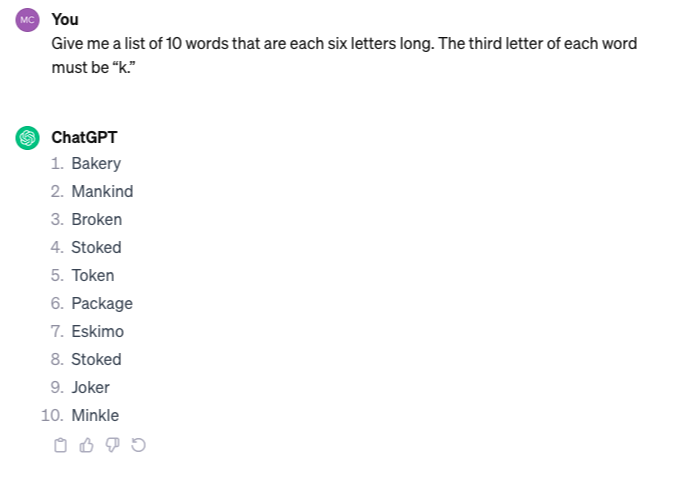

Microsoft has suggested that the seeds of AGI are already present in GPT-4. But for a counterpoint, try typing the following prompt into ChatGPT (GPT-4 or earlier), an example first noted by Columbia professor Vishal Misra and reported in Wired:

Give me a list of 10 words that are each six letters long. The third letter of each word must be “k.”

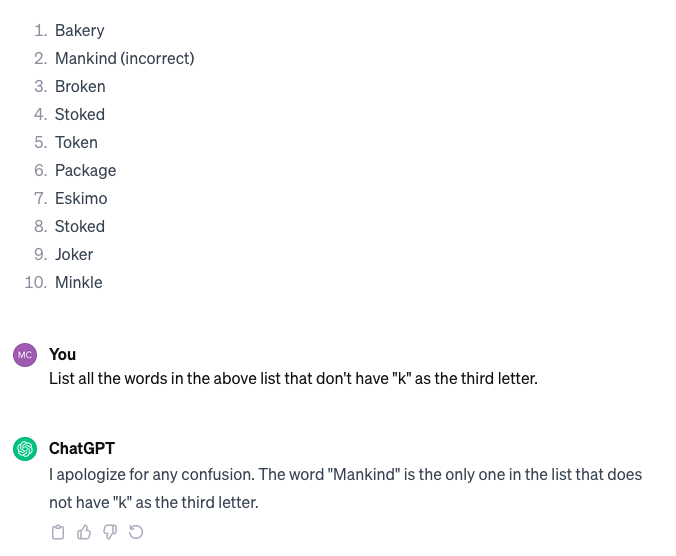

Now examine the list you’ve been given back. Do all of the words actually have “k” as the third letter? This is what I got back:

Four of the ten words here do not have “k” as the third letter: mankind, package, stoked, and minkle (and mankind and package aren’t six letters long, either). But it got most of them right. Now follow up with this prompt:
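Checking these constraints by eye is easy to get wrong, so here is a minimal Python sketch that tests each word against both rules in the prompt: six letters long, with “k” as the third letter. The function name `check_words` is our own, and “asking” is an illustrative word we added as a correct example; the other four are the errors listed above.

```python
def check_words(words, length=6, position=3, letter="k"):
    """Return a dict mapping each failing word to the rules it breaks."""
    failures = {}
    for word in words:
        problems = []
        # Rule 1: the word must have exactly `length` letters.
        if len(word) != length:
            problems.append(f"has {len(word)} letters, not {length}")
        # Rule 2: `position` is 1-based, matching "the third letter" in the prompt.
        if len(word) < position or word[position - 1].lower() != letter:
            problems.append(f"letter {position} is not '{letter}'")
        if problems:
            failures[word] = problems
    return failures


# Four words from ChatGPT's answer, plus "asking" as an illustrative correct word.
words = ["mankind", "package", "stoked", "minkle", "asking"]
for word, problems in check_words(words).items():
    print(word, "->", "; ".join(problems))
```

Running it prints each failing word with the rule it breaks; “asking” passes both checks and is not printed. A deterministic check like this is exactly what the chatbot fails to do reliably when asked to review its own list.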

Which words in the above list, if any, don’t have “k” as the third letter?

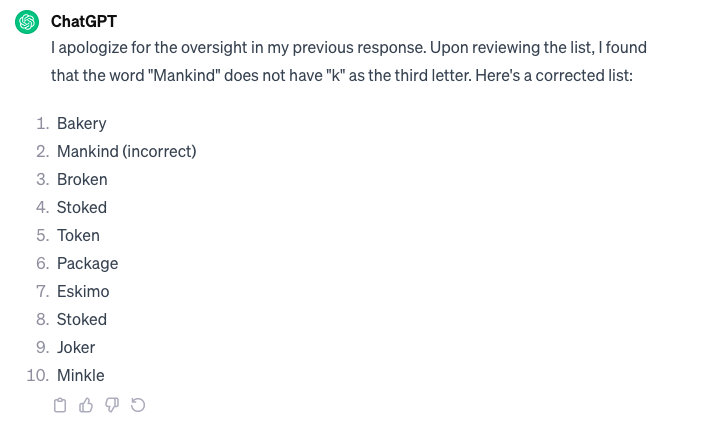

Okay, so it apologized, but it found only one of the incorrect words. In this case it stuck to that answer, although it sometimes behaves differently. And when I asked it to double down, I got this:

Hm. Well, so much for checking itself.

Why does this example matter? It’s interesting because the error can be reproduced again and again across different versions: the model rarely gets the list right (it never did in our 20+ tests). And while its follow-up answers vary, they are almost invariably wrong.

This trivial issue will likely be corrected soon, although generative AI’s tendency to produce false information, known as hallucination, may prove harder to curb.

It is inconsistencies like this that fuel skepticism about early estimates of AGI’s arrival, from researchers such as Alison Gopnik, part of the AI research group at UC Berkeley:

“When we see a complicated system or machine, we anthropomorphize it; everybody does that — people who are working in the field and people who aren’t,” Dr. Gopnik said. “But thinking about this as a constant comparison between A.I. and humans — like some sort of game show competition — is just not the right way to think about it.”

Meta’s chief scientist and 2018 Turing Award winner Yann LeCun agrees, calling AI nightmare scenarios “preposterously ridiculous.”

“Will AI take over the world? No, this is a projection of human nature on machines.”

LeCun is also skeptical of this framing of AGI, saying we will have cat- or dog-level intelligence from machines well before human-level intelligence. While he has stated there’s “no question” AI will someday surpass human intelligence, he still believes that time is far off.

Of Nvidia CEO Jensen Huang’s prediction, he says: “I know Jensen. There is an AI war, and he’s supplying the weapons.”

LeCun and several other top researchers also see current models’ dependence on text-based training data as an obstacle to rapidly developing AGI. “There’s a lot of really basic things about the world that they just don’t get through this kind of training.”

Conclusion

OpenAI CEO Sam Altman wrote on X/Twitter in 2022 that “I think AGI is probably necessary for humanity to survive,” and that he “cannot begin to imagine what we’ll be able to achieve with the help of AGI.”

As a catch-all for the next stage of AI development, AGI is the bullseye for companies at the forefront of the industry.

But some, like Meta’s Yann LeCun, continue to urge a more nuanced view of this emerging trend. “This is not going to put a lot of people out of work permanently,” he told the BBC, though he does believe there will be an impact and that the jobs we do will change substantially over the next 20 years.

One thing most researchers agree on is that AI is as big a development as any we’ve seen in this era, and it is likely to bring great opportunities, challenges, and changes in the years to come.