Have you ever been confused reading a DM, email , or text—without emojis or images, you’re lost as to the writer’s intent: be it direct, satire, joke, type-o, failed autocorrect?

Add images and a message’s meaning becomes clearer, but it’s still less certain than getting it on a video call. (And as we remember from Covid, this is still more prone to breakdown than face-to-face interaction.)

65–95% of a message’s emotional content comes outside the text itself, and this can also be true about the factual meaning. We read gestures, expressions, postures, and more by sight; pick up inflections, tone, pitch, and pacing with sound.

In other words, if a picture’s worth a thousand words, how many words are moving pictures worth? Moving pictures with sound? Now combine that with volumes of text, like your entire email history, or a company’s sales history.

Artificial Intelligences haven’t had this capacity, until now.

Multimodal AI is here—bringing additional context to the text-heavy world of Large Language Models (LLM). The generative AIs we used throughout 2023 were mostly unimodal—text in and text out, or text in and images or video out, or images in and text out.

The AI systems emerging late last year and currently being implemented in scale are multimodal: meaning they process multiple types of input at the same time.

And while we may soon ignore this distinction altogether (multimodal as default), here and now this is a highly significant change.

This article takes a look at this next big step in AI: what multimodal AI means, what it’s already doing, and what it will soon be doing in the future.

What Does Multimodal Mean (LLM, MM-LLM, or LMM)?

In 2024, multimodal is already an AI buzz word.

Google CEO Sundar Pichai has called 2024 Alphabet’s “Gemini era,” and frequently champions Gemini’s multimodal nature. A model trained with data from varying formats (see below), Gemini has the ability to respond in varying forms of output.

In an interview with Wired, Pichai explains:

“That’s why in Google Search we added multi-search, that’s why we did Google Lens [for visual search]. So with Gemini, which is natively multimodal, you can put images into it and then start asking it questions. That glimpse into the future is where it really shines.”

Multimodality is like giving AI a sensory upgrade, allowing it to process input more like we do.

Note that these newly created models are sometimes still called LLMs, but you may also see them referred to as multimodal (or multi-modal) large language models (MM-LLM), or LMMs (large multimodal models).

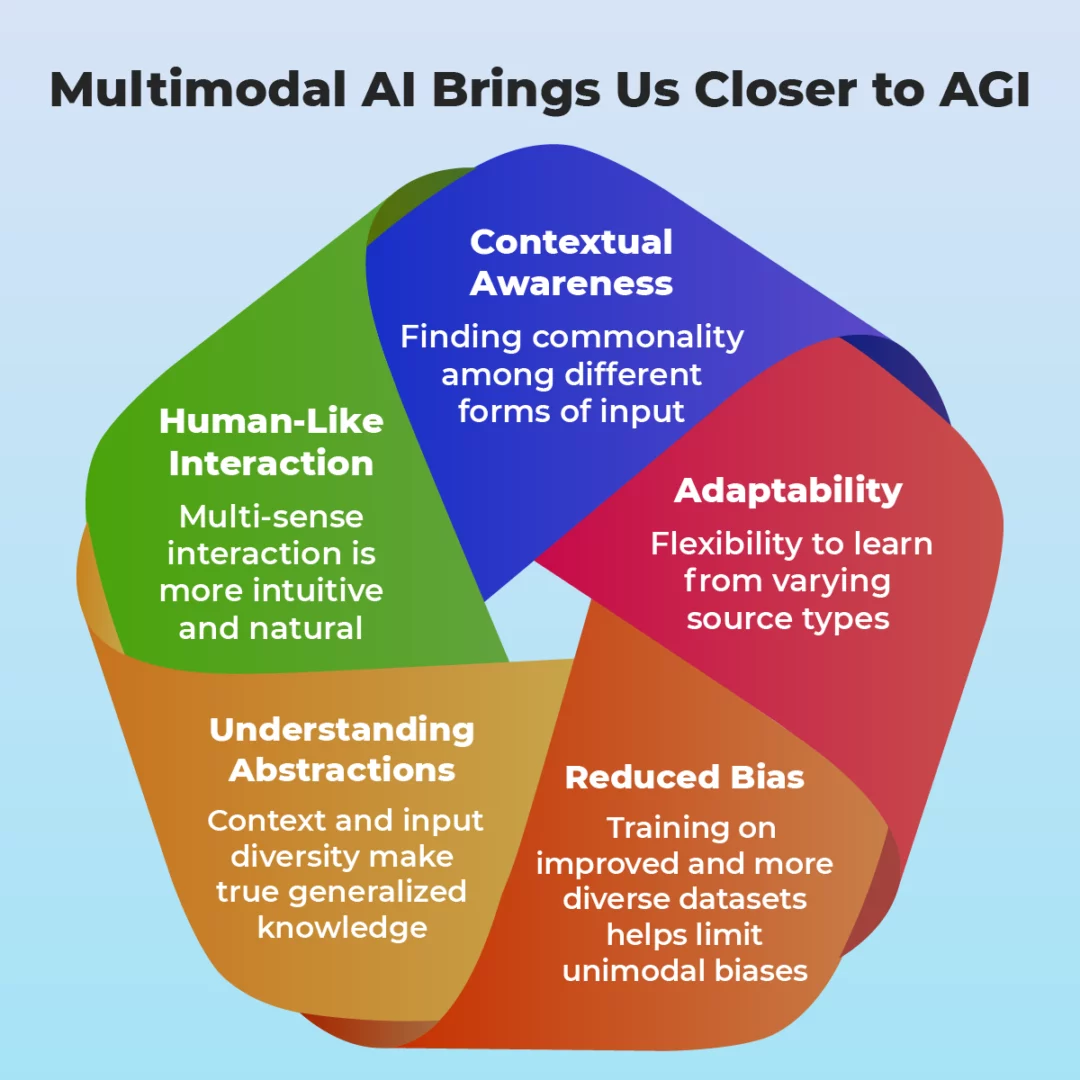

Context here is the goal—multimodal AIs make connections across these different data types, giving the potential for more human responses, along with the capacity to solve a wider array of problems.

For example, consider receiving one line of text from a customer:

“Your service was GREAT.”

Now consider it with coming from someone scowling, or audio or video of that line, spoken in a deeply sarcastic tone of voice.

Not only does the emphasis change, the meaning of the entire message is flipped.

Examples Already in Use

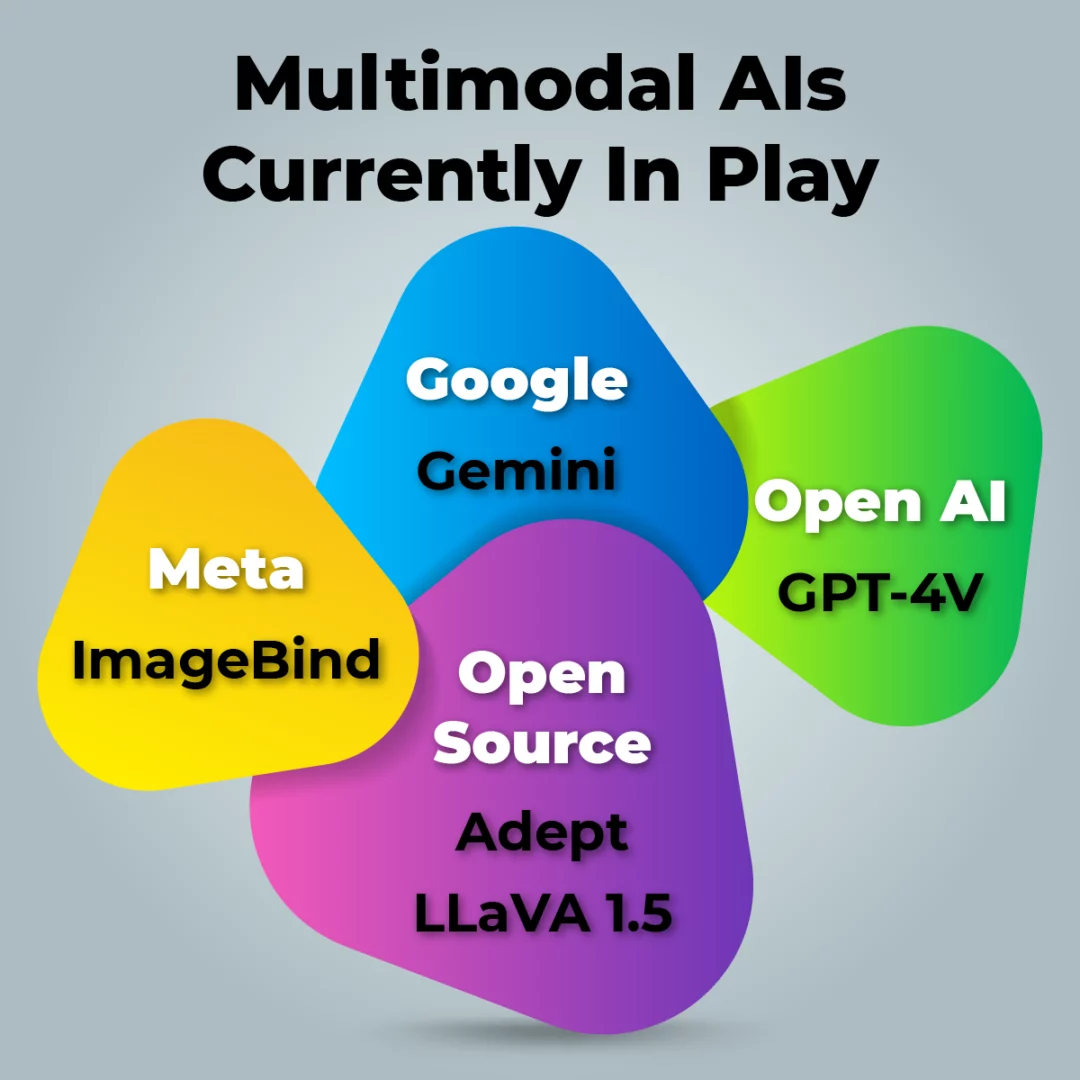

Multimodal AIs have long been in development, and a number are already out in varying capacities:

Gemini and GPT-4V

If you have an Android phone (Google Pixel 8 and Samsung Galaxy S24 as examples), there’s a good chance you’ve already got multimodal AI capacity.

Multimodal AIs first hit the mainstream late last year, with Google using Gemini to power their Bard chat (now being replaced as Gemini). Gemini is incorporated in the iOS Google app, Gmail, Google Docs, and more.

(Everywhere except the UK and Europe, that is, where the company is holding off for the EU AI Act to be finalized. For more on the EU AI Act, check out this piece from the PTP Report.)

When people say “Hey Google” in 2024, many will be utilizing multimodal AI for their responses, even if they have no clue what it is.

Note that multimodal AI is also central to the rash of for-pay AI services launched either late last year or this year, including OpenAI’s ChatGPT Plus (with access to the GPT-4V, the V for vision, their multimodal AI), and the Gemini multimodal AI in Workspace applications (like Google Docs, Sheets, Slides and more).

Multimodal AI Has Immediate Medical Impact

Multimodal AIs are already at work in the medical field, even aside from large grants being secured on its promise (for example ArteraAI’s $20 million funding for personalized cancer therapy).

Experimental programs are underway, like one conducted by University of Michigan researchers that uses multimodal AI to improve tuberculosis treatments.

“Our multimodal AI model accurately predicted treatment prognosis and outperformed existing models that focus on a narrow set of clinical data,” said lead researcher and Associate Professor Sriram Chandrasekaran.

Their model accepts multiple modalities including clinical tests, genomics, medical imaging, and drug prescriptions. By working across this spectrum of input types, the results find drug regiments that are effective even against drug-resistant TB, across varying countries.

The Continued Evolution of AI Training

Multimodal AIs also require a new approach to training. Rather than devouring massive quantities of text, these models must be multidimensional, needing input from the variety of data types we’ve been discussing.

This cross-modal learning in artificial intelligence creates a fusion of sensory modalities in AI, finding patterns even between inputs of entirely different kinds (as our minds do).

While this may amount to dumping vast quantities of different sorts of data in, it increasingly seems possible to train AIs as we ourselves learn: by experience.

This concept is examined in a startling NYU study. Researchers trained a multimodal AI using headcam video recordings of a child, from the age of six months through their second birthday.

While the video captured just 1% of the child’s daytime hours, the study found it was surprisingly effective at teaching language to a multimodal AI.

Says Wai Keen Vong, a computational cognitive researcher on the team:

“We show, for the first time, that a neural network trained on this developmentally realistic input from a single child can learn to link words to their visual counterparts.”

While the idea of camera-mounted toddlers training AIs may trigger reasonable unease, this kind of training opens the door to startling new possibilities: AIs trained on actual human experience, bringing models more in line with our actual, lived experience.

On to the Next Step

In terms of future developments, one thing is clear: multimodality moves us further down the road of Artificial General Intelligence (AGI). For years, this has consistently been listed by researchers as an essential step.

[For an in-depth introduction to AGI and what it could mean for us, check out this PTP Report. ]

As Shane Legg, DeepMind co-founder and lead AGI scientist explained to Dwarkesh Patel:

Multimodal AIs: “open out the sort of understanding that you see in language models into a much larger space of possibilities. And when people think back, they’ll think about, ‘Oh, those old-fashioned models, they just did like chat, they just did text’… the systems will start having a much more grounded understanding of the world…”

Okay, so that’s good, but let’s consider a real-world example from the future of multimodal AI: imagine you have a broken appliance. The issue is frustratingly trivial, and you suspect you can fix it, but you have no clue how to do it.

Many of us find Youtube the tool of choice in this situation.

And while that’s already much easier than in decades past (where you needed a repair manual or an expert), it’s still a pretty exhaustive process:

- You start with a search engine, looking for the right terms, get generalized understanding, and teach yourself the basics.

- Then you look for the actual solution to your problem—sorting through fluff and scams for reliable sources.

- If you’re lucky, you find a comparable solution. (Enough other people must have had this same problem.)

- Still here, you’re likely dealing with the wrong make or model, or a different issue that’s frustratingly close to your own. You may have to iterate here (steps 1–4 again) until you find something comparable.

- Failing any of the above, you have to start the process over from the top—this time looking for a repair technician.

With multimodal AIs, the process is more like this:

- Point your AI device (glasses? phone? pin?) at the broken appliance and capture image or video, while explaining what’s wrong.

- Show it, explain it, and let it find the correct solution right off, personalized to your situation.

Maybe the AI walks you through the fix (telling you where and how to buy the part or even buying it for you), or, if the problem persists or there’s no solution, it contacts the best reviewed place (by price) for the solution, uses your schedule to match appointment times and, pending approval, goes ahead and schedules the service.

While this may be a trivial example, consider engineers using multimodal AIs to help repair complex equipment in the field, potentially in hostile or difficult environments where time is at issue, working with machinery that cannot fail.

Conclusion

We’ve looked at what multimodal AI refers to, examples in use, and how it opens the door for even more useful tools, including the development of AGI. But with its rapid (and often opaque) rollout and adoption, it’s also triggering serious concerns.

Some examples are outlined in the World Health Organization (WHO)’s publication: “Ethics and governance of artificial intelligence for health: Guidance on large multi-modal models” (geared at healthcare, which as we’ve seen is an early-adopter).

With genAI “adopted faster than any consumer application in history,” the piece outlines several potential concerns, including an undermining of human authority in the fields of science and medicine.

The more human-like and useful the technology, the more potentially dangerous it becomes.

2024 may already be the year of multimodal AI, as it continues to improve our genAIs. By year’s end many of us will be using it, whether we know what it is or not.