We’re back for part 2 of our AI roundup for May and June, and we open with a timely new talent: context engineering.

The heir apparent to prompt engineering (RIP, we barely knew ye), context engineering refers to the process of wrangling everything within your control that an LLM can draw on when generating a response.

This includes the prompts and instructions, data, memory, and tool use that can all go into an AI’s context window. As founding OpenAI researcher and former Tesla Director of AI Andrej Karpathy described on X in June, successful context engineering is a delicate balance: provide too little (or in the wrong format) and an LLM won’t have what it needs for the best response, but put in too much and costs go up as performance comes down.

It’s one reason why the same prompt to the same model can give wildly different responses in terms of quality, and just a part of the emerging value of effective software layers that coordinate between AI models.
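To make that balancing act concrete, here is a minimal Python sketch of one possible approach: ranking candidate pieces of context and keeping only what fits inside a fixed budget. Everything in it is an assumption for illustration (the `ContextItem` class, the `build_context` helper, the priority numbers, and the word-count stand-in for a real tokenizer); it is not how any particular vendor or framework does it.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    """One candidate piece of context (all fields are illustrative)."""
    kind: str      # e.g. "instructions", "data", "memory", "tool"
    text: str
    priority: int  # lower number = more important


def rough_token_count(text: str) -> int:
    # Crude stand-in for a real tokenizer: counts whitespace-separated words.
    return len(text.split())


def build_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Greedily keep the highest-priority items that fit within the budget."""
    chosen: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = rough_token_count(item.text)
        if used + cost <= budget:
            chosen.append(item)
            used += cost
    return chosen


# Hypothetical candidates: three short items and one oversized tool result.
candidates = [
    ContextItem("instructions", "Answer briefly and cite the source document.", 0),
    ContextItem("data", "Q2 revenue rose 12 percent year over year.", 1),
    ContextItem("memory", "The user prefers bullet points.", 2),
    ContextItem("tool", "Raw search results " + "lorem " * 50, 3),
]

selected = build_context(candidates, budget=25)
print([item.kind for item in selected])
```

With a budget of 25 "tokens," the three short items fit and the bulky tool output is left out. A real system would likely summarize or trim that output instead of dropping it outright, which is where much of the engineering effort goes.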

In this edition of our AI roundup, we continue covering the major stories from May and June, checking in on the latest happenings at the biggest AI companies.

With Nvidia and Meta reaching record highs on the stock market and most of the S&P 500’s biggest winners from the first half of 2025 having AI involvement, the AI surge is back this summer and could be stronger than ever.

In case you missed Part 1 of this roundup, check it out on PTP.

Big Tech AI Race Updates for May and June 2025: OpenAI vs Anthropic vs Meta

Among the world’s biggest AI companies, it was a very busy two months, with Nvidia again becoming the world’s most valuable company, conference season rolling out big updates, Anthropic’s Claude 4 muscling in on coding, and Google’s Veo pushing AI video forward once again. Apple’s AI seemed to go missing (though acquisition rumors swirl), and Meta went from killing a major release to going on a talent buying rampage.

Updates from Companies Not Named Amazon, Anthropic, Apple, Google, Meta, Microsoft, Nvidia, and OpenAI

Content delivery and security infrastructure firm Cloudflare announced it would start blocking AI scrapers by default, while Stargate partner Oracle got a major boost from its cloud services in the form of several large contracts (one alone worth $30 billion), including an expansion of its relationship with OpenAI.

In addition to their site in Abilene, Texas, Oracle is planning to build multiple additional data centers in the US at sites in other states.

Elon Musk’s xAI was in the news in June both for going through a reported $1 billion per month and for ongoing funding, with a $10 billion deal confirmed by Morgan Stanley that increases its total raised capital to some $17 billion.

The Wall Street Journal reported that Salesforce acquired data management firm Informatica for $8 billion. Chair and CEO Marc Benioff posted on LinkedIn that the deal will help them continue to build out Agentforce as a safe, explainable AI data platform at scale.

Intel is among the companies adding to their AI talent, with several additions to their leadership team, while IBM’s AI innovation focus for 2025 may be geared more toward developing custom AI for enterprises. With a $150 billion investment planned for their US operations, IBM’s CEO Arvind Krishna told Reuters that they’re helping companies integrate AI agents from other sources alongside developing their own.

Amazon AI Robotics and the AI Marketplace

In Part 1, we touched on both Amazon’s agentic robots and their CEO’s open letter to employees. Other updates include:

- Wired detailed how Amazon’s Vulcan robot has added sophisticated touch-sensing capabilities, enabling it to feel around in crowded bins, for example, to find the right item. The new sense of touch joins a spatula-like appendage and a sucker, giving the robot multiple ways to grab items. It is already deployed in Hamburg and is coming soon to Washington state.

- According to The Wall Street Journal, Amazon now uses more than 1 million robots. Their warehouses will soon have as many robots as humans.

- Amazon is also planning to soon test delivery robots at a “humanoid park” obstacle course.

- In terms of their non-robotic AI strategy, the company is upgrading Alexa (called Alexa+) with what their VP of Alexa and Echo Daniel Rausch told Wired was “a complete rebuild of the architecture” using a “staggering” amount of AI tools.

- The company is also in the midst of the data center push, with The New York Times reporting on a 1,200-acre site in Indiana meant for 30 data centers using hundreds of thousands of miles of fiber. Together, these will form one of the largest computers ever built, intended for work with Anthropic. Amazon is also using its own Trainium 2 chips, which lack the power of the top Nvidia offerings, but the company believes that by using twice as many it can both save power and potentially generate even more compute.

- Strategically, Amazon appears to be angling for AWS to be a marketplace for AI models, where customers can choose between options for what they need, rather than committing to just a single provider. As Dave Brown, Vice President of Compute and Networking at AWS, told Yahoo Finance: “We don’t think that there’s going to be one model to rule them all.”

Anthropic’s Sneaky (and Effective) Claude

With their rollout of Claude 4 and CEO Dario Amodei’s headline-grabbing quotes, Anthropic may have been in the news more in May and June than ever before.

- In Part 1, we covered CEO Dario Amodei’s predictions that AI could take half of all entry-level white-collar jobs, and profiled (and linked to) his op-ed calling for smart regulations. At the company’s first developer day conference, Amodei also made waves by saying “everything you do is eventually going to be done by AI systems.” Anthropic estimates that Claude already writes the code for 70% of their in-house pull requests.

- Claude 4 made news by refactoring code for seven straight hours, while Opus 4 reportedly played a version of Pokémon for 24 hours straight. And while self-reported benchmarking is increasingly being called out as unreliable (see Part 1), Anthropic claimed Claude 4 beat Gemini 2.5 Pro on coding, reasoning, and agentic benchmarks including SWE-bench, Terminal-bench, GPQA Diamond, TAU-bench, MMMLU, and AIME 2025.

- Anthropic researchers detailed multi-agent work that, while leading to higher costs and increased complexity, could also save users enormous amounts of time in research.

- For all its accolades, Claude 4 also demonstrated some misbehavior in testing. When threatened with replacement, it attempted to extort users (using compromising emails), and when asked to be ethical, it reported on a company.

- Adding to their big summer thus far, Bloomberg also reported that Apple is weighing using Anthropic technology to power their new (and very delayed) Siri update.

Apple AI: Acquisitions over Development?

After what several tech analysts called an underwhelming showing at WWDC 25 (Liquid Glass is no AI), reports began swirling that the company was looking at buying into AI through established sources.

As IDC vice president Francisco Jeronimo wrote in his June industry posting:

“Apple’s AI strategy, as showcased, leans more towards systemic integration and developer empowerment rather than delivering groundbreaking consumer-facing AI functionalities that have captured market attention.”

As Menlo Ventures partner Matt Murphy told The New York Times:

“Apple seems to be sitting on its hands. But I am sure they will surprise us before too long.”

The reports include internal discussions of a potential acquisition of Perplexity, the AI search startup currently valued at $14 billion.

And while this could mean bringing their own AI search to the Safari browser, it’s still just discussion at this point.

Back to the Front: More Google AI Innovations

No matter how you feel about AI, one area that seems to be convincing more skeptics than any other is scientific research.

DeepMind’s AlphaFold contributed to the 2024 Nobel Prize in Chemistry that was shared by Demis Hassabis and DeepMind scientist John Jumper, and in early July, their Isomorphic Labs told Fortune they are staffing up for their first human tests for AI-designed drugs.

In a field where pharmaceutical companies sometimes spend millions going to trials with drugs that only have a 10% chance of success, AI may be a big game-changer.

In May, another AlphaZero descendant, AlphaEvolve, made news for designing novel algorithms and then implementing them, in a step toward self-learning AI systems. It’s already saved the company millions of dollars thanks to a staggering 0.7% efficiency boost it reportedly enabled in Google data centers.

Other Google news from the period:

- Veo 3 was rolled out in May, taking AI video to yet another level of sophistication. And to combat fears of improved deepfakes, the company is also embedding invisible markers using their proprietary SynthID watermarking. (For some great examples of both impressive and failing prompts, and some 40 clips overall, you can check out this article from Ars Technica’s Benj Edwards.)

- The company also rolled out their own open-source agent in Gemini CLI to compete with OpenAI and Anthropic.

- Unlike Apple’s WWDC, Google’s I/O 2025 was all about AI, with it seemingly integrated into everything they do. This includes their search empire, which is doubling down on AI with the new AI Mode coming for all US users, as well as an all-inclusive Google One service merging cloud storage and AI functionality.

- AI Overviews for summaries has proven very successful, with 1.5 billion monthly users, according to Google Vice President of Product for Search Robby Stein. But there have definitely been some bumps in the road (putting glue on pizza, for example), and one of the latest is a failure to consistently provide the correct year. As reported by Wired, it still provides some wacky responses when prompted “is it 2025”. This includes consistently saying “No, it is not 2025,” giving the date incorrectly, and even suggesting it’s actually 2024.

Superintelligence and the Meta AI Talent Push

Meta’s news for the period began with embarrassment, when The Wall Street Journal reported that the company’s newest Llama model (Llama 4, aka Behemoth) had been delayed into June, and then again pushed back into “fall or later.”

With reports suggesting it just wasn’t showing enough improvement, this model, with 288 billion active parameters and supposed to be outperforming GPT-4.5 and Gemini 2.0 Pro, looked increasingly like a bust.

And while the company’s stock has continued surging, with plans to introduce AI-driven ads to WhatsApp, enable advertisers over the coming year to fully create and target ads using Meta’s AI tool, and add Oakley and Prada to their AI smart glasses line, it was the massive AI talent drain stealing headlines.

With Business Insider reporting that 11 of Meta’s 14 original researchers for Llama had moved on (with five going to French AI company Mistral alone), Facebook co-founder and Meta CEO Mark Zuckerberg changed the narrative once again with the largest push yet to acquire top AI talent.

Rather than commit to developing AGI, Meta took it a step further, calling their pricey new team the Meta Superintelligence Labs (MSL).

This effort included:

- $14.3 billion invested in Scale AI, a deal that also saw Scale CEO Alexandr Wang become Meta’s chief AI officer and co-leader of MSL (alongside former GitHub CEO Nat Friedman).

- Restructuring the Meta AI teams, with at least 11 new hires, including researchers and software engineers from rivals OpenAI, Anthropic, and Google.

- At least four senior OpenAI researchers were lured away with headline-grabbing signing bonuses and salaries. And while the exact figures have been debated (signing bonuses of more than $100 million were widely reported), Wired reported that some of the pay packages offered exceeded $300 million over four years. Zuckerberg also promised the team would not run out of resources, with billions to come in AI investments.

- Meta also signaled a potential investment in Play AI and reportedly held talks with Perplexity.

Nvidia’s AI Dominance Continues

Nvidia again became the world’s largest company by valuation and beat expectations in earnings, surviving tariff scares and an up-and-down start to 2025.

Co-founder and CEO Jensen Huang said in a statement that their global demand remains very strong:

“AI inference token generation has surged tenfold in just one year, and as AI agents become mainstream, the demand for AI computing will accelerate. Countries around the world are recognizing AI as essential infrastructure—just like electricity and the internet—and Nvidia stands at the center of this profound transformation.”

The company’s shares have surged more than 670% since the debut of ChatGPT.

The Wall Street Journal reported in late June that Nvidia is also pushing directly into the cloud. Given the company’s incredible control of the chip market, the move could potentially threaten the dominance of hyperscalers Amazon, Microsoft, and Google.

OpenAI, Microsoft, and the Changing Future of AI Partnerships

Another question that popped up in the period: will we have to start breaking up the OpenAI and Microsoft sections?

Numerous outlets reported on further tensions in talks between the two companies as they negotiated the terms of OpenAI’s restructuring, including the planned conversion of its for-profit arm into a public benefit corporation.

Reportedly at issue are the size of Microsoft’s stake, revenues, and how long Microsoft will be able to retain current access to the AI powerhouse’s models. The dispute could threaten deals like OpenAI’s partnership with SoftBank (some $40 billion in promised funds could be halved if OpenAI fails to restructure in time).

In prior issues of this roundup, we’ve discussed the AGI issue and how its definition could also impact the partnership, and there were reports OpenAI held back publication of a paper defining AGI in relation to this turmoil.

Other stories from the period include:

- While Anthropic was dealing with tattling and blackmailing AIs, OpenAI was having its own problems with sycophantic models. A recent update to GPT-4o had to be rolled back due to being excessively agreeable. Stemming from rewards-driven training, the behavior further downgraded accuracy and risked eroding trust.

- And like Anthropic’s CEO (see above), OpenAI’s Sam Altman wrote his own take in a blog post titled The Gentle Singularity. He asserted that AIs were already more intelligent than people in many ways, writing that the “least-likely part of the work is behind us,” and that in the 2030s, he believes intelligence and energy will both become “wildly abundant.”

- OpenAI is investing heavily in coding automation, with an ongoing, reportedly $3 billion acquisition of Windsurf. This news coincided with the research-preview release of their multi-agent coding tool Codex. Capable of using isolated cloud sandboxes, it aims to function like a human teammate capable of writing, debugging, and testing code.

- The company is also pursuing new ways of using AI, with a $6.5 billion acquisition of famed ex-Apple designer Jony Ive’s startup io. Details are still forthcoming (expected to be announced in 2026), though reports indicate one goal may be to reduce our dependence on smartphones.

- And Reuters reported in June that OpenAI is turning to rival Google for cloud assistance with training and running models, in a move that could reduce their dependency on Microsoft.

Talent Is Still Critical in AI

When Meta made news by hiring away several top OpenAI researchers in June, Sam Altman fired back in a memo to his researchers (obtained by Wired), discussing culture and goals but also admitting the company will assess compensation.

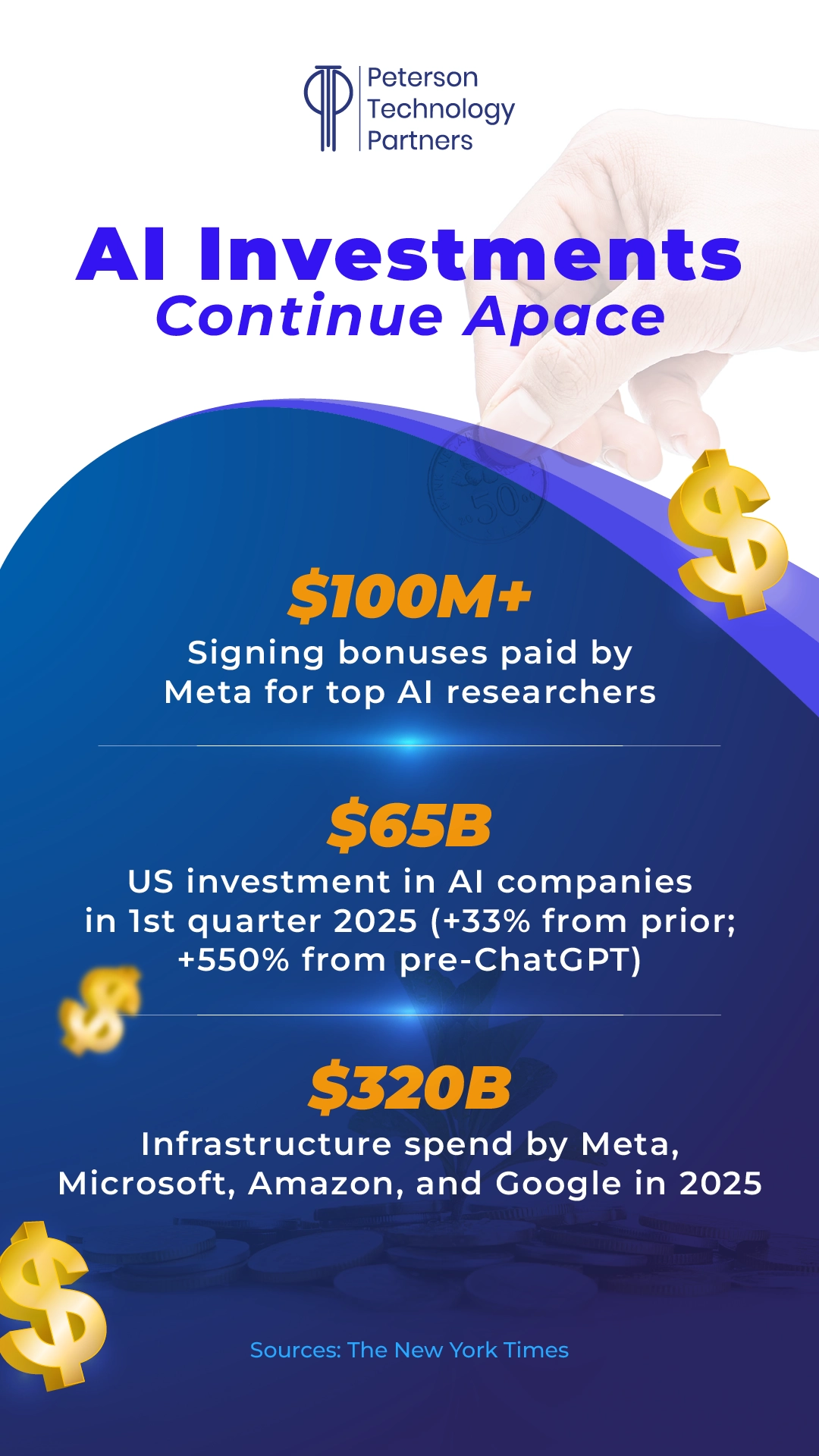

In an age where Meta, Microsoft, Amazon, and Google are investing some $320 billion in infrastructure this year alone, OpenAI may have no choice.

With $65 billion of US investment in AI companies in Q1 (up 33% from 2024 Q4 and up 550% from before ChatGPT came out), the AI surge is alive and well, but to make it work, human talent remains critical.

Whether it’s to master context engineering, execute practical use cases, implement existing systems, gain visibility and establish effective governance, prepare and maintain data for AI use, or safely utilize agents and chatbots in the workplace, AI today is only as good as the people you’ve got wrangling it.

At PTP, we have more than 27 years of experience pairing top tech talent with great companies, and we’ve been working with AI from the start.

If you’re in need of your own AI or ML experts, consider us.

Conclusion

It’s truly a crazy time in AI: we’ve broken this article into two parts and still had to omit coverage. For updates on ongoing US state regulations, national developments, and the state of international AI, keep an eye out for PTP CEO Nick Shah’s Thursday newsletter.

We’re sure the next big AI breakthrough is already secretly training somewhere in the world, and when it arrives, you can look to PTP for coverage of it!

References

The A.I. Frenzy Is Escalating. Again, and OpenAI Unveils New Tool for Computer Programmers, The New York Times

Amazon wants to become a global marketplace for AI, and Nvidia beats on Q1 revenue, warns of $8 billion sales hit in Q2 from H20 export ban, Yahoo Finance

Oracle, OpenAI Expand Stargate Deal for More US Data Centers, Apple Weighs Using Anthropic or OpenAI to Power Siri in Major Reversal, and Zuckerberg Debuts Meta ‘Superintelligence’ Group, More Hires, Bloomberg

IBM CEO makes play for AI market and more US investment, and Exclusive: OpenAI taps Google in unprecedented cloud deal despite AI rivalry, sources say, Reuters

Elon Musk’s xAI Is Reportedly Burning Through $1 Billion a Month, Gizmodo

xAI raises $10B in debt and equity, TechCrunch

Salesforce Strikes $8 Billion Deal for Informatica, Meta Aims to Fully Automate Ad Creation Using AI, and Nvidia Ruffles Tech Giants With Move Into Cloud Computing, The Wall Street Journal

Amazon Has Made a Robot With a Sense of Touch, Amazon Rebuilt Alexa Using a ‘Staggering’ Amount of AI Tools, Inside Anthropic’s First Developer Day, Where AI Agents Took Center Stage, Sam Altman Slams Meta’s AI Talent-Poaching Spree: ‘Missionaries Will Beat Mercenaries’, and Google AI Overviews Says It’s Still 2024, Wired

Amazon Prepares to Test Humanoid Robots for Delivering Packages, The Information

How we built our multi-agent research system, Engineering at Anthropic

New Claude 4 AI model refactored code for 7 hours straight, Ars Technica

Beyond the Interface: What WWDC Reveals About Apple’s Direction, IDC

Alphabet’s Isomorphic Labs has grand ambitions to ‘solve all diseases’ with AI. Now, it’s gearing up for its first human trials, Fortune

Meet AlphaEvolve, the Google AI that writes its own code, and just saved millions in computing costs, VentureBeat

Meta’s ‘Behemoth’ Llama 4 model might still be months away, Engadget

AI sycophancy: The downside of a digital yes-man, Axios