A Quick Thank You to Our Readers

Before we kick off this article, we want to say thank you to all our engaged readers! The PTP Report surpassed 13,000 subscribers last week, at a growth of nearly 12% over the past year. We continue to aim to bring you the tech news breakdowns and insights that can best help you stay in the know in this fast-moving climate. Drop us a line if you have unexplored topics you think we should cover, either via LinkedIn or our PTP contact page.

UC Berkeley computer science professor Dawn Song believes we’re hitting an inflection point at the juncture of cybersecurity and AI. As she pointed out to Wired’s Will Knight in an article this week: “The cybersecurity capabilities of frontier models have increased drastically in the past few months.”

She was involved in creating a benchmark called CyberGym to track how LLMs find vulnerabilities in large open-source coding projects. Back in July, Claude Sonnet 4 found about 20%. By October, Claude Sonnet 4.5 identified 30%. The tools are also finding new bugs that haven’t been previously known about or made public.

Professor Song added: “AI agents are able to find zero-days, and at a very low cost.”

But this ability cuts both ways. With hackers able to leverage the same tools, the push is on to get AI more involved, both in cybersecurity and in generating more secure code in the first place.

For today’s PTP Report, we look at the merger of AI and cybersecurity in the new year: how AI is improving defenses, accelerating attacks, and introducing new concerns through its use (sanctioned and not) across businesses.

With a World Economic Forum (WEF) study released last week revealing that 94% of leaders view AI as the most significant driver of cybersecurity change for this year, the timing feels right.

AI Cybersecurity on Three Fronts

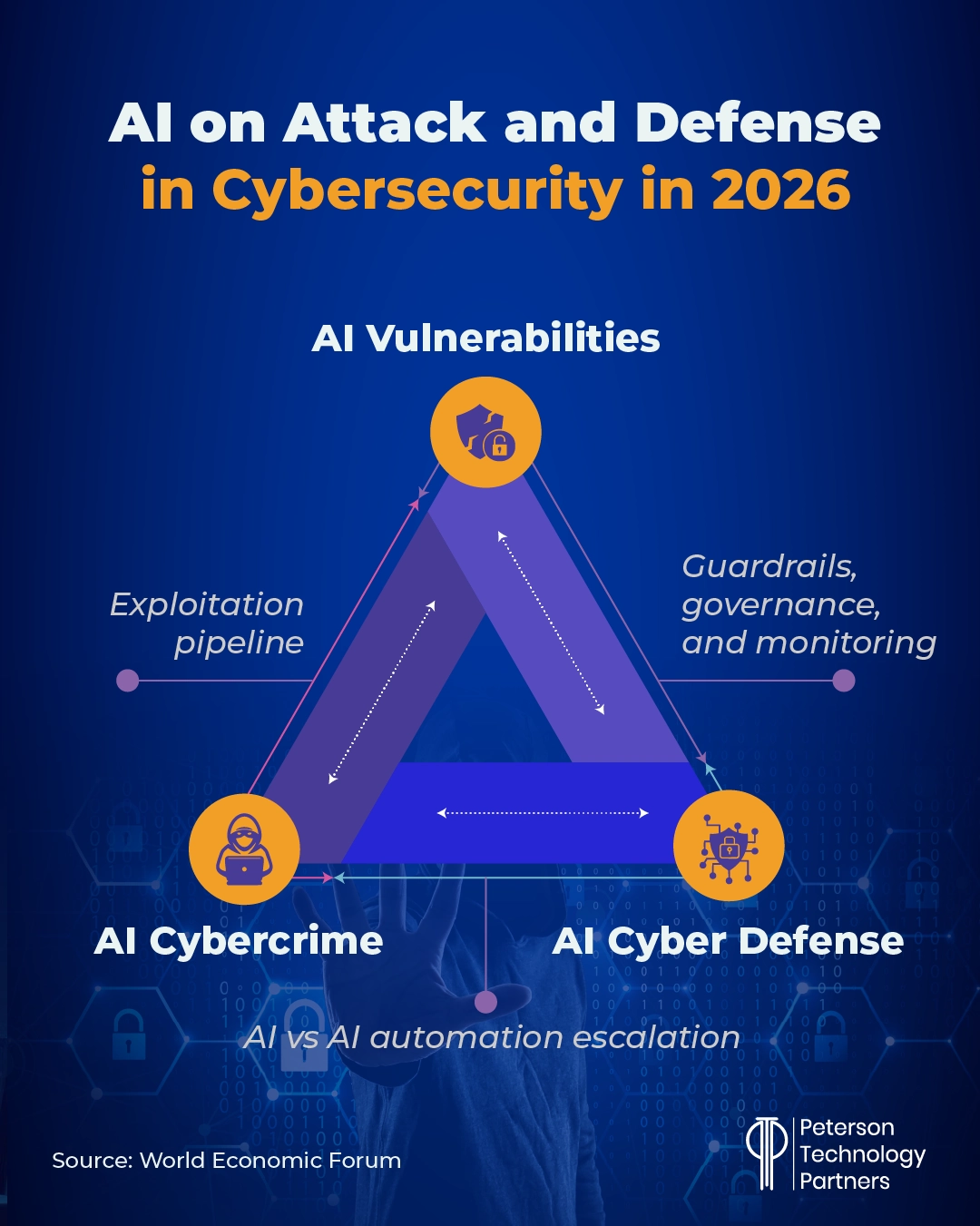

The WEF report also highlights AI’s impact in three areas as it is “supercharging the cyber arms race”:

- Cybercrime: cyber-enabled fraud with increasing attack scale and speed through automation

- Cyber defenses: anomaly detection, response, and analytics

- Vulnerabilities introduced by AI: data leaks, model misuse, and accountability and oversight concerns

The stats embrace all sides of this triangle.

AI vulnerabilitiesworry leaders more than ever before (see below), and for good reason. Netskope tracked genAI data violations and found they had more than doubled last year, led by user uploads of regulated data including personal, financial, or healthcare.

On the cyber attack side, directly AI-assisted cybercrime grew by some 72% in 2025 (per IBM), while phishing has also exploded (as we’ve reported multiple times in our cybersecurity news roundups), growing by some 1,265% with the assistance of AI. Varonis reported that AI-bolstered phishing emails get opened 78% of the time.

For good news, AI is also augmenting defense, especially for detection, response, and analysis at scale. The WEF report found that 77% of organizations have implemented AI in security, primarily to detect phishing (52%), for intrusion response (46%), and for user behavior analytics (40%).

Securing AI Systems Remains Critical

Our title hits on secure AI, but it could have also mentioned shadow AI security. Shadow AI refers to AI that’s being used all over organizations by employees on their own initiative (or outside of official, regulated channels). It’s rampant today in the American workplace. An MIT study from last summer found shadow AI at 90% of organizations, often with employees hiding their usage from IT.

The risks here come largely from unregulated input and output, with people uploading sensitive data without permission for AI to work on it and using returns that may not be sufficiently checked according to an organization’s policies.

Google Cloud Security’s Cybersecurity Forecast 2026 report sees the shadow AI problem also shifting into a shadow agent problem over the coming year. As autonomous agents improve, workers will see value in also deploying them outside of official channels to work on tasks, opening the door to “uncontrolled pipelines for sensitive data” as well as compliance violations and IP theft.

But the risks extend beyond that. We’ve covered numerous instances over the past year of agents going off the rails without sufficient protections and have also profiled prompt injection at length.

[Check out a prior edition for details on the so-called “lethal trifecta” for prompt injection.]

The WEF report covers the challenges that agents bring to traditional security frameworks, by exploding the volume of identities and connections and making interactions much harder to manage.

This propagation of access adds even more importance to adopting zero trust principles, as companies work at getting their hands around AI vulnerabilities overall.

One plus: far more organizations have processes in place to assess the security of AI tools before deployment in 2026 (64%) than did at the start of 2025 (37%). An additional 40% report conducting periodic or ongoing reviews of AI tools vs a one-time assessment.

CSO Online echoes this operationally: CISOs list securing the organization’s AI deployments and reining in unsanctioned AI as explicit priorities, warning that shadow AI can mean loss of data control, expanded attack surface, compliance risk, IP leakage, and reputational damage.

AI-Driven Cyberattacks in 2026: Better Fraud, Scaling, and Automation

In May 2024, one company’s CEO appeared to create and even attend a video Teams meeting, asking for funds and critical information. While it looked and sounded like the CEO, the attack was unsuccessful due to content, helping the team realize they were dealing with a deepfake and not their actual CEO.

But earlier that year a similar approach fared far better, when a video call including a deepfaked version of the CFO fooled an employee at a multinational financial firm into paying out $25 million to the attackers.

We wrote in a recent roundup about a deepfake Nvidia call that ran parallel to the real thing but actually landed eight-times more viewers as part of an audacious crypto scam.

AI’s ability to successfully impersonate us grew by leaps and bounds in 2025 and that trend will definitely continue in the year to come.

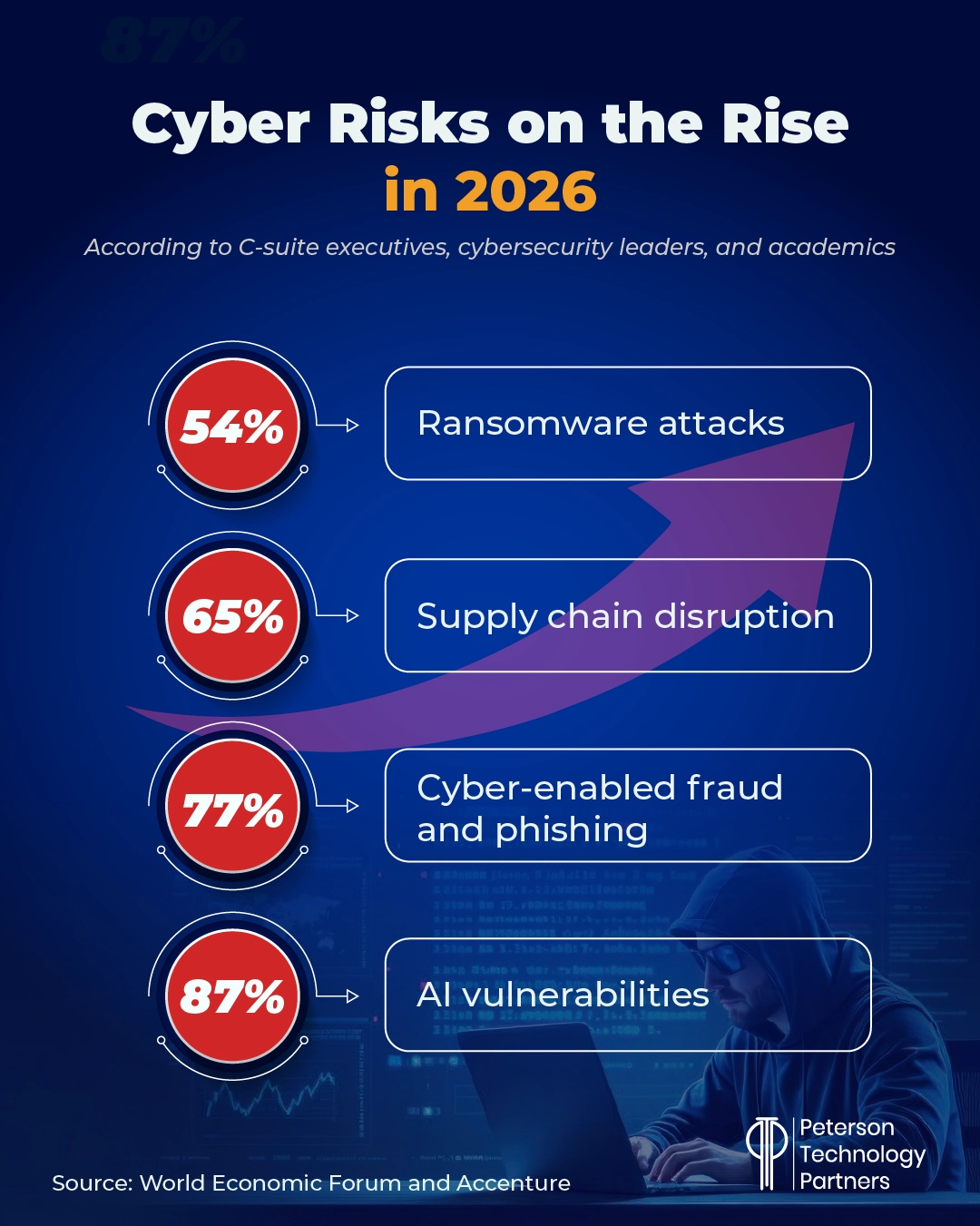

The WEF report found 77% of respondents seeing an increase in cyber-enabled fraud and phishing, with 73% reporting someone in their personal network being impacted by it.

As AI increasingly penetrates ransomware and data breaches (IBM found 16% included AI in their 2025 report with the Ponemon Institute), and agentic attacks increasingly bubble up, the difficult truth is that the greatest vulnerability may come from how easily genAI can generate convincing fraudulent materials.

As the rush of attacks targeting company Salesforce instances showed last year, it doesn’t matter how strong your security is if a convincing fake approach can convince your employees to open the door, override security protocols, or make connections directly to an attacker.

AI in SOC: Trust, Skills, and Oversight Are Problems

As mentioned above, the arms race is well underway. AI and ML are successfully being used to detect anomalies, for example, which can rapidly accelerate a team’s response to ransomware. By responding quickly, organizations can lessen the severity of attacks and catch attackers ahead of lateral movement that can lead to additional attacks downstream and supply-chain risks.

There have been impressive strides in fraud detection AI, and it excels at processing large volumes of data to spot patterns, accelerating initial triage, and as in other areas of work, taking on repetitive, manual, high-volume work that gets checked anyway. But effective oversight and governance are critical, and often a sticking point.

As in our opening, AI is also rapidly improving in its ability to identity vulnerabilities, though these techniques are not being adopted at scale yet for defense.

BCG found that the vast majority of organizations surveyed (88%) plan to implement AI-driven cyber defense tools.

But challenges remain, too, for companies looking to match attacker AI with their own smarter defenses. These include a critical cybersecurity skills gap (not new, but stubbornly persistent), flagged as a major roadblock in the WEF study by 54% of respondents.

Also contributing: bottlenecks for human validation and oversight (41%) and uncertainty around the risks of AI solutions (39%).

CEO vs CISO Priorities Differ on Security for the New Year

The WEF report demonstrates the growing concern organizations have over social engineering attacks.

Cyber-enabled fraud and phishing was the second overall concern for the CEOs surveyed in 2025 but became their top priority in 2026, moving ahead of both ransomware (first in 2025) and AI vulnerabilities (second for this group in 2026).

CISOs, meanwhile, demonstrated some variation here. While both groups had ransomware as the top risk last year, it remained at the top of the CISO list, with supply chain cyber threatscoming in second.

The change may show a divide in the groups about the trigger for what most keeps them up night, though many of the end results of the two attack types are the same: exposure of data, financial losses, brand damage, and a lockdown of essential functionalities.

AI is also being used on both sides of the fight for each.

German multinational financial services company Allianz surveyed customers from various-sized businesses across 97 countries and 23 industry sectors, and found that cyber incidents (including cybercrime, IT outages, malware, ransomware, and data breaches) remain the top risk for businesses around the globe.

But this has been the case for many years in a row. More surprising is that AI leapt from tenth in 2025 to second this year. This includes implementation challenges, liability exposures, and misinformation/disinformation.

It also shows the extent to which AI and cybersecurity are dominating the concerns of leaders across industries and around the world.

Conclusion: Implement Effective AI Risk Management and Close Skills Gaps

Two things emerge time and time again: AI governance is absolutely critical to using the technology effectively and safely, and skills gaps remain a major roadblock.

Skills gaps slow a company’s ability to implement solutions and also open the door to these issues in the first place.

At PTP, we can help you solve both. We’re proudly AI-first, and love to help companies implement effective, safe AI solutions.

We also have nearly 30 years of experience providing top tech talent to companies in need. If you’re in need of closing key cybersecurity skills gaps, consider us. We have access to great professionals, onshore, offshore, and nearshore.

AI in cybersecurity isn’t a trend for 2026. It’s a fundamental reality in the future of tech.

References

AI’s Hacking Skills Are Approaching an ‘Inflection Point’, Wired

Global Cybersecurity Outlook 2026, World Economic Forum with Accenture

Allianz Risk Barometer for 2026, Allianz Commercial

Good Practices in Cyber Risk Regulation and Supervision, International Monetary Fund

CISOs’ top 10 cybersecurity priorities for 2026, CSO

Generative AI data violations more than doubled last year, IT Pro

AI Is Raising the Stakes in Cybersecurity, Boston Consulting Group

The ‘shadow AI economy’ is booming: Workers at 90% of companies say they use chatbots, but most of them are hiding it from IT, Fortune

IBM Report: 13% Of Organizations Reported Breaches Of AI Models Or Applications, 97% Of Which Reported Lacking Proper AI Access Controls, IBM

FAQs

How widely is AI really being used in cybersecurity today?

While it’s frequently suggested that AI in defense is lagging AI in attack, multiple sources put the adoption rate for companies in the 70% range. The WEF survey for 2026 specifies use primarily for phishing detection (52%), intrusion/anomaly response (46%), and user analytics (40%) leading the way. 94% of respondents also saw AI as the most significant change driver in cybersecurity this year.

How are most attackers using AI today?

Without much doubt, it’s for fraud and social engineering attacks. This includes better phishing, more direct personalization, increasingly disturbing impersonation (deepfakes), and a fast iteration on what’s working. While Google Mandiant and Anthropic have uncovered agentic AI use in attacks, this is still scaling up. AI’s ability to identify bugs in software is also certain to be weaponized.

What is prompt injection, and why does it matter to companies?

As just one form of AI vulnerability that companies are exposed to today, prompt injection is like social engineering for AI (meets SQL injection). It involves attackers submitting prompts to your LLM covertly, to get it to perform unintended actions. This can be extremely easy where systems consume external resources (like emails, websites, or PDFs). Leaders should care because it can be very hard to defend against, often attacks interfaces that connect AI to real systems (like ticketing, email, CRMs, etc.), and can be used to expose data, among other things.