Our systems aren’t getting any less complex, any time soon.

With increased distribution across the cloud, AI integrations demanding focus, and rampant cybercrime, it’s a wonder CTOs get any sleep at night. Just AI, that mystery box we’re plugging into everything, relies on networks of other systems to go, and go well.

Got a good map? Hopefully yes, and even then, with everything clicking and humming like it’s supposed to, and locked down (so far as known), the results are unlikely to be all that predictable.

Chaos, somewhere on that map, is always lurking.

Enter chaos engineering (CE, ala chaotic evil, or chaos testing in DevOps), the act of intentionally wreaking havoc on your own systems.

In production.

This extreme form of testing is arguably as old as computing itself (“huh, production’s pretty unique; I wonder what will happen if we…”) though the terminology and current practices first rose to prominence in the 2010s, led by streaming giant Netflix.

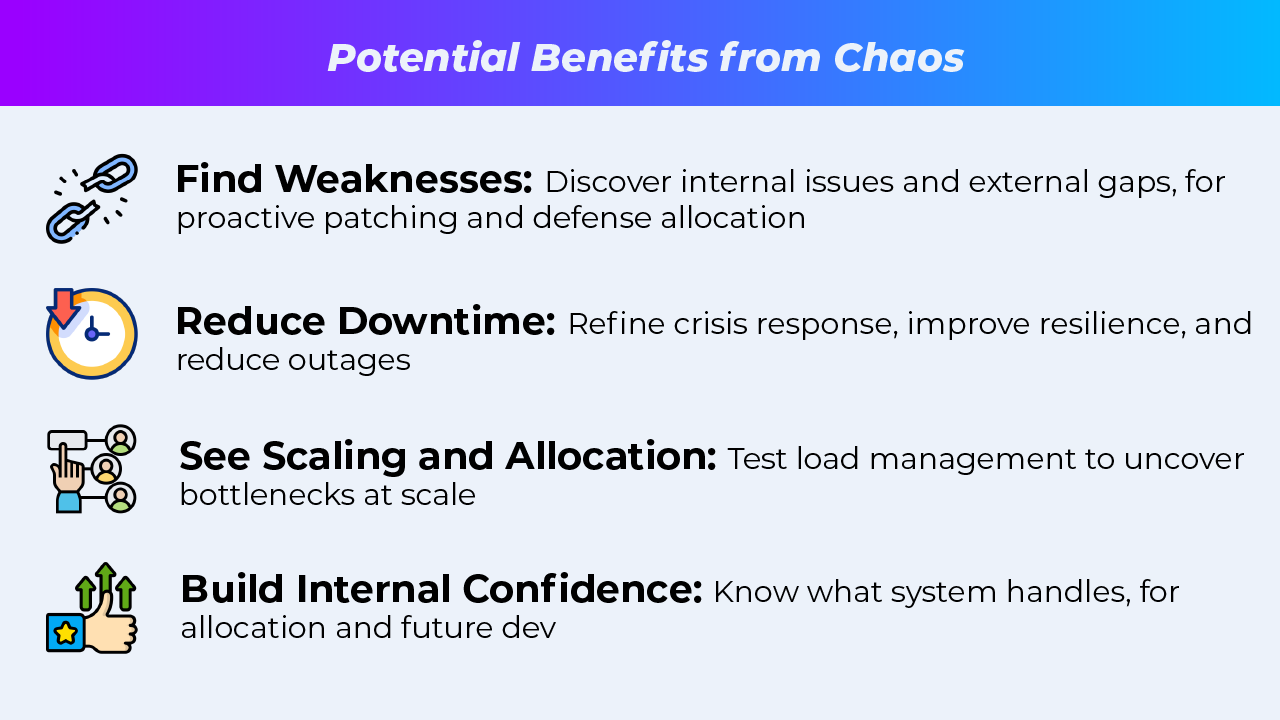

With our increasing dependence on spread system interconnectedness and the surge in cybercrime, 2024 is an ideal time to examine the chaos engineering benefits in DevOps, to improve operational readiness, the capability of your failover, backups and restoration, security weakpoints, and crisis management.

So without further delay, let’s turn the monkey loose (machine gun is optional)!

A Chaos Engineering Case Study: Netflix’s Chaos Monkey

Breaking things in production (on purpose) was first elevated to an art form by the streaming giant Netflix, following a series of nightmare experiences of their own.

The first came in 2008, with their movement from data center to the cloud proving more problematic than expected. With the goal of moving off a single failure point to a spread (and theoretically more redundant) model, they intended to vastly improve system resiliency, but instead got a multiplication of existing problems, without the anticipated improvement in uptime.

Netflix shifted to Amazon Web Services (AWS) in a bid to improve performance, but then, in April, 2011, an enormous AWS cloud outage hit the east coast.

They weathered it, albeit with great difficulty. The outage encouraged a doubling-down on their fault injection testing in DevOps.

The original Chaos Monkey for DevOps was a Java-based tool made from the AWS SDK with a simple purpose: find a virtual machine to disable. Then shut it down.

The goal was to develop a system that could handle losing servers and pieces of application services and keep going strong. With their CE in full-swing, the Netflix teams knew it was going to happen, just not when or where.

Over time, the program has greatly evolved, transforming into a full Chaos Automation Platform (ChAP), with more ambitious goals: it shuts off network ports, slows traffic, trashes their network, even hits company servers covering an entire region, to simulate the AWS outage from 2011.

Still, doing all this at random is only so helpful. The key to the success of their chaos engineering program came from developing a clear set of principles around its implementation.

Examples in Action

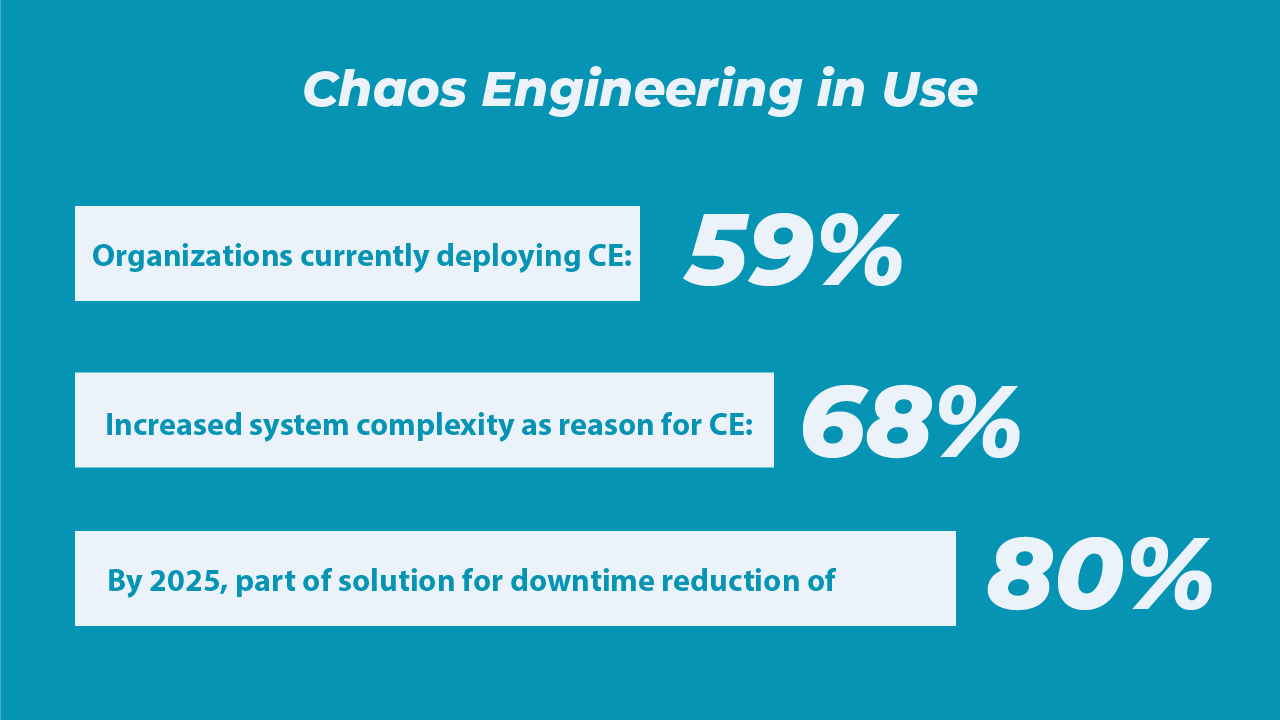

Netflix’s bold expansion of an old idea has caught on in a big way: check out this diagram for a terrific visual demonstration of how far it’s spread.

Google, Amazon, SNCF, LinkedIn, Yahoo, Uber, Kubernetes, Twilio, Microsoft, Dropbox, Facebook, Fidelity, and many more organizations now utilize CE, in a myriad of innovative ways.

Check out these statistics from Gartner:

Some examples:

- LinkedIn: FireDrill fires automated, systematic infrastructure failure triggers or simulations, helping build applications resistant to failures, while LinkedOut tests user experience degradation with failures in downstream calls.

- Days of Chaos: inspired by AWS GameDays, teams volunteer for simulated pre-production failures in a competition, earning points for detections, diagnoses, and resolutions.

- Facebook: their Storm Project simulates massive data center failures to prepare the infrastructure for extreme events.

- SNCF: Processkiller Monkey, Latency Monkey, Fulldisk Monkey, Properties Monkey, and more are loosed by the European train ticketing agency, with a Minions Bestiary.

- Uber: uDestroy breaks things on purpose, so their response teams can get better and handling unexpected failures.

- Google: DiRT (Disaster Recovery Program) is an annual, multi-day testing event to ensure successful disaster handling.

Implementing Your Own Intentional Anarchy

Leaping off the Netflix model, a clearly-outlined approach to CE (or manifesto) has been posted online, titled Principles of Chaos Engineering (principlesofchaos dot org), explaining the need:

“As an industry, we are quick to adopt practices that increase flexibility of development and velocity of deployment. An urgent question follows on the heels of these benefits: How much confidence we can have in the complex systems that we put into production?”

While chaos for the sake of chaos may make teams more adept at resolving crises, to make it really effective, CE as a practice requires clear intent, strong analysis, and carefully considered outcomes.

In other words, it must be a carefully controlled experiment, and the steps proposed in the Principles are these:

1. Define a steady state hypothesis: this should be measurable in the system under normal behavior, and ideally continue with both a control group (normal circumstances) and an experimental group (chaos-injected).

2. Initial experiments should be done in testing, using real-world issues (such as: directed latency, crashing servers, malfunctioning hardware, traffic spikes).

3. Run these experiments live. Without doing it in the real world, you will never know for sure, until the unforeseen happens.

4. Automate the experiments to run continuously (AI, we’re looking at you). Doing this manually over time just isn’t sustainable, and by adding this to your DevOps automation testing, you know failures will come, keeping the work prioritized.

5. Minimize the blast radius: this may go without saying, but the goal is not to cause real customers pain. Of course there must be some allowance for negative results, but it’s the obligation of DevOps to ensure that experimental impacts are promptly contained and resolved.

In general, the goal is to build systems that can maintain their steady state, first through disruptions in testing environments, and then in the real world (production), so that it cannot be disrupted by reasonable interference. (And when it is disrupted, your organization has a high degree of confidence in how to respond.)

Obviously, putting this into practice isn’t easy—breaking things on purpose is almost certain to generate internal resistance.

It’s not cheap to originate, either, and is perhaps best part of an organizational shift to more continuous change, and innovation—that is, acceptance of the fact that things, in this increasingly complex mesh of systems—are never really at rest.

It also requires the right people: designing and implementing chaos experiments requires a clear understanding of how your system works and the goals being pursued.

There are a number of chaos engineering tools available, and more all the time, such as:

- Gremlin: SaaS tool allows you to test resiliency from an array of attacks (memory leaks, disk fill-ups, latency injections, etc), with support for servers, Kubernetes clusters, through various means of interaction (UI, API, command line).

- Litmus/Harness (HCE): Designed for cloud-native, with custom integration to your CI/CD, and a wide array of reporting options to help monitor health before, during, and after tests. (LitmusChaos is open-source, and cloud-native, like Chaos Mesh.)

- Chaos Mesh: Open-source, Kubernetes-based, with a huge variety of attack types.

- Azure Chaos Studio: Fully managed, Microsoft-driven chaos machine, for Azure applications only.

- AWS Fault Injection Simulator (FIS): Also fully-managed, AWS uses prebuilt templates and generates real-world fault tolerance testing. Like above, but for AWS.

- Steadybit: extensive options as either SaaS or on-site, with features that aim to help you spot architecture problems ahead of CE, as well as to control your chaos experiments directly.

- Chaos Monkey: Netflix’s open-source tool is no longer being actively updated, but can still be a potential starting place, compatible with backends supported by Spinnaker (AWS, Google, Azure, Kubernetes, Cloud Foundry).

These DevOps tools for chaos engineering (several open-source) can make the process go, but regardless, it’s recommended that DevOps teams start small, like Netflix did, and iterate, to manage the unexpected consequences.

Extensive monitoring is a must, so these intentional failures can be properly contained, analyzed, and learned from.

Conclusion

With ever-greater complexity, and ever more interactions among layers and between systems, having a good grasp on your organization’s steady state gets harder and harder.

Continuous testing is already a core of DevOps best practices, but CE takes this further, expanding beyond the traditional unit, function, acceptance, and integration testing expectation, to add continuous attacks on your own system’s potential weak points, to help you uncover, refine, and improve.

Chaos engineering reflects a methodology that isn’t just about fixing what’s broken, when it breaks: but instead knowing understanding what’s vulnerable yourself, before someone else breaks it before you can.

As the late, great Stephen Hawking said:

“Chaos, when left alone, tends to multiply.”

References

The Netflix Simian Army, Netflix Technology Blog

Chaos Engineering Saved Your Netflix, IEEE Spectrum

Evaluating operational readiness using chaos engineering simulations on Kubernetes architecture in Big Data, IEEE

PRINCIPLES OF CHAOS ENGINEERING, Principles of Chaos dot org

Chaos Engineering Tools Review and Ratings, Gartner Peer Insights

Resilience testing: Which chaos engineering tool to choose?, Nagarro

Azure Chaos Studio, Microsoft