Investing in AI for your business can be extremely tricky.

Gartner projected in January that worldwide IT spending will exceed $5.6 trillion this year, nearly 10% more than in 2024. Most of this increase is expected to offset price increases in recurring spending. With data centers, hardware, and software leading the growth, generative AI is a big reason why.

Given how new this tech is and how fast it is changing, experimentation and failure continue even as successes mount. The gains are often felt most at scale, through greater efficiency or volume in what a company is already doing.

Upfront costs on bottom-up builds can be stiff, while the alternative of buying ready-made solutions brings the prospect of lock-in and shifting service fees. Either way, experienced AI experts remain hard to get.

Translating all of this to cybersecurity—where reduced risk, improved monitoring, and faster response can be notoriously difficult to quantify—doesn’t make it any easier.

In today’s report, we look to shed some light on this area, taking the temperature of where AI is in cybersecurity (and cybercrime) now, looking at current cost sources, where it’s working, and where it’s not there yet.

The Value-Add from AI in Cybersecurity

The idea is a no-brainer, really. The minute AI burst on the scene, the earliest use cases included customer service, coding assistance, and cybersecurity. (Cybercrime too, unfortunately.)

When properly prepared, AI can analyze high volumes of data in milliseconds, help establish behavioral baselines and identify anomalies, and cover exponentially more ground for identifying risks.
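To make the idea of a behavioral baseline concrete, here is a minimal Python sketch. It assumes a deliberately simple statistical approach (mean and standard deviation of past activity, with a z-score threshold); real products typically use far richer models. The function names, the threshold of 3.0, and the login counts are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize past observations as a (mean, standard deviation) pair."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # With no variation in the history, any different value stands out.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly login counts for one account during a normal period.
normal_logins = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
baseline = build_baseline(normal_logins)

for count in (14, 90):
    label = "anomaly" if is_anomalous(count, baseline) else "normal"
    print(f"{count} logins/hour: {label}")
```

In this sketch, an hour with 14 logins falls inside the baseline, while an hour with 90 is flagged for review. The quality of the flagging depends entirely on how representative the history is, which is one reason data preparation matters so much.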

More sophisticated systems are bringing the capacity to triage alerts, knock down false positives, and even, with agentic behavior, act autonomously to isolate and neutralize risks.

In testing, AI can shift the process left by automating the high volumes of repetitive tasks from test-writing to execution, massively expanding coverage in the process. It can also accelerate patch deployment, decreasing flaws and improving reliability.

Yet with all this wonderful efficiency, AI is also exponentially increasing attacks in scale (and eventually quality), exploiting vulnerabilities and increasing the need for speed all around.

AI-driven Security Operations Centers (SOCs) are now getting much-needed relief. As detailed by our CEO on his Substack, Google recently released agents to relieve Tier 1 and Tier 2 analysts, freeing experienced professionals to prioritize better.

Detection and containment are seeing significant gains, as machine learning models make defenses more adaptable, acting on threat intelligence and behavior to flag anomalies and breaches faster than ever.

Getting a Handle on the AI in Cybersecurity Cost

Okay, so that all sounds great. But how does one pay for it? And given a limited choice, what’s most important?

This is the same question that’s being asked in all areas of AI implementation, with some of the same challenges here as everywhere, including:

- A lack of a clearly defined strategy is leading to duplication of efforts and impacts.

- Skills gaps in talent and a lack of experience hamper management of acquired systems and bar development of custom approaches.

- Cloud-based infrastructures need sufficient monitoring, throughput, and alignment.

- Agentic AI has an extremely varied definition, from workflow automation to greater autonomy, causing misunderstanding of limits and erratic expectations.

One of the most significant issues for cost can be data preparation.

Among the updates needed to get data ready for AI: access, quality, complexity, cleaning/converting, labeling, and strong governance. These improvements are essential for getting the most from AI, but they don’t immediately yield visible returns.

As Barb Wixom, principal research scientist at MIT’s Center for Information Systems Research, told InformationWeek’s Shane Snider:

“AI has to be viewed, not as AI, but as a part of the data value creation or data realization… converting data to money. If organizations and especially leaders just consistently think about AI in that context, you won’t have a problem … Even if you have an extraordinary investment in AI, the outcome could be orders of magnitude greater.”

If your organization lacks the maturity or sophistication needed for this level of data governance, she recommends taking an incremental approach.

Start from the bottom, building capacity as you go. Move off silos and to a central enterprise resource.

AI in Security Automation: Dollars and Sense

Talking dollars, these investments can run from $100,000 to several million dollars just to implement.

The ongoing costs vary wildly, but, including cloud, data management, and model retraining, they add up fast.

AI/ML and cybersecurity professionals are among the most coveted in tech (ISC2’s 2024 study released in October put the shortage of cybersecurity workers at 4.8 million), and those with valuable experience have salaries in the six figures.

AI services also require more throughput, which can add thousands of dollars (to tens of thousands depending on model and volume) to monthly cloud bills.

On top of this, platforms are also getting more expensive as they integrate AI. (Microsoft, for example, increased the cost of Office 365 by some 45% with the inclusion of Copilot, per reporting by TechRepublic.)

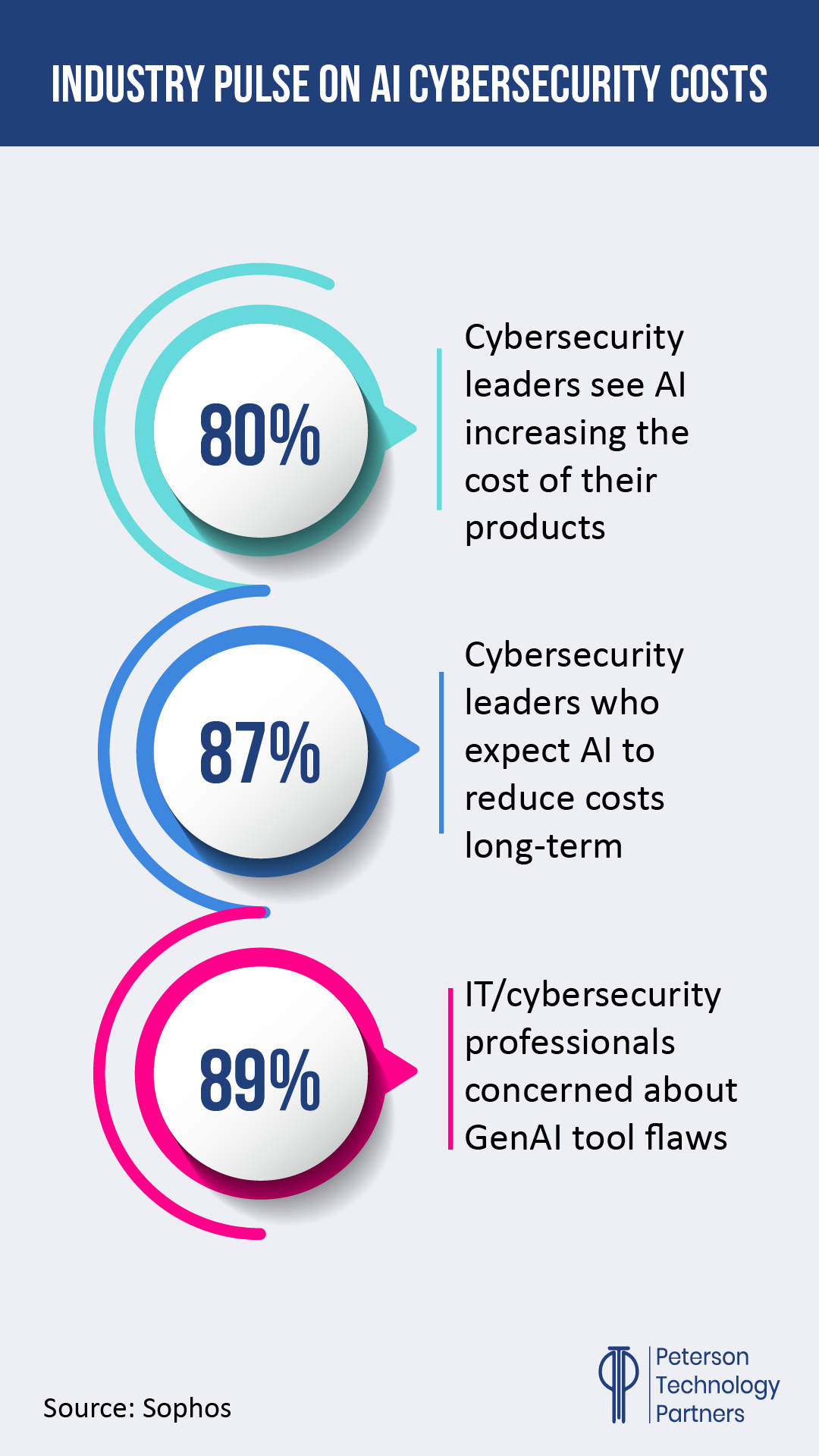

Leaders and field professionals recently surveyed by Sophos say they’re feeling the pinch between this rise in immediate costs and their conviction that, over the long run, AI investments translate to cost reductions and overall efficiency improvements.

Cybersecurity AI Risk Reward: What’s Working Now

Things are changing fast, and the moon is being promised with each new release. But the fact is that some things are working far better than others at this stage.

Working Well:

- AI for Threat Triage: AI can really help filter out noise, reducing false positives and prioritizing real threats. (See above for Google’s Tier 1 and Tier 2 solutions; CrowdStrike claims 40 work hours per week saved by teams here alone.)

- AI-Powered Threat Detection: Better flagging of anomalies also stems from improved analytics. With a better picture of baselines and standard behaviors, AI is capable of pinpointing aberrations faster and more effectively. (VentureBeat points to SOC systems managing to reduce false positives by as much as 70%. But see below.)

- Automated Responses: Maybe it is “pre-agentic,” but AI workflows are handling routine steps of responses well and can process updates, saving your staff time and improving response rate.

- AI in Security Testing and Shift-Left Development: Companies taking advantage of AI in testing are getting far more coverage, better analyzing software behavior before deployment, and saving enormous amounts of time in test case development and maintenance. (We covered this in another report, which you can read here.)

Not There Yet:

Some issues that are delaying or blocking the rollout of this improved AI in the field include:

- The Challenge of AI Cybersecurity Interpretability: Bullish as they are about the long-term benefits, the majority of cybersecurity professionals surveyed remain concerned about AI stability. Useful? Yes. Very. But how reliable is it? How secure? How clearly and easily can I see where it may have gone wrong? The black box nature of many solutions makes sufficient vetting a challenge to be solved.

- AI Cybersecurity False Positives, Too?: Remember above, where we referenced triage, detection, and reduction in false alarms as benefits? Yeah, AI can also cause the reverse problem. Depending on your data and means of implementation, the increased scanning of everything can produce a dizzying number of alarms that need human intervention.

- Unraveling the Legal Implications of AI in Cybersecurity: Automated responses (such as quarantining users or shutting down systems) carry regulatory risks, as do questions around data (in and out). As with interpretability (above), this is another offshoot of AI understanding and transparency.

- Immediate Costs: We led with the increase in recurring budget costs. Another issue can be the up-front expense of adding the components needed to take full advantage of the best these solutions have to offer.
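The false positive point above is easier to see with some rough arithmetic. The Python sketch below assumes a simplified model in which a detector catches a fixed share of real attacks and wrongly flags a fixed share of benign events. Every number and name in it is hypothetical, not a measurement from any product or study.

```python
def expected_alerts(events_scanned, attacks, detection_rate, false_positive_rate):
    """Estimate how many alerts are real versus false for one scanning period."""
    benign = events_scanned - attacks
    true_alerts = attacks * detection_rate
    false_alerts = benign * false_positive_rate
    return true_alerts, false_alerts


def precision(true_alerts, false_alerts):
    """Share of all alerts that correspond to a real attack."""
    total = true_alerts + false_alerts
    return true_alerts / total if total else 0.0


# Hypothetical scenario: 1,000,000 events scanned, 100 of them real attacks,
# a detector that catches 95% of attacks and wrongly flags 1% of benign events.
true_alerts, false_alerts = expected_alerts(1_000_000, 100, 0.95, 0.01)
print(f"real alerts: {true_alerts:.0f}, false alerts: {false_alerts:.0f}")
print(f"share of alerts that are real: {precision(true_alerts, false_alerts):.1%}")
```

Under these assumed rates, roughly 95 real alerts sit among about 9,999 false ones, so fewer than 1 in 100 alerts is genuine. Scanning ten times as many events at the same rates multiplies the false alarms roughly tenfold as well, which is why tuning and triage matter as coverage expands.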

The Realities of AI-Driven Cybercrime

In addition to expense concerns, research is finding that cybercriminals are not yet using AI in many of the most disastrous ways initially predicted.

(And still projected, see below.)

Now it’s Mostly Generative AI in Cybersecurity Attacks

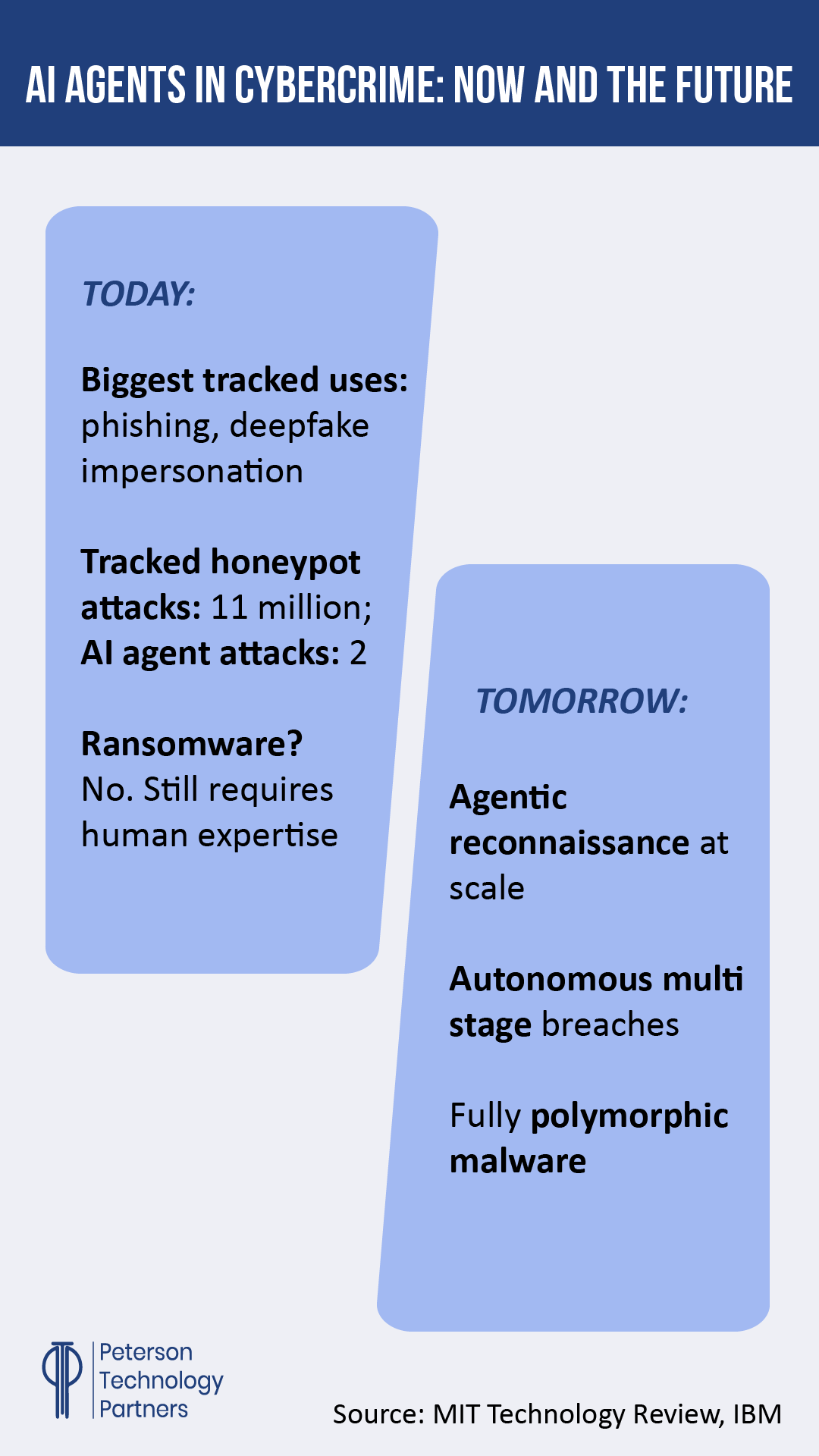

AI agents that autonomously find and exploit systems?

They’re not too common, at least not yet. So far, the biggest AI threat is coming from phishing and fraud, where generative AI is an enormous help in creating more effective snares and accelerating creation and distribution.

We’ve covered many of these methods in depth in other reports and our cybersecurity roundups, but they include language assistance, better personalization, and impressive multimodal fakes (such as audio emulating co-workers, falsified applicants, or even video team meetings made up of fake participants).

AI is also playing a role in increasing the volume of attacks, though the most dangerous implementations are still to come.

Anticipating the Use of AI Agents for Cyber Attacks

Researchers are regularly finding that AI is capable of devastating attacks.

Anthropic’s red team has shown Claude improving from high school level to undergraduate level in one year on challenges that require finding and exploiting software vulnerabilities.

They were also able to replicate human behavior to execute attacks that could steal personally identifiable information at scale.

And yet, as reported in the MIT Technology Review in April, it doesn’t look like criminals are investing the up-front time or money needed to use AI in these more elaborate ways just yet. The organization Palisade Research created a honeypot of vulnerable servers (posing as sources of valuable government and military information) to draw in attacks for study, and then analyzed whether they came from simple bots, humans, or AI.

And while they managed to net 11 million attacks, almost all of them were from humans and simpler bots.

Only eight were potential AI agents, and of those, just two were confirmed as AI agents.

Note that while the risk from ransomware attacks remains high, these also need human expertise to pull off.

At least for now.

The Ugly Future of AI Cyber Attacks

Of course, as Anthropic’s teams have discovered, it’s inevitable that this will change.

Malwarebytes security expert Mark Stockley told the Technology Review’s Rhiannon Williams:

“I think ultimately we’re going to live in a world where the majority of cyberattacks are carried out by agents… It’s really only a question of how quickly we get there.”

While current AI use in cybercrime is improving, smoothing over, or accelerating existing means of attack, autonomous AI systems are going to enable entirely new modes of attack.

Researchers from the University of Illinois Urbana-Champaign (UIUC) have created a benchmark to evaluate AI agent progress at autonomous exploitation. So far, they’re finding agents capable of exploiting 25% of systems where the agents have some context on a vulnerability. In cases where the agents know nothing in advance, the figure stands at 13%.

The researchers hope this serves as a wake-up call, as agents continue to improve on similar benchmarks across business use cases.

How PTP Helps with the Cost of AI Cybersecurity Implementation

While it’s good news that cybercriminals don’t appear to be using AI yet for the worst-case scenarios we’ve been hearing about since ChatGPT exploded on the scene, it’s only a matter of time.

Companies must stay on top of the changes and leverage what AI is doing well now. For the moment, at least, they have the freedom to do so safely as products stabilize and the costs continue to shake out.

Still, from anomaly detection to incident response to workflow automation to shift-left software testing, one constant remains: the need for quality people to get the most from these offerings.

Whether your needs are in data science, cybersecurity, or AI/ML, for custom/bottom-up builds or implementing ready solutions, PTP has options available.

With established onshore, offshore, and nearshore pipelines, we can help you get what you need in the way, and at the cost, that’s best for you.

Conclusion: The ChatGPT Moment

Early adopters can pay high prices, but as AI cybersecurity costs continue to stabilize, the risks, costs, and benefits are becoming increasingly clear.

As of now, AI can increase the cost of ongoing services and fail to deliver if your data isn’t where it needs to be. It can also empower your SOC, lighten the burden on your people, and accelerate your response when attacks do happen.

With new research showing the criminals, too, are going for the low-hanging fruit first, companies can take a breath, take stock, and get it right. But they can’t afford to wait.

As UIUC researcher Daniel Kang told the Review’s Rhiannon Williams, we must be proactive about the cybersecurity risks “before it has a ChatGPT moment.”

“I’m afraid people won’t realize this until it punches them in the face.”

References

Gartner Forecasts Worldwide IT Spending to Grow 9.8% in 2025, Gartner

Beyond the hype: The business reality of AI for cybersecurity, Sophos

AI’s Hidden Cost: Will Data Preparation Break Your Budget? and The Cost of AI Security, InformationWeek

How to Budget for AI Cybersecurity Solutions?, FinModelsLab

CrowdStrike Leads Agentic AI Innovation in Cybersecurity with Charlotte AI Detection Triage, CrowdStrike Blog

From alerts to autonomy: How leading SOCs use AI copilots to fight signal overload and staffing shortfalls, VentureBeat

Cyberattacks by AI agents are coming, MIT Technology Review

Progress from our Frontier Red Team, Anthropic Blog

FAQs

Bottom line: How much does it typically cost to implement AI in your cybersecurity?

Obviously, there’s no simple answer for all companies of all scales across myriad solutions. But estimates land anywhere from $100,000 all the way to several million dollars.

Ongoing expenses, including services, cloud compute, infrastructure, and labor, can add 15%+ per year. Yet over the long term, nearly 90% of leaders in the field believe AI solutions will mean far greater savings, in addition to helping them stay more adaptable and protected from shifting threats.
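For a rough sense of how these figures combine, here is a back-of-the-envelope Python sketch. It assumes the 15% applies each year to the initial implementation cost (the estimates above do not specify the base), and it uses a hypothetical $2,000,000 to stand in for "several million." The function name and the three-year horizon are likewise illustrative.

```python
def projected_cost(implementation_cost, annual_ongoing_rate, years):
    """Implementation cost plus a flat yearly ongoing cost over `years`."""
    # Assumption: ongoing cost is a fixed percentage of the initial spend.
    ongoing_per_year = implementation_cost * annual_ongoing_rate
    return implementation_cost + ongoing_per_year * years


# Low end of the quoted range and a hypothetical high-end figure.
for implementation in (100_000, 2_000_000):
    total = projected_cost(implementation, 0.15, 3)
    print(f"${implementation:,} to implement: about ${total:,.0f} over 3 years")
```

Under these assumptions, a $100,000 implementation comes to about $145,000 over three years, and a $2,000,000 one to about $2,900,000. Actual budgets will differ, since ongoing costs rarely stay flat and the 15% is quoted as a floor.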

In what areas is AI bringing the best ROI now?

Behavioral analysis and improved anomaly detection, automation of repetitive tasks (including enabling shift-left in testing to lessen vulnerabilities), faster response to breaches, and, increasingly, threat triage and reducing false positives are the areas seeing the best returns for organizations at present.

Is it really true AI is causing a spike in cyberattacks and transforming the attacks being seen?

Yes and no.

Yes, to the first point, but maybe not in the way you’re hearing most about. Generative AI is being widely used for phishing and scams overall, to improve the quality and medium used. It’s also exponentially increasing the volume.

On the second point, not yet. While researchers are continuously finding ways AI can be used to attack our digital systems in new and frightening ways, cybercriminals do not yet appear to be leveraging AI agents, for example, in these ways. While it’s undoubtedly coming, this gives experts a chance to catch their breath and prepare before cybercrime has its own “ChatGPT moment.”